(Using spark-2.1.0-bin-hadoop2.7, downloaded from the official website, on a local machine)

When I execute a simple Spark command in spark-shell, it prints thousands and thousands of lines of code before throwing an error. What is this "code"?

I was running Spark on my local machine. The command I ran was a simple `df.count`, where `df` is a DataFrame.

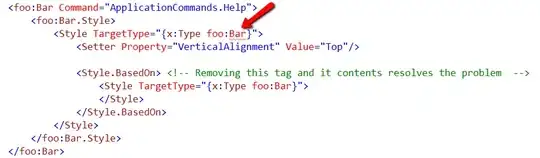

Please see a screenshot below (the codes fly by so fast I could only take screenshots to see what's going on). More details are below the image.

More details:

I created the DataFrame `df` like this:

```scala
import org.apache.spark.rdd.RDD
import org.apache.spark.sql.{DataFrame, Row}
import org.apache.spark.sql.types.StructType

// rows: RDD[Row]
// schema: StructType
// There were about 3000 columns and 700 rows (testing set) of data in df.
val df: DataFrame = spark.createDataFrame(rows, schema)

// The following line ran successfully and returned the correct value
rows.count

// The following line threw an exception after printing lots of generated code, as shown in the screenshot above
df.count
```
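For anyone who wants to try this without my data, here is a self-contained sketch that builds a DataFrame of the same shape (3000 columns, 700 rows) the same way, via `createDataFrame(rows, schema)`. The all-`DoubleType` schema, the column names `c0` to `c2999`, the synthetic values, and the names `WideDataFrameRepro`, `wideSchema`, and `rowValues` are hypothetical stand-ins for my real `rows` and `schema`, so this is an assumption-based sketch that may or may not trigger exactly the same error.

```scala
import org.apache.spark.rdd.RDD
import org.apache.spark.sql.{DataFrame, Row, SparkSession}
import org.apache.spark.sql.types.{DoubleType, StructField, StructType}

// Hypothetical stand-alone reproduction: same shape as in the question
// (about 3000 columns x 700 rows), but with a made-up all-DoubleType schema.
object WideDataFrameRepro {
  val NumCols = 3000
  val NumRows = 700

  // Hypothetical column names c0, c1, ..., c2999, all non-nullable doubles
  def wideSchema: StructType =
    StructType((0 until NumCols).map(i => StructField(s"c$i", DoubleType, nullable = false)))

  // Synthetic values for row r; the actual numbers do not matter here
  def rowValues(r: Int): Seq[Double] =
    (0 until NumCols).map(c => (r * NumCols + c).toDouble)

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .master("local[*]")
      .appName("wide-dataframe-repro")
      .getOrCreate()

    // RDD[Row] built from the synthetic values, one Row per index
    val rows: RDD[Row] = spark.sparkContext
      .parallelize(0 until NumRows)
      .map(r => Row.fromSeq(rowValues(r)))

    val df: DataFrame = spark.createDataFrame(rows, wideSchema)

    // Plain RDD action: counts the Row objects directly
    println(rows.count())

    // DataFrame action: the equivalent call is the one that failed in the question on 2.1.0
    println(df.count())

    spark.stop()
  }
}
```

In spark-shell, the body of `main` can be pasted without the builder and `spark.stop()`, since the shell already provides `spark`.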

The exception thrown after the generated code is:

```
...
/* 181897 */ apply_81(i);
/* 181898 */ result.setTotalSize(holder.totalSize());
/* 181899 */ return result;
/* 181900 */ }
/* 181901 */ }

at org.apache.spark.sql.catalyst.expressions.codegen.CodeGenerator$.org$apache$spark$sql$catalyst$expressions$codegen$CodeGenerator$$doCompile(CodeGenerator.scala:889)
at org.apache.spark.sql.catalyst.expressions.codegen.CodeGenerator$$anon$1.load(CodeGenerator.scala:941)
at org.apache.spark.sql.catalyst.expressions.codegen.CodeGenerator$$anon$1.load(CodeGenerator.scala:938)
at org.spark_project.guava.cache.LocalCache$LoadingValueReference.loadFuture(LocalCache.java:3599)
at org.spark_project.guava.cache.LocalCache$Segment.loadSync(LocalCache.java:2379)
at org.spark_project.guava.cache.LocalCache$Segment.lockedGetOrLoad(LocalCache.java:2342)
at org.spark_project.guava.cache.LocalCache$Segment.get(LocalCache.java:2257)
... 29 more
Caused by: org.codehaus.janino.JaninoRuntimeException: Code of method "(Lorg/apache/spark/sql/catalyst/expressions/GeneratedClass;[Ljava/lang/Object;)V" of class "org.apache.spark.sql.catalyst.expressions.GeneratedClass$SpecificUnsafeProjection" grows beyond 64 KB
at org.codehaus.janino.CodeContext.makeSpace(CodeContext.java:941)
at org.codehaus.janino.CodeContext.write(CodeContext.java:854)
at org.codehaus.janino.CodeContext.writeShort(CodeContext.java:959)
```

Edit: As @TzachZohar pointed out, this looks like one of the known bugs (https://issues.apache.org/jira/browse/SPARK-16845), which has been fixed in the Spark source but not yet included in a release.

I pulled Spark master, built it from source, and reran my example. Now I get a new exception after the generated code:

```
/* 308608 */ apply_1560(i);
/* 308609 */ apply_1561(i);
/* 308610 */ result.setTotalSize(holder.totalSize());
/* 308611 */ return result;
/* 308612 */ }
/* 308613 */ }

at org.apache.spark.sql.catalyst.expressions.codegen.CodeGenerator$.org$apache$spark$sql$catalyst$expressions$codegen$CodeGenerator$$doCompile(CodeGenerator.scala:941)
at org.apache.spark.sql.catalyst.expressions.codegen.CodeGenerator$$anon$1.load(CodeGenerator.scala:998)
at org.apache.spark.sql.catalyst.expressions.codegen.CodeGenerator$$anon$1.load(CodeGenerator.scala:995)
at org.spark_project.guava.cache.LocalCache$LoadingValueReference.loadFuture(LocalCache.java:3599)
at org.spark_project.guava.cache.LocalCache$Segment.loadSync(LocalCache.java:2379)
at org.spark_project.guava.cache.LocalCache$Segment.lockedGetOrLoad(LocalCache.java:2342)
at org.spark_project.guava.cache.LocalCache$Segment.get(LocalCache.java:2257)
... 29 more
Caused by: org.codehaus.janino.JaninoRuntimeException: Constant pool for class org.apache.spark.sql.catalyst.expressions.GeneratedClass$SpecificUnsafeProjection has grown past JVM limit of 0xFFFF
at org.codehaus.janino.util.ClassFile.addToConstantPool(ClassFile.java:499)
```

It looks like a pull request is addressing the second problem: https://github.com/apache/spark/pull/16648