I was using a dictionary as a lookup table, but I started to wonder whether a list would be better for my application, since the number of entries in my lookup table isn't that big. I know lists use C arrays under the hood, which led me to conclude that lookup in a list with just a few items would be faster than in a dictionary (accessing a few elements in an array is faster than computing a hash).

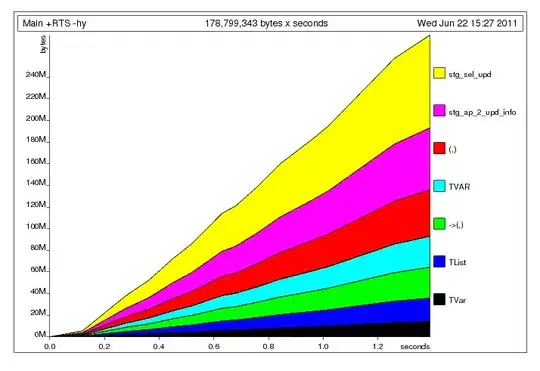

I decided to profile the alternatives but the results surprised me. List lookup was only better with a single element! See the following figure (log-log plot):

So here comes the question: Why do list lookups perform so poorly? What am I missing?

As a side question, something else that caught my attention was a small "discontinuity" in the dict lookup time after approximately 1000 entries. I plotted the dict lookup time alone to show it.

p.s.1 I know about O(n) vs. amortized O(1) time for arrays and hash tables, but for a small number of elements, iterating over an array is usually faster than using a hash table.
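To make the O(n) vs. O(1) distinction concrete, here is a minimal sketch that counts how many equality comparisons and hash calls a single membership test triggers. It is written for Python 3 (not the 2.7 I used for the measurements), and `CountingKey` and `count_calls` are hypothetical helpers introduced only for this illustration. It shows the number of Python-level calls, not their cost in time.

```python
class CountingKey(object):
    """Hypothetical helper: wraps an int and counts __eq__ / __hash__ calls."""
    eq_calls = 0
    hash_calls = 0

    def __init__(self, value):
        self.value = value

    def __eq__(self, other):
        CountingKey.eq_calls += 1
        return self.value == other.value

    def __hash__(self):
        CountingKey.hash_calls += 1
        return hash(self.value)


def count_calls(container, needle):
    """Return (found, eq_calls, hash_calls) for one `needle in container` test."""
    CountingKey.eq_calls = 0
    CountingKey.hash_calls = 0
    found = needle in container
    return found, CountingKey.eq_calls, CountingKey.hash_calls


n = 8
keys = [CountingKey(i) for i in range(n)]
as_list = list(keys)
as_dict = dict.fromkeys(keys)   # each key is hashed once while building

# Equal to keys[n // 2] but a distinct object, so no identity shortcut applies.
needle = CountingKey(n // 2)

list_result = count_calls(as_list, needle)   # scans elements 0..4, no hashing
dict_result = count_calls(as_dict, needle)   # hashes the needle once, then probes

print("list:", list_result)
print("dict:", dict_result)
```

In the list case the scan compares the needle against each element until it finds a match, so the number of comparisons grows with the position of the match. In the dict case the needle is hashed once and, as long as there are no collisions, compared against a single stored key.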

p.s.2 Here is the code I used to compare the dict and list lookup times:

    import timeit

    # Container sizes to test: 1, 2, 4, ..., 2**14
    lengths = [2 ** i for i in xrange(15)]

    list_time = []
    dict_time = []

    for l in lengths:
        # Look up the middle value (l/2 is integer division in Python 2)
        list_time.append(timeit.timeit('%i in d' % (l/2), 'd=range(%i)' % l))
        dict_time.append(timeit.timeit('%i in d' % (l/2),
                                       'd=dict.fromkeys(range(%i))' % l))
        print l, list_time[-1], dict_time[-1]
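For anyone who wants to reproduce this on Python 3, a rough port might look like the sketch below. It is an assumption on my part, not what I actually ran: `compare_lookup` is a hypothetical wrapper, `range` is wrapped in `list(...)` because Python 3's `range` is no longer a list, `//` replaces the integer `/`, and the default `number` is lowered from timeit's default of 1,000,000 so the run finishes quickly.

```python
import timeit


def compare_lookup(lengths, number=10000):
    """Time `x in d` for a list and a dict of each length.

    Returns a list of (length, list_seconds, dict_seconds) tuples.
    """
    results = []
    for n in lengths:
        stmt = '%d in d' % (n // 2)   # look up the middle value
        list_t = timeit.timeit(stmt, 'd = list(range(%d))' % n,
                               number=number)
        dict_t = timeit.timeit(stmt, 'd = dict.fromkeys(range(%d))' % n,
                               number=number)
        results.append((n, list_t, dict_t))
    return results


if __name__ == '__main__':
    for n, list_t, dict_t in compare_lookup([2 ** i for i in range(15)]):
        print(n, list_t, dict_t)
```

Because `number` differs from the original run, the absolute times are not comparable with the figure; only the relative shape of the two curves is.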

p.s.3 Using Python 2.7.13