I'd like to understand Spark's DAG model. According to the official Spark docs, all transformations in Spark are lazy and, by default, each transformed RDD may be recomputed each time you run an action on it. So I wrote a small program, shown below:

scala> val lines = sc.textFile("C:\\Spark\\README.md")

lines: org.apache.spark.rdd.RDD[String] = C:\Spark\README.md MapPartitionsRDD[1] at textFile at <console>:24

scala> val breakLInes = lines.flatMap(line=>line.split(" "))

breakLInes: org.apache.spark.rdd.RDD[String] = MapPartitionsRDD[2] at flatMap at <console>:26

scala> val createTuple = breakLInes.map(line=>(line,1))

createTuple: org.apache.spark.rdd.RDD[(String, Int)] = MapPartitionsRDD[3] at map at <console>:28

scala> val wordCount = createTuple.reduceByKey(_+_)

wordCount: org.apache.spark.rdd.RDD[(String, Int)] = ShuffledRDD[4] at reduceByKey at <console>:30

scala> wordCount.first

res0: (String, Int) = (package,1)

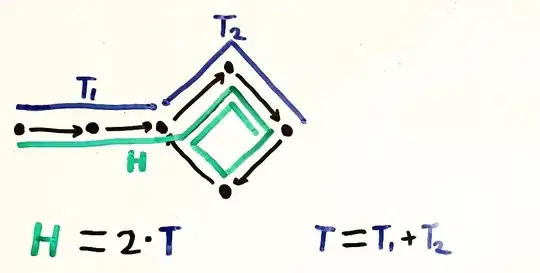

Now moving to spark UI below is my DAG Visualization for the first action:

Then I ran the same action again:

scala> wordCount.first

res0: (String, Int) = (package,1)

In the Spark UI, this is the DAG visualization for the second action:

The docs say that, by default, each transformed RDD may be recomputed each time you run an action on it. So why is stage 2 skipped here? Since nothing has been cached, I would have expected stage 2 to be computed again.
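
One way to check this outside the UI is to count how often the `flatMap` function actually runs across the two actions. The snippet below is only a sketch for the spark-shell: it assumes `sc` is the shell's SparkContext and reuses the file path from above. The names `splitCalls`, `callsAfterFirst` and `callsAfterSecond` are made up for this illustration. Accumulator updates made inside transformations can be applied more than once if a task is retried, so the numbers are an indication, not a proof.

```scala
// Paste into spark-shell with :paste. `sc` is the SparkContext the shell provides.
// `splitCalls` is a hypothetical name used only for this sketch.
val splitCalls = sc.longAccumulator("splitCalls")

val lines = sc.textFile("C:\\Spark\\README.md")
val words = lines.flatMap { line =>
  splitCalls.add(1) // one increment per input line that flatMap processes
  line.split(" ")
}
val wordCount = words.map(word => (word, 1)).reduceByKey(_ + _)

// Print the lineage Spark has recorded for this RDD, including the shuffle dependency.
println(wordCount.toDebugString)

wordCount.first() // first action
val callsAfterFirst: Long = splitCalls.value

wordCount.first() // second action on the same, uncached RDD
val callsAfterSecond: Long = splitCalls.value

println(s"flatMap calls after first action:  $callsAfterFirst")
println(s"flatMap calls after second action: $callsAfterSecond")
```

If the second number equals the first, the `flatMap` side was not executed again for the second action, which would be consistent with the skipped stage shown in the UI.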