The existing answers here and elsewhere were an excellent starting point, but I found they needed some tweaking to work with TensorFlow 2.x and Keras `flow_from_directory`*. This is what I came up with.

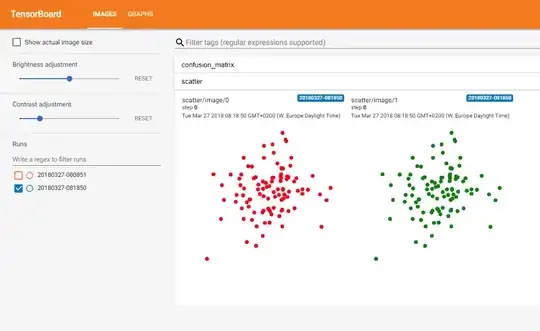

My aim was to verify the data augmentation process, so the images I write to TensorBoard are the augmented training data. That's not exactly what the OP wanted: they would have to change `on_batch_end` to `on_epoch_end` and access the model outputs (something I haven't looked into, but I'm sure it's possible).

As in Fabio Perez's answer with the astronaut, you can scroll through the epochs by dragging the orange slider, which shows differently augmented copies of each image written to TensorBoard. Be careful with large datasets trained over many epochs: since this routine saves a copy of every 1000th image in every epoch, you might end up with a large tfevents file.
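To get a feel for how quickly this grows, here is a rough count of image summaries written. It assumes each training file index appears exactly once per epoch and uses the same `% 1000 == 0` rule as the callback; the helper names are hypothetical, and it counts summaries, not bytes (the file size also depends on image resolution).

```python
import math


def images_logged_per_epoch(samples, every=1000):
    """Count file indices in range(samples) that are multiples of `every`."""
    return math.ceil(samples / every)


def total_image_summaries(samples, epochs, every=1000):
    """Total image summaries written over a whole training run."""
    return images_logged_per_epoch(samples, every) * epochs


# e.g. 50,000 training images over 100 epochs
print(total_image_summaries(50_000, 100))  # 5000
```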

The callback, saved as `tensorflow_image_callback.py`:

```python
import tensorflow as tf


class TensorBoardImage(tf.keras.callbacks.Callback):
    """Writes a sample of augmented training images to TensorBoard."""

    def __init__(self, logdir, train, validation=None):
        super().__init__()
        self.logdir = logdir
        self.train = train            # a DirectoryIterator from flow_from_directory
        self.validation = validation  # stored for reference, not used below
        self.file_writer = tf.summary.create_file_writer(logdir)
        self.epoch = 0

    def on_epoch_begin(self, epoch, logs=None):
        # Track the epoch directly instead of deriving it from
        # self.train.total_batches_seen, which is also advanced by the
        # self.train[batch] call below and miscounts when the last batch is partial.
        self.epoch = epoch

    def on_batch_end(self, batch, logs=None):
        images_or_labels = 0  # 0 = images, 1 = labels
        # Indexing the generator re-applies random augmentation, so these are fresh
        # augmented copies of the files in this batch, not the exact tensors trained on.
        imgs = self.train[batch][images_or_labels]

        # Since the training data is shuffled each epoch, use index_array to find
        # something which uniquely identifies the image and is constant throughout training.
        first_index_in_batch = batch * self.train.batch_size
        last_index_in_batch = min(first_index_in_batch + self.train.batch_size,
                                  len(self.train.index_array))
        img_indices = self.train.index_array[first_index_in_batch:last_index_in_batch]

        # Convert float to uint8: shift the batch into the range 0-255.
        imgs = imgs - imgs.min()
        imgs = imgs * (255.0 / max(imgs.max(), 1e-8))  # avoid dividing by zero
        imgs = tf.cast(imgs, tf.uint8)

        with self.file_writer.as_default():
            for ix, img in enumerate(imgs):
                # Only post 1 out of every 1000 images to TensorBoard.
                if img_indices[ix] % 1000 == 0:
                    # str(img_indices[ix]) would also work as a unique identifier,
                    # but the filename makes it easier to find the unaugmented image.
                    img_filename = self.train.filenames[img_indices[ix]]
                    img_tensor = tf.expand_dims(img, 0)  # tf.summary.image needs a 4D tensor
                    tf.summary.image(img_filename, img_tensor, step=self.epoch)
```
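The index bookkeeping in the callback is the subtle part, so here is a framework-free sketch of it in NumPy. The file list, sizes and per-epoch shuffle are made-up stand-ins for the `DirectoryIterator`'s `filenames`, `batch_size` and `index_array`; the sketch only checks that looking each position up through the shuffled array picks the same files in every epoch, even though their batch positions change.

```python
import numpy as np

# Hypothetical stand-ins for DirectoryIterator attributes:
# 2,500 files, batches of 32, and a reshuffled index_array each epoch.
samples, batch_size = 2500, 32
filenames = [f"class_a/img_{i:05d}.png" for i in range(samples)]
rng = np.random.default_rng(0)


def indices_for_batch(index_array, batch, batch_size):
    """File indices covered by batch number `batch` of the current epoch."""
    first = batch * batch_size
    last = min(first + batch_size, len(index_array))
    return index_array[first:last]


logged_per_epoch = []
for epoch in range(3):
    index_array = rng.permutation(samples)  # new shuffle every epoch
    n_batches = -(-samples // batch_size)   # ceiling division, like len(generator)
    logged = []
    for batch in range(n_batches):
        for file_index in indices_for_batch(index_array, batch, batch_size):
            if file_index % 1000 == 0:
                logged.append(filenames[file_index])
    logged_per_epoch.append(sorted(logged))

# The same three files are selected in every epoch.
print(logged_per_epoch[0])  # ['class_a/img_00000.png', 'class_a/img_01000.png', 'class_a/img_02000.png']
```

Using the position in the batch directly (instead of going through `index_array`) would pick a different, random set of files each epoch, and the TensorBoard slider would no longer compare versions of the same image.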

Integrate it with your training like this:

```python
from tensorflow import keras

import tensorflow_image_callback  # the module saved above

# batch_size, n_epochs and a compiled `model` are assumed to be defined elsewhere.

train_augmentation = keras.preprocessing.image.ImageDataGenerator(
    rotation_range=20,
    shear_range=10,
    zoom_range=0.2,
    width_shift_range=0.2,
    height_shift_range=0.2,
    brightness_range=[0.8, 1.2],
    horizontal_flip=False,
    vertical_flip=False,
)
train_data_generator = train_augmentation.flow_from_directory(
    directory='/some/path/train/',
    class_mode='categorical',
    batch_size=batch_size,
    shuffle=True,
)

valid_augmentation = keras.preprocessing.image.ImageDataGenerator()
valid_data_generator = valid_augmentation.flow_from_directory(
    directory='/some/path/valid/',
    class_mode='categorical',
    batch_size=batch_size,
    shuffle=False,
)

tensorboard_log_dir = '/some/path'
tensorboard_callback = keras.callbacks.TensorBoard(log_dir=tensorboard_log_dir,
                                                   update_freq='batch')
tensorboard_image_callback = tensorflow_image_callback.TensorBoardImage(
    logdir=tensorboard_log_dir,
    train=train_data_generator,
    validation=valid_data_generator,
)

model.fit(x=train_data_generator,
          epochs=n_epochs,
          validation_data=valid_data_generator,
          validation_freq=1,
          callbacks=[tensorboard_callback, tensorboard_image_callback])
```

*I later realised that `flow_from_directory` has a `save_to_dir` option, which would have been sufficient for my purposes. Adding that option is much simpler, but a callback like this has two advantages: it displays the images in TensorBoard, where multiple versions of the same image can be compared, and it lets you choose how many images are saved. `save_to_dir` saves a copy of every single augmented image, which quickly adds up to a lot of disk space.