I've been exploring query optimizations in a recent build of Spark SQL (2.3.0-SNAPSHOT) and noticed different physical plans for semantically identical queries.

Let's assume I've got to count the number of rows in the following dataset:

val q = spark.range(1)

I could count the number of rows as follows:

q.count

q.collect.size

q.rdd.count

q.queryExecution.toRdd.count

My initial thought was that it's almost a constant operation (surely due to a local dataset) that would somehow have been optimized by Spark SQL and would give a result immediately, esp. the 1st one where Spark SQL is in full control of the query execution.

A look at the physical plans of the queries led me to believe that the most efficient query would be the last:

q.queryExecution.toRdd.count

The reasons being that:

- It avoids deserializing rows from their InternalRow binary format

- The query is codegened

- There's only one job with a single stage

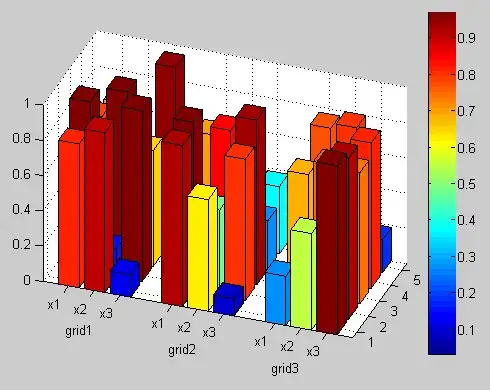

The physical plan is as simple as that.
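For anyone who wants to reproduce the comparison, here is a minimal sketch of how the plans and RDD lineages can be inspected. It assumes a local session (in spark-shell `spark` already exists, so the builder call just returns it); the app name is made up for illustration, and the timings printed by `spark.time` are rough wall-clock numbers, not a benchmark.

```scala
import org.apache.spark.sql.SparkSession

// Assumption: a local session; "count-plans" is an arbitrary, illustrative app name.
val spark = SparkSession.builder()
  .master("local[*]")
  .appName("count-plans")
  .getOrCreate()

val q = spark.range(1)

// Physical plan of the dataset itself
q.explain()

// RDD lineage of the two RDD-based variants:
// q.rdd converts rows to JVM objects, q.queryExecution.toRdd keeps InternalRow
println(q.rdd.toDebugString)
println(q.queryExecution.toRdd.toDebugString)

// Rough wall-clock time of each variant (spark.time prints the elapsed time)
spark.time(q.count())
spark.time(q.collect().size)
spark.time(q.rdd.count())
spark.time(q.queryExecution.toRdd.count())
```

The number of jobs and stages each variant triggers can then be checked in the web UI, as in the screenshot above.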

Is my reasoning correct? If so, would the answer be different if I read the dataset from an external data source (e.g. files, JDBC, Kafka)?

The main question is: what factors should be taken into consideration to say whether one query is more efficient than the others (per this example)?

The other execution plans, for completeness.