A HashMap contains a certain number of buckets. It uses each key's hashCode to determine which bucket an entry goes into. For simplicity's sake, imagine it as a modulus.

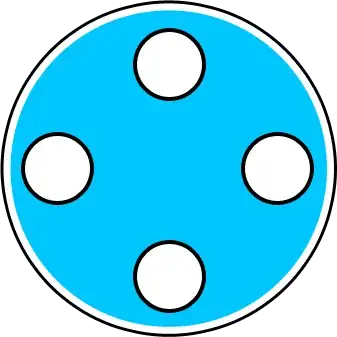

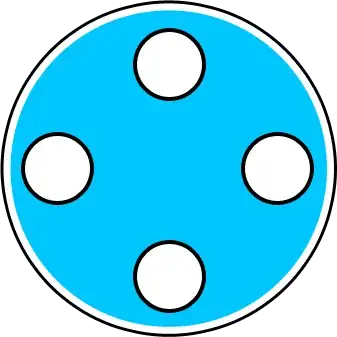

If our hashCode is 123456 and we have 4 buckets, 123456 % 4 = 0, so the item goes in the first bucket, Bucket 1.
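Here is a minimal sketch of that bucket selection. The class and method names are made up for illustration. `bucketByModulus` is the simplified picture used in this answer, while `bucketByMask` is closer to what `java.util.HashMap` actually does (it mixes the high bits of the hash into the low bits and masks against a power-of-two table length), so the two do not always agree on the bucket.

```java
class BucketIndexSketch {
    // Simplified model from this answer: choose a bucket with a plain modulus.
    static int bucketByModulus(int hash, int bucketCount) {
        // floorMod keeps the result non-negative even for negative hash codes.
        return Math.floorMod(hash, bucketCount);
    }

    // Closer to java.util.HashMap: spread the high bits downwards, then mask.
    // Assumes tableLength is a power of two.
    static int bucketByMask(int hash, int tableLength) {
        int spread = hash ^ (hash >>> 16);
        return spread & (tableLength - 1);
    }

    public static void main(String[] args) {
        System.out.println(bucketByModulus(123456, 4)); // prints 0
        System.out.println(bucketByMask(123456, 4));    // prints 1
    }
}
```

Either way the idea is the same: the hash code alone decides the bucket, so keys with related hash codes can pile up in the same place.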

If our hashCode function is good, it should provide an even distribution so that all the buckets are used somewhat equally. In that case, each bucket uses a linked list to store its entries.

But you can't rely on people to implement good hash functions. People will often write poor hash functions, which results in an uneven distribution. It's also possible that we could just get unlucky with our inputs.

The less even this distribution is, the further we move from O(1) operations and the closer we get to O(n) operations, because a lookup has to walk through every entry in an overfull bucket's list.
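To make the effect concrete, here is a small sketch that counts how many entries land in each bucket under the simplified modulus model. The class name, method name, and sample hash codes are all invented for illustration.

```java
import java.util.Arrays;

class DistributionSketch {
    // Counts how many hash codes fall into each bucket (modulus model).
    static int[] bucketSizes(int[] hashes, int bucketCount) {
        int[] sizes = new int[bucketCount];
        for (int h : hashes) {
            sizes[Math.floorMod(h, bucketCount)]++;
        }
        return sizes;
    }

    public static void main(String[] args) {
        int[] even = {0, 1, 2, 3, 4, 5, 6, 7};
        int[] skewed = {0, 4, 8, 12, 16, 20, 24, 28}; // all multiples of 4

        System.out.println(Arrays.toString(bucketSizes(even, 4)));   // prints [2, 2, 2, 2]
        System.out.println(Arrays.toString(bucketSizes(skewed, 4))); // prints [8, 0, 0, 0]
    }
}
```

With the skewed input, every lookup ends up scanning the same eight-entry list, which is the O(n) behaviour described above.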

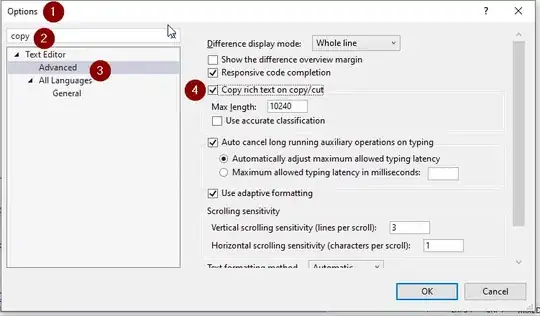

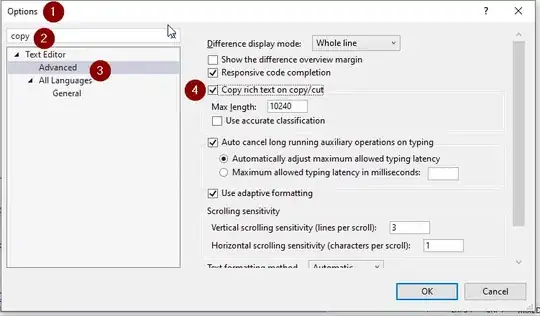

The implementation of HashMap tries to mitigate this by organising some buckets into trees rather than linked lists if the buckets become too large. This is what TREEIFY_THRESHOLD = 8 is for. If a bucket contains more than eight items, it should become a tree.

This tree is a Red-Black tree, presumably chosen because it offers some worst-case guarantees. It is first sorted by hash code. If the hash codes are the same, it uses the compareTo method of Comparable if the objects implement that interface, else the identity hash code.

If entries are removed from the map, the number of entries in the bucket might reduce such that this tree structure is no longer necessary. That's what UNTREEIFY_THRESHOLD = 6 is for. If the number of entries in a bucket falls to six or fewer, we might as well go back to using a linked list.

Finally, there is the MIN_TREEIFY_CAPACITY = 64.

When a hash map grows in size, it automatically resizes itself to have more buckets. If we have a small HashMap, the likelihood of getting very full buckets is quite high, because there aren't many different buckets to put entries into. It's much better to have a bigger HashMap, with more buckets that are less full. This constant says not to start turning buckets into trees while the HashMap has fewer than 64 buckets; it should resize to be larger first instead.
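Putting TREEIFY_THRESHOLD and MIN_TREEIFY_CAPACITY together, the decision after an insertion can be sketched roughly like this. This is a simplified model of the rules as described in this answer, not the JDK source; the class, enum, and method names are hypothetical.

```java
class TreeifyDecisionSketch {
    static final int TREEIFY_THRESHOLD = 8;
    static final int MIN_TREEIFY_CAPACITY = 64;

    enum Action { KEEP_LIST, RESIZE_TABLE, CONVERT_TO_TREE }

    // bucketSize: entries in the bucket after the insertion.
    // tableCapacity: current number of buckets in the map.
    static Action afterInsert(int bucketSize, int tableCapacity) {
        if (bucketSize <= TREEIFY_THRESHOLD) {
            return Action.KEEP_LIST;        // bucket is still small enough
        }
        if (tableCapacity < MIN_TREEIFY_CAPACITY) {
            return Action.RESIZE_TABLE;     // small map: spread entries out first
        }
        return Action.CONVERT_TO_TREE;      // large map, crowded bucket
    }

    public static void main(String[] args) {
        System.out.println(afterInsert(9, 16)); // prints RESIZE_TABLE
        System.out.println(afterInsert(9, 64)); // prints CONVERT_TO_TREE
    }
}
```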

To answer your question about the performance gain, these optimisations were added to improve the worst case. You would probably only see a noticeable performance improvement because of these optimisations if your hashCode function was not very good.

The tree fallback is designed to protect against bad hashCode implementations and also provides basic protection against collision attacks, where a bad actor may attempt to slow down a system by deliberately selecting inputs which occupy the same buckets.
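As an illustration of how easy such inputs are to produce, the strings "Aa" and "BB" have the same `String.hashCode()`, and so does every concatenation of equal numbers of those two blocks. The sketch below (class and method names are hypothetical) builds a batch of distinct keys that all share one hash code.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

class CollisionSketch {
    // Builds 2^blocks distinct strings, each made of "Aa"/"BB" blocks,
    // which all share the same String.hashCode().
    static List<String> collidingKeys(int blocks) {
        List<String> keys = new ArrayList<>();
        keys.add("");
        for (int i = 0; i < blocks; i++) {
            List<String> next = new ArrayList<>();
            for (String k : keys) {
                next.add(k + "Aa");
                next.add(k + "BB");
            }
            keys = next;
        }
        return keys;
    }

    public static void main(String[] args) {
        List<String> keys = collidingKeys(4);
        Set<Integer> hashes = new HashSet<>();
        for (String k : keys) {
            hashes.add(k.hashCode());
        }
        // prints "keys: 16, distinct hash codes: 1"
        System.out.println("keys: " + keys.size() + ", distinct hash codes: " + hashes.size());
    }
}
```

All of these keys end up in the same bucket. Because String implements Comparable, a treeified bucket can still order them and keep lookups logarithmic rather than linear.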