How can I compare two spectrograms and score their similarity? How should I choose the overall model or approach?

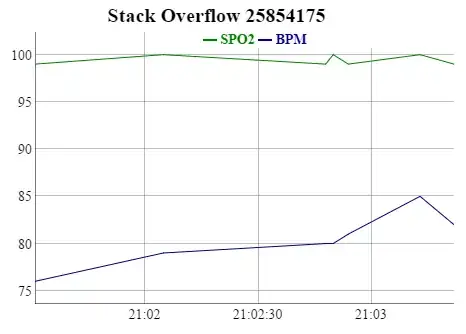

I convert phone recordings from .m4a to .wav and then plot their spectrograms in Python. All recordings have the same length, so the data can be represented in a space of the same dimensions. I filtered them with a Butterworth band-pass filter (cutoff frequencies 400 Hz and 3500 Hz).
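A minimal sketch of that filtering and plotting-data step, assuming SciPy is used. The filter order, the window settings and the synthetic test signal are illustrative assumptions, not values from my actual pipeline; a real recording could be loaded with `scipy.io.wavfile.read` instead.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt, spectrogram


def bandpass(signal, sample_rate, low_hz=400.0, high_hz=3500.0, order=4):
    """Zero-phase Butterworth band-pass filter (order is an assumed value)."""
    sos = butter(order, [low_hz, high_hz], btype="bandpass",
                 fs=sample_rate, output="sos")
    return sosfiltfilt(sos, signal)


def log_spectrogram(signal, sample_rate, nperseg=512, noverlap=384):
    """Power spectrogram in dB; window sizes are assumed values."""
    freqs, times, power = spectrogram(signal, fs=sample_rate,
                                      nperseg=nperseg, noverlap=noverlap)
    return freqs, times, 10.0 * np.log10(power + 1e-12)


# Synthetic stand-in for a recording: 100 Hz (outside the band)
# plus 1000 Hz (inside the band), one second at 16 kHz.
sample_rate = 16000
t = np.arange(sample_rate) / sample_rate
test_signal = np.sin(2 * np.pi * 100 * t) + np.sin(2 * np.pi * 1000 * t)

filtered = bandpass(test_signal, sample_rate)
freqs, times, spec_db = log_spectrogram(filtered, sample_rate)
```

`spec_db` is a 2-D array (frequency bins by time frames) that can be passed to `matplotlib.pyplot.pcolormesh(times, freqs, spec_db)` for plotting.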

To find the region of interest, I filtered by color using OpenCV. However, this makes every clip a different length, which I don't want.

One idea is to embed the spectrograms as multidimensional points and score each one by its distance to the most accurate sample; with dimensionality reduction, the result could be visualised in a cluster-like space. But that seems too plain: it doesn't involve training, which makes it hard to verify. How can I use a convolutional neural network, or a combination of a convolutional neural network and a time-delay neural network, to embed the spectrograms as multidimensional points and compare the network outputs instead?
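To make the "plain" baseline concrete, here is a rough sketch of what I mean, assuming equal-shaped spectrograms, PCA (via SVD) as the dimensionality reduction, and Euclidean distance to a reference sample as the score. The function names, the `1 / (1 + d)` scoring formula and the random stand-in data are all hypothetical choices for illustration.

```python
import numpy as np


def pca_embed(spectrograms, n_components=2):
    """Flatten equal-shaped spectrograms and project them with PCA."""
    X = np.stack([s.ravel() for s in spectrograms]).astype(np.float64)
    X -= X.mean(axis=0)                       # centre each feature
    U, S, _ = np.linalg.svd(X, full_matrices=False)
    return U[:, :n_components] * S[:n_components]


def distance_scores(embeddings, reference_index=0):
    """Map distance to the reference sample into a score in (0, 1]."""
    reference = embeddings[reference_index]
    distances = np.linalg.norm(embeddings - reference, axis=1)
    return 1.0 / (1.0 + distances)


# Random stand-ins for real spectrograms (64 frequency bins x 100 frames).
rng = np.random.default_rng(0)
reference = rng.random((64, 100))
close = reference + 0.01 * rng.standard_normal((64, 100))  # near-duplicate
far = rng.random((64, 100))                                # unrelated

embeddings = pca_embed([reference, close, far])
scores = distance_scores(embeddings, reference_index=0)
```

The 2-D `embeddings` can be scatter-plotted directly; the near-duplicate should land next to the reference and score higher than the unrelated sample.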

I then switched to the Mel spectrogram.

How can I use a pre-trained convolutional neural network such as VGG16 to embed spectrograms as tensors and compare them? Is it enough to remove the last fully connected layer and flatten the output instead?
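This is roughly what I have in mind, sketched with `tf.keras.applications.VGG16`. It is only one possible variant: it drops the whole fully connected head (`include_top=False`) and uses global average pooling rather than flattening, which gives a 512-dimensional vector. The helper names, the min-max scaling to 0–255 and the choice of cosine similarity are my own assumptions.

```python
import numpy as np
import tensorflow as tf


def build_vgg16_embedder(weights="imagenet", input_size=224):
    """VGG16 convolutional base with global average pooling (512-d output)."""
    return tf.keras.applications.VGG16(
        include_top=False, weights=weights,
        input_shape=(input_size, input_size, 3), pooling="avg")


def spectrogram_to_vgg_input(spec, input_size=224):
    """Scale a 2-D spectrogram to 0-255, resize it, and copy it to 3 channels."""
    spec = np.asarray(spec, dtype=np.float32)
    span = float(spec.max() - spec.min())
    scaled = (spec - spec.min()) / (span if span > 0 else 1.0) * 255.0
    image = tf.image.resize(scaled[..., np.newaxis], (input_size, input_size))
    batch = tf.repeat(image, 3, axis=-1)[tf.newaxis, ...].numpy()
    return tf.keras.applications.vgg16.preprocess_input(batch)


def embed(model, spec):
    """Return the embedding vector of one spectrogram."""
    return model.predict(spectrogram_to_vgg_input(spec), verbose=0)[0]


def cosine_similarity(a, b):
    """Cosine similarity between two embedding vectors."""
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float(np.dot(a, b) / denom) if denom > 0 else 0.0
```

Usage would be `model = build_vgg16_embedder()` followed by `cosine_similarity(embed(model, spec_a), embed(model, spec_b))`. Note that the ImageNet weights were trained on natural photographs, so whether these embeddings are meaningful for spectrograms is exactly what I am unsure about.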