I am looking for the most memory-efficient way to concatenate an int32 column and a datetime64 column into a third column. I have a DataFrame with two columns, one int32 and one datetime64, and I want to create a third column that combines the two.

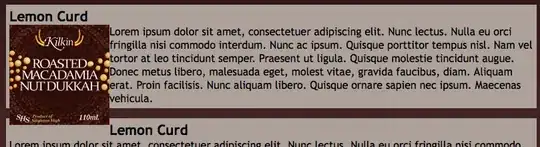

The dataframe looks like this:

What I want is:

I have created a test data frame as follows:

```python
import pandas as pd
import numpy as np
import sys
import datetime as dt

%load_ext memory_profiler

np.random.seed(42)

df_rows = 10**6
todays_date = dt.datetime.now().date()

# Two years of daily dates ending today
dt_array = pd.date_range(todays_date - dt.timedelta(2*365), periods=2*365, freq='D')

# Pool of 100,000 six-digit customer ids
cust_id_array = np.random.randint(100000, 999999, size=(100000, 1))

df = pd.DataFrame({
    'cust_id': np.random.choice(cust_id_array.flatten(), df_rows, replace=True),
    'tran_dt': np.random.choice(dt_array, df_rows, replace=True),
})

df.info()
```

The dataframe statistics as-is before concatenation are:

I have tried `map`, `astype`, and `apply` to concatenate the columns, but the memory usage is still quite high:

```python
%memit -r 1 df['comb_key'] = df["cust_id"].map(str) + '----' + df["tran_dt"].map(str)

%memit -r 1 df['comb_key'] = df["cust_id"].astype(str) + '----' + df["tran_dt"].astype(str)

%memit -r 1 df['comb_key'] = df.apply(lambda x: str(str(x['cust_id']) + '----' + dt.datetime.strftime(x['tran_dt'], '%Y-%m-%d')), axis=1)
```

The memory usage for the three approaches is:
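Besides the peak figures that `%memit` reports, the resident size of each column can be checked with `memory_usage(deep=True)`. This is only a minimal sketch: `small_df`, `n_rows`, and the fixed start date are hypothetical stand-ins for the test frame above, shrunk so it runs quickly, and the key is built with `.dt.strftime` rather than any of the three calls timed above.

```python
import numpy as np
import pandas as pd

# Hypothetical small stand-in for the 10**6-row test frame.
rng = np.random.default_rng(42)
n_rows = 1_000
dates = pd.date_range("2020-01-01", periods=2 * 365, freq="D")

small_df = pd.DataFrame({
    "cust_id": rng.integers(100000, 999999, size=n_rows).astype("int32"),
    "tran_dt": dates[rng.integers(0, len(dates), size=n_rows)],
})

# Build the combined key as text: "<cust_id>----<YYYY-MM-DD>".
small_df["comb_key"] = (
    small_df["cust_id"].astype(str)
    + "----"
    + small_df["tran_dt"].dt.strftime("%Y-%m-%d")
)

# deep=True includes the string data itself, not only the references to it.
per_column_bytes = small_df.memory_usage(deep=True, index=False)
print(per_column_bytes)
```

The int32 and datetime64 columns take a fixed 4 and 8 bytes per row, so any growth after concatenation comes from the string column.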

Is there a more memory-efficient way of doing this? My real-life data sets are about 1.8 GB uncompressed, on a machine with 16 GB of RAM.