Are there any persistent data structures implementations in c++ similar to those in clojure?

-

1C++ is not garbage collected, which makes building such structures exceedingly complicated. If you don't care about memory leaks (or have integrated a garbage collector), then it's easy. – Greg Hewgill Dec 09 '10 at 02:53

-

2Wow, cool down, brother. Looking up "Clojure" is not that difficult; and I generally don't boast of not having heard of a language that's at the forefront of functional programming. I'm not a big fan of it, but I find it odd that a question should be disparaged for mentioning it. (Though I heartily agree with your suggestion to reevaluate needs, rather than shoehorn another language's style into C++). – Jonathan Sterling Dec 09 '10 at 04:37

-

related: http://stackoverflow.com/questions/2757278/functional-data-structures-in-c , http://stackoverflow.com/questions/303426/efficient-persistent-data-structures-for-relational-database – amit kumar Feb 27 '11 at 16:54

-

1Persistent data structures can't create cycles. E.g. if you create `A`, then you can create `B` pointing to `A`, but you'll need to modify `A` to create a cycle. Persistent data structures are immutable, so a simple ref. counting should be sufficient to implement them in C++. – ivant Sep 08 '13 at 21:09

3 Answers

I rolled my own but there's the immer library as a fairly comprehensive example and it is specifically inspired by clojure. I got all excited and rolled my own a few years back after listening to a speech by John Carmack where he was jumping all over the functional programming bandwagon. He seemed to be able to imagine a game engine revolving around immutable data structures. While he didn't get into specifics and while it just seemed like a hazy idea in his head, the fact that he was seriously considering it and didn't seem to think the overhead would cut steeply into frame rates was enough to get me excited about exploring that idea.

I actually use it as somewhat of an optimization detail which might seem paradoxical (there is overhead to immutability), but I mean in a specific context. If I absolutely want to do this:

// We only need to change a small part of this huge data structure.

HugeDataStructure transform(HugeDataStructure input);

... and I absolutely don't want the function to cause side effects so that it can be thread-safe and never prone to misuse, then I have no choice but to copy the huge data structure (which might span a gigabyte).

And there I find having a small library of immutable data structures immensely helpful in such a context, since it makes the above scenario relatively cheap by just shallow copying and referencing unchanged parts. That said, I mostly just use one immutable data structure which is basically a random-access sequence, like so:

And as others mentioned, it did require some care and tuning and comprehensive testing and many VTune sessions to make it thread-safe and efficient, but after I put in the elbow grease, it definitely made things a whole, whole lot easier.

On top of automatic thread safety whenever we use these structures to write functions to be free of side effects, you also get things like non-destructive editing, trivial undo systems, trivial exception-safety (no need to roll back side effects through scope guards in a function which causes none in exceptional paths), and letting users copy and paste data and instance it without taking much memory until/unless they modify what they paste as a bonus. I actually found these bonuses to be even more useful on a daily basis than the thread safety.

I use 'transients' (aka 'builders') as a way to express changes to the data structure, like so:

Immutable transform(Immutable input)

{

Transient transient(input);

// make changes to mutable transient.

...

// Commit the changes to get a new immutable

// (this does not touch the input).

return transient.commit();

}

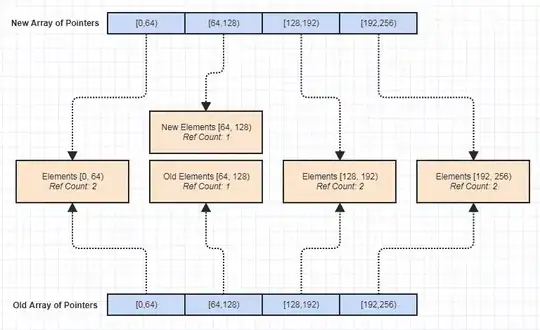

I even have an immutable image library which I use for image editing to trivialize non-destructing editing. It uses a similar strategy to the above structure by treating images as tiles like so:

When a transient is modified and we get a new immutable, only the changed parts are made unique. The rest of the tiles are shallow copied (just 32-bit indices):

I do use these in fairly performance-critical areas like mesh and video processing. There was a bit of fine-tuning as to how much data each block should store (too much and we waste processing and memory deep copying too much data, too little and we waste processing and memory shallow copying too many pointers with more frequent thread locks).

I don't use these for raytracing since that's one of the most extreme performance-critical areas imaginable and the tiniest bit of overhead is noticeable to users (they actually benchmark and notice performance differences in the range of 2%), but most of the time, they're efficient enough, and it's a pretty awesome benefit when you can copy these huge data structures around as a whole left and right to simplify thread safety, undo systems, non-destructive editing, etc, without worrying about explosive memory usage and noticeable delays spent deep copying everything.

The main difficulty to get a persistent data-structure is indeed the lack of garbage collection.

If you don't have a proper garbage collection scheme, then you can get a poor one (namely reference counting), but this mean that you need to take extra care NOT to create cyclic references.

It changes the very core of the structure. For example, think binary tree. If you create a new version of a node, then you need a new version of its parent to access it (etc...). Now, if the relation is two-way (child <-> parent) then you will in fact duplicate the whole structure. This means that you will either have a parent -> child relation, or the opposite (less common).

I can think of implementing a binary tree, or a B-Tree. I hardly see how to get a proper array for example.

On the other hand, I agree it would be great to have efficient ones in multi-threaded environments.

- 287,565

- 48

- 449

- 722

-

1Cyclic references are not a problem within the data structure (the binary tree, in your example). In a persistent collection, nodes CANNOT have a reference to their parent; if they did, it would mean that the entire tree would be invalidated on every write. – Joshua Warner Oct 15 '13 at 17:08

-

@JoshuaWarner: Agreed, I talk about in the very next paragraph but did not take it to its evident conclusion: no cycle is possible (if well built) and without cycles a `shared_ptr` scheme is completely sufficient and there is no need for a full-blown garbage collector. – Matthieu M. Oct 15 '13 at 18:17

If I understand the question correctly, what you seek is the ability to duplicate an object without actually paying for the duplication when it is done, only when it is needed. Changes to either object can be done without damaging the other one. This is known as "copy on write".

If this is what you are looking for, this can fairly easily be implemented in C++ using shared pointers (see shared_ptr from Boost, as one implementation). Initially the copy would share everything with the source, but once changes are made the relevant parts of the object shared pointers are replaced by other shared pointers pointing to newly created, deep-copied objects. (I realize that this explanation is vague - if this is indeed what you mean, the answer can be elaborated).

- 6,845

- 2

- 24

- 16

-

Copy-on-write are functionally what @Miguel is looking for - but it leads to collections that perform poorly on repeated writes. – Joshua Warner Oct 15 '13 at 17:07

-

It is the "deep copy" in copy-on-write that makes it unsuitable for using in functional programming. Data structures in functional programming are persistent data structures. Since they are immutable, if you want to "modify" it, a new "copy" (new version) is made, and the old version is still available. It will be a waste of memory for making whole copies when only part of the structure is different (eg. the "head" of a list). Instead, the two versions share the common part (eg. the "tail" of the list). – Siu Ching Pong -Asuka Kenji- Mar 04 '14 at 23:27