Purely functional data structures have the following advantages:

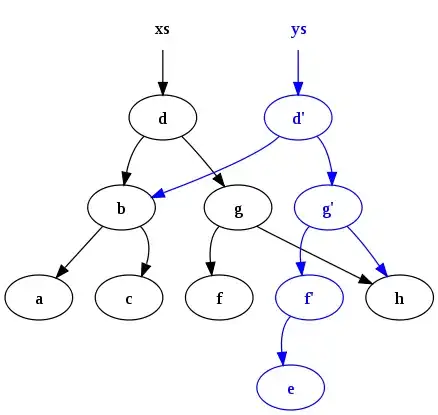

Persistence: old versions can be reused safe in the knowledge that they cannot have been changed.

Sharing: many versions of a data structure can be kept simultaneously with only modest memory requirements.

Thread safety: any mutation is hidden inside the lazy thunks (if any) and, therefore, handled by the language implementation.

Simplicity: not having to keep track of state changes makes purely functional data structures simpler to use, particularly in the context of concurrency.

Incrementality: purely functional data structures are composed of many tiny parts, making them ideal for incremental garbage collection leading to lower latencies.

Note that I have not listed parallelism as an advantage of purely functional data structures because I do not believe this to be the case. Efficient multicore parallelism requires predictable locality in order to leverage caches and avoid getting bottlenecked on shared access to main memory and purely functional data structures have, at best, unknown characteristics in this regard. Consequently, many programs that use purely functional data structures do not scale well when parallelized on a multicore because they spend all of their time in cache misses, contending for shared memory pathways.

What I mean by purely functional data structure is not the same as persistent data structure.

There is some confusion here. In the context of purely functional data structures, persistence is a term used to refer to the ability to refer back to previous versions of a data structure safe in the knowledge that they are still valid. This is a natural result of being purely functional and, therefore, persistence is an inherent characteristic of all purely functional data structures.