I use Spark 2.1.

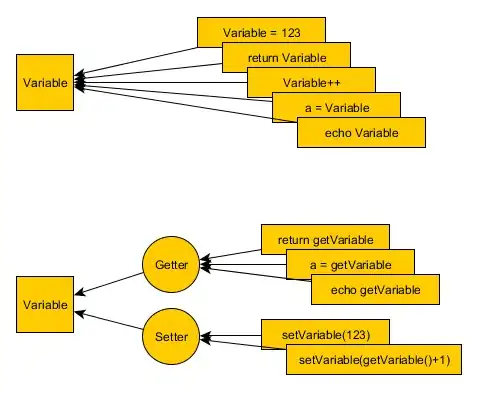

The input CSV file contains Unicode characters, as shown in the screenshot.

When I parse this CSV file and write it back out, the output looks like this:

I use MS Excel 2010 to view both files.

The Java code I use is:

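```java
import java.io.IOException;

import org.apache.spark.sql.SaveMode;
import org.junit.Test;

@Test
public void TestCSV() throws IOException {
    String inputPath = "/user/jpattnaik/1945/unicode.csv";
    String outputPath = "file:\\C:\\Users\\jpattnaik\\ubuntu-bkp\\backup\\bug-fixing\\1945\\output-csv";

    // Read the CSV with a header row, inferring column types, decoding as UTF-8
    getSparkSession()
            .read()
            .option("inferSchema", "true")
            .option("header", "true")
            .option("encoding", "UTF-8")
            .csv(inputPath)
            // Write it back out as CSV with a header row, replacing any previous output
            .write()
            .option("header", "true")
            .option("encoding", "UTF-8")
            .mode(SaveMode.Overwrite)
            .csv(outputPath);
}
```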

How can I get output that looks the same as the input?
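One place worth checking is the viewer rather than Spark: Spark writes its CSV output as UTF-8 without a byte order mark (BOM), and Excel 2010 usually decodes a BOM-less CSV opened by double-click with the system's ANSI code page, which garbles non-ASCII characters. The sketch below is a hypothetical JDK-only helper (`Utf8Check`, with made-up method names `isValidUtf8`, `hasUtf8Bom` and `withUtf8Bom`) that checks whether one written `part-*.csv` file is valid UTF-8 and starts with a BOM, and can optionally write a copy with a BOM prepended. It assumes the part file is on the local file system.

```java
import java.io.IOException;
import java.nio.ByteBuffer;
import java.nio.charset.CharacterCodingException;
import java.nio.charset.CodingErrorAction;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

// Hypothetical helper for inspecting the encoding of a CSV part file.
final class Utf8Check {
    // The UTF-8 byte order mark is the three bytes EF BB BF.
    private static final byte[] BOM = {(byte) 0xEF, (byte) 0xBB, (byte) 0xBF};

    static boolean hasUtf8Bom(byte[] bytes) {
        return bytes.length >= 3
                && bytes[0] == BOM[0] && bytes[1] == BOM[1] && bytes[2] == BOM[2];
    }

    // Strict decode: malformed input throws instead of being replaced with U+FFFD.
    static boolean isValidUtf8(byte[] bytes) {
        try {
            StandardCharsets.UTF_8.newDecoder()
                    .onMalformedInput(CodingErrorAction.REPORT)
                    .onUnmappableCharacter(CodingErrorAction.REPORT)
                    .decode(ByteBuffer.wrap(bytes));
            return true;
        } catch (CharacterCodingException e) {
            return false;
        }
    }

    // Returns the bytes with a BOM prepended, or the input unchanged if it already has one.
    static byte[] withUtf8Bom(byte[] bytes) {
        if (hasUtf8Bom(bytes)) {
            return bytes;
        }
        byte[] out = new byte[BOM.length + bytes.length];
        System.arraycopy(BOM, 0, out, 0, BOM.length);
        System.arraycopy(bytes, 0, out, BOM.length, bytes.length);
        return out;
    }

    public static void main(String[] args) throws IOException {
        if (args.length < 1 || args.length > 2) {
            System.err.println("usage: Utf8Check <part-file> [<copy-with-bom>]");
            return;
        }
        byte[] bytes = Files.readAllBytes(Paths.get(args[0]));
        System.out.println("valid UTF-8: " + isValidUtf8(bytes));
        System.out.println("has BOM:     " + hasUtf8Bom(bytes));
        if (args.length == 2) {
            // Optionally write a BOM-prefixed copy; the original file is not modified.
            Path copy = Paths.get(args[1]);
            Files.write(copy, withUtf8Bom(bytes));
        }
    }
}
```

If the part file reports `valid UTF-8: true` and `has BOM: false`, the data itself is likely intact and only Excel's guess about the encoding is wrong. In that case, opening the BOM-prefixed copy, or importing the original through Excel's text import wizard with file origin "65001 : Unicode (UTF-8)", should typically show the characters correctly.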