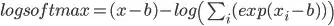

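I know how to make softmax numerically stable: subtract max_i x_i from every element before exponentiating. This avoids overflow, and the denominator cannot underflow to zero because the largest term becomes exp(0) = 1. However, taking the log of the result can still fail: individual entries of softmax(x) can underflow to zero, so log softmax(x) evaluates to log(0) = -infinity.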
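To make the failure concrete, here is a minimal sketch of what I mean, assuming NumPy and float64 inputs. The function name `stable_softmax` and the input values are just illustrative choices.

```python
import numpy as np

def stable_softmax(x):
    # Subtract the max so the largest exponent is exp(0) = 1 (no overflow).
    shifted = x - np.max(x)
    exps = np.exp(shifted)
    return exps / np.sum(exps)

x = np.array([0.0, 1000.0])
probs = stable_softmax(x)  # exp(-1000) underflows to 0.0 in float64

# Taking the log afterwards turns the underflowed 0.0 into -inf.
with np.errstate(divide="ignore"):
    naive_log_probs = np.log(probs)

print(probs)
print(naive_log_probs)
```

The softmax itself is fine here, but the first entry has already been rounded to zero before the log is applied, so the information is lost.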

I am not sure how to fix it. I know this is a common problem, and I have read several answers about it, but I did not understand them and I am still confused about how to solve it.

PS: If you provide a simple example, it would be awesome.

Writing m = max_j x_j, log softmax(x)_i = (x_i - m) - log sum_j exp(x_j - m); this new equation is stable against both overflow and underflow.
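A minimal sketch of this fix, assuming NumPy and float64 inputs: rather than computing softmax and then taking its log, compute the log directly in the log-sum-exp form, (x_i - m) - log sum_j exp(x_j - m) with m = max_j x_j. The function name `log_softmax` and the input values are illustrative, not taken from any particular library.

```python
import numpy as np

def log_softmax(x):
    # Shift by the max: every exponent is <= 0, so exp cannot overflow.
    m = np.max(x)
    shifted = x - m
    # The sum includes exp(0) = 1, so it is >= 1 and its log is finite.
    log_sum = np.log(np.sum(np.exp(shifted)))
    # No log is ever taken of a single underflowed probability.
    return shifted - log_sum

x = np.array([0.0, 1000.0])
log_probs = log_softmax(x)
print(log_probs)
```

For this input the result is finite in both entries, whereas taking the log of a previously computed softmax would give -infinity for the first one. The small terms may still underflow inside the sum, but that only drops negligible contributions to a sum that is at least 1.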
