definition

factorize: Map each unique object into a unique integer. Typically, the range of integers mapped to is from zero to the n - 1 where n is the number of unique objects. Two variations are typical as well. Type 1 is where the numbering occurs in the order in which unique objects are identified. Type 2 is where unique objects are first sorted and then the same process as in Type 1 is applied.

The Setup

Consider the list of tuples tups

tups = [(1, 2), ('a', 'b'), (3, 4), ('c', 5), (6, 'd'), ('a', 'b'), (3, 4)]

I want to factorize this into

[0, 1, 2, 3, 4, 1, 2]

I know there are many ways to do this. However, I want to do this as efficiently as possible.

What I've tried

pandas.factorize and get an error...

pd.factorize(tups)[0]

---------------------------------------------------------------------------

ValueError Traceback (most recent call last)

<ipython-input-84-c84947ac948c> in <module>()

----> 1 pd.factorize(tups)[0]

//anaconda/envs/3.6/lib/python3.6/site-packages/pandas/core/algorithms.py in factorize(values, sort, order, na_sentinel, size_hint)

553 uniques = vec_klass()

554 check_nulls = not is_integer_dtype(original)

--> 555 labels = table.get_labels(values, uniques, 0, na_sentinel, check_nulls)

556

557 labels = _ensure_platform_int(labels)

pandas/_libs/hashtable_class_helper.pxi in pandas._libs.hashtable.PyObjectHashTable.get_labels (pandas/_libs/hashtable.c:21804)()

ValueError: Buffer has wrong number of dimensions (expected 1, got 2)

Or numpy.unique and get incorrect result...

np.unique(tups, return_inverse=1)[1]

array([0, 1, 6, 7, 2, 3, 8, 4, 5, 9, 6, 7, 2, 3])

I could use either of these on the hashes of the tuples

pd.factorize([hash(t) for t in tups])[0]

array([0, 1, 2, 3, 4, 1, 2])

Yay! That's what I wanted... so what's the problem?

First Problem

Look at the performance drop from this technique

lst = [10, 7, 4, 33, 1005, 7, 4]

%timeit pd.factorize(lst * 1000)[0]

1000 loops, best of 3: 506 µs per loop

%timeit pd.factorize([hash(i) for i in lst * 1000])[0]

1000 loops, best of 3: 937 µs per loop

Second Problem

Hashing is not guaranteed unique!

Question

What is a super fast way to factorize a list of tuples?

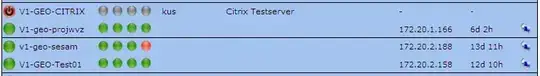

Timing

both axes are in log space

code

from itertools import count

def champ(tups):

d = {}

c = count()

return np.array(

[d[tup] if tup in d else d.setdefault(tup, next(c)) for tup in tups]

)

def root(tups):

return pd.Series(tups).factorize()[0]

def iobe(tups):

return np.unique(tups, return_inverse=True, axis=0)[1]

def get_row_view(a):

void_dt = np.dtype((np.void, a.dtype.itemsize * np.prod(a.shape[1:])))

a = np.ascontiguousarray(a)

return a.reshape(a.shape[0], -1).view(void_dt).ravel()

def diva(tups):

return np.unique(get_row_view(np.array(tups)), return_inverse=1)[1]

def gdib(tups):

return pd.factorize([str(t) for t in tups])[0]

from string import ascii_letters

def tups_creator_1(size, len_of_str=3, num_ints_to_choose_from=1000, seed=None):

c = len_of_str

n = num_ints_to_choose_from

np.random.seed(seed)

d = pd.DataFrame(np.random.choice(list(ascii_letters), (size, c))).sum(1).tolist()

i = np.random.randint(n, size=size)

return list(zip(d, i))

results = pd.DataFrame(

index=pd.Index([100, 1000, 5000, 10000, 20000, 30000, 40000, 50000], name='Size'),

columns=pd.Index('champ root iobe diva gdib'.split(), name='Method')

)

for i in results.index:

tups = tups_creator_1(i, max(1, int(np.log10(i))), max(10, i // 10))

for j in results.columns:

stmt = '{}(tups)'.format(j)

setup = 'from __main__ import {}, tups'.format(j)

results.set_value(i, j, timeit(stmt, setup, number=100) / 100)

results.plot(title='Avg Seconds', logx=True, logy=True)