Your memory is released, but that is not easy to see. Apart from Windbg with SOS, there is a lack of tools that show the currently allocated memory minus dead objects. In Windbg you can use the !DumpHeap -live option to display only live objects.

I tried the fiddle from AndyJ: https://dotnetfiddle.net/wOtjw1

First, I created a memory dump of the DataTable version to have a stable baseline. MemAnalyzer (https://github.com/Alois-xx/MemAnalyzer) is the right tool for that:

MemAnalyzer.exe -procdump -ma DataTableMemoryLeak.exe DataTable.dmp

This requires procdump from Sysinternals to be on your PATH.
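MemAnalyzer relies on procdump here, and on vmmap when -vmmap is used later on. A quick pre-flight check can save you from a confusing failure. The Python sketch below uses `shutil.which`. `missing_tools` is a hypothetical helper. The executable names are assumed to be the standard Sysinternals `procdump.exe` and `vmmap.exe`.

```python
import shutil

# Hypothetical pre-flight check: which Sysinternals tools are missing from PATH?
# Assumes the standard executable names procdump(.exe) and vmmap(.exe).


def missing_tools(names=("procdump", "vmmap")):
    """Return the tools from `names` that shutil.which cannot find on PATH.

    On Windows, shutil.which also tries the extensions listed in PATHEXT,
    so "procdump" matches procdump.exe.
    """
    return [name for name in names if shutil.which(name) is None]


if __name__ == "__main__":
    missing = missing_tools()
    if missing:
        print("Not on PATH:", ", ".join(missing))
    else:
        print("procdump and vmmap found")
```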

Now you can run the program with the queue implementation and compare the allocation metrics on the managed heap:

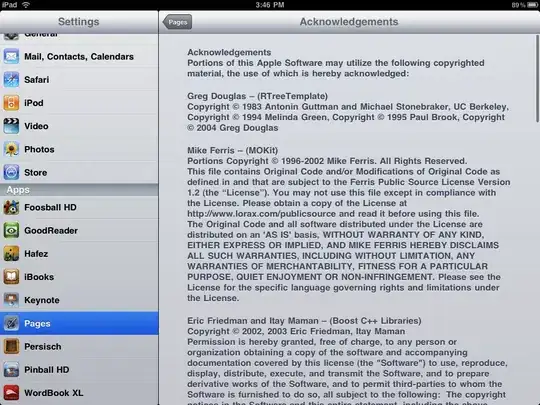

C>MemAnalyzer.exe -f DataTable.dmp -pid2 20792 -dtn 3

| Delta(Bytes) | Delta(Instances) | Instances | Instances2 | Allocated(Bytes) | Allocated2(Bytes) | AvgSize(Bytes) | AvgSize2(Bytes) | Type |
|---|---|---|---|---|---|---|---|---|
| -176,624 | -10,008 | 10,014 | 6 | 194,232 | 17,608 | 19 | 2934 | `System.Object[]` |
| -680,000 | -10,000 | 10,000 | 0 | 680,000 | 0 | 68 | | `System.Data.DataRow` |
| -7,514 | -88 | 20,273 | 20,185 | 749,040 | 741,526 | 36 | 36 | `System.String` |
| -918,294 | -20,392 | 60,734 | 40,342 | 1,932,650 | 1,014,356 | | | Managed Heap(Allocated)! |
| -917,472 | 0 | 0 | 0 | 1,954,980 | 1,037,508 | | | Managed Heap(TotalSize) |
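The derived columns can be recomputed from the raw counts, which is a handy sanity check when reading such a diff. The deltas are "second process minus dump". The average sizes in the table are consistent with integer division of allocated bytes by instance count. The Python sketch below copies its row values from the table. `diff_row` is a hypothetical helper, not part of MemAnalyzer.

```python
# Sanity check of the derived columns in a MemAnalyzer diff.
# Row values are copied from the table above (run without -live).
# diff_row is a hypothetical helper, not part of MemAnalyzer.

ROWS = [
    # (type, instances, instances2, allocated, allocated2)
    ("System.Object[]", 10_014, 6, 194_232, 17_608),
    ("System.Data.DataRow", 10_000, 0, 680_000, 0),
    ("System.String", 20_273, 20_185, 749_040, 741_526),
]


def diff_row(instances, instances2, allocated, allocated2):
    """Return (delta_bytes, delta_instances, avg_size, avg_size2).

    Deltas are 'second process minus dump'; average sizes use integer
    division and are None when a side has no instances.
    """
    delta_bytes = allocated2 - allocated
    delta_instances = instances2 - instances
    avg = allocated // instances if instances else None
    avg2 = allocated2 // instances2 if instances2 else None
    return delta_bytes, delta_instances, avg, avg2


if __name__ == "__main__":
    for name, *counts in ROWS:
        print(name, diff_row(*counts))
```

The same arithmetic explains the summary row: 1,014,356 - 1,932,650 = -918,294 bytes of allocated managed heap.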

This shows that the DataTable approach uses about 917 KB more memory and that 10K DataRow instances are still floating around on the managed heap. But are these numbers correct?

No.

Most of these objects are already dead, but because no full GC happened before the memory dump was taken, they are still reported as alive. The fix is to tell MemAnalyzer to consider only rooted (live) objects, just as Windbg does with the -live option:

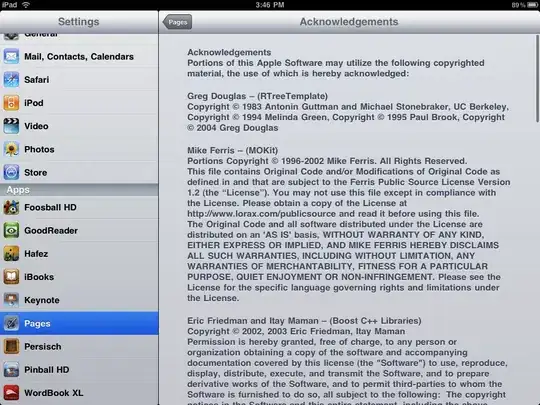

C>MemAnalyzer.exe -f DataTable.dmp -pid2 20792 -dts 5 -live

| Delta(Bytes) | Delta(Instances) | Instances | Instances2 | Allocated(Bytes) | Allocated2(Bytes) | AvgSize(Bytes) | AvgSize2(Bytes) | Type |
|---|---|---|---|---|---|---|---|---|
| -68,000 | -1,000 | 1,000 | 0 | 68,000 | 0 | 68 | | `System.Data.DataRow` |
| -36,960 | -8 | 8 | 0 | 36,960 | 0 | 4620 | | `System.Data.RBTree+Node<System.Data.DataRow>[]` |
| -16,564 | -5 | 10 | 5 | 34,140 | 17,576 | 3414 | 3515 | `System.Object[]` |
| -4,120 | -2 | 2 | 0 | 4,120 | 0 | 2060 | | `System.Data.DataRow[]` |
| -4,104 | -1 | 19 | 18 | 4,716 | 612 | 248 | 34 | `System.String[]` |
| -141,056 | -1,285 | 1,576 | 291 | 169,898 | 28,842 | | | Managed Heap(Allocated)! |
| -917,472 | 0 | 0 | 0 | 1,954,980 | 1,037,508 | | | Managed Heap(TotalSize) |

The DataTable approach still needs 141,056 bytes more memory because of the extra DataRow, object[] and System.Data.RBTree+Node[] instances. Measuring only the working set is not enough because the managed heap is deallocated lazily: the GC can keep large amounts of memory if it expects the next memory spike to come soon. Committed memory is therefore a nearly meaningless metric, unless your (very low-hanging) goal is only to fix memory leaks that are gigabytes in size.
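Conceptually, -live (like Windbg's !DumpHeap -live) separates objects that are still reachable from a GC root from objects that are merely waiting for the next collection. The Python sketch below illustrates that idea with a breadth-first reachability walk over a made-up object graph. It is not MemAnalyzer's implementation. The root list and the heap dictionary are hypothetical stand-ins for real stack slots, statics and GC handles.

```python
from collections import deque

# Illustration of "live" vs "dead" objects via reachability from roots.
# The object graph is hypothetical; a real runtime walks stacks, statics
# and handles instead of a dictionary.


def live_objects(roots, references):
    """Return the set of object ids reachable from `roots`.

    references maps an object id to the ids it points to. Anything on the
    heap that is not in the result is dead: a full GC would reclaim it, but
    until then a plain heap walk still reports it.
    """
    live = set()
    queue = deque(roots)
    while queue:
        obj = queue.popleft()
        if obj in live:
            continue  # already visited (also protects against cycles)
        live.add(obj)
        queue.extend(references.get(obj, ()))
    return live


if __name__ == "__main__":
    # Hypothetical heap: row1 was removed from the table but not yet collected.
    heap = {
        "table": ["row0"],
        "row0": ["values0"],
        "values0": [],
        "row1": ["values1"],  # no rooted object references it any more
        "values1": [],
    }
    live = live_objects(["table"], heap)
    print("live:", sorted(live))             # ['row0', 'table', 'values0']
    print("dead:", sorted(set(heap) - live))  # ['row1', 'values1']
```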

The correct way to measure memory is to take the sum of:

- Unmanaged Heap

- Allocated Managed Heap

- Memory Mapped Files

- Page File backed Memory Mapped File (Shareable Memory)

- Private Bytes

This is exactly what MemAnalyzer does with the -vmmap switch, which expects vmmap from Sysinternals to be on your PATH.

MemAnalyzer -pid ddd -vmmap

This way you can also track unmanaged memory leaks or file mapping leaks. The return value of MemAnalyzer is the total allocated memory in KB:

- If -vmmap is used, it reports the sum of the categories listed above.

- If vmmap is not present, it reports only the allocated managed heap.

- If -live is added, only rooted managed objects are counted.
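MemAnalyzer reports the total as its return value, so a script can treat it as a single number, for example to compare two runs in a test. The Python sketch below assumes MemAnalyzer.exe is on the PATH. It also assumes that -live can be combined with -pid the same way it is combined with -f above, which you should check against the tool's help. `build_command` and `measure_kb` are hypothetical helper names.

```python
import subprocess

# Hypothetical wrapper that uses MemAnalyzer's exit code as a KB total.
# Assumes MemAnalyzer.exe is on PATH; combining -pid with -live is an
# assumption, and how errors are signalled via the exit code is not covered.


def build_command(pid, vmmap=True, live=False, exe="MemAnalyzer.exe"):
    """Assemble a MemAnalyzer command line from the switches described above."""
    cmd = [exe, "-pid", str(pid)]
    if vmmap:
        cmd.append("-vmmap")  # sum of managed, unmanaged and mapped memory
    if live:
        cmd.append("-live")   # count only rooted managed objects
    return cmd


def measure_kb(pid, vmmap=True, live=False, runner=subprocess.run):
    """Run MemAnalyzer and return its exit code (total allocated memory in KB).

    `runner` defaults to subprocess.run and can be swapped out for testing.
    """
    result = runner(build_command(pid, vmmap, live), capture_output=True)
    return result.returncode


if __name__ == "__main__":
    import sys
    print(measure_kb(int(sys.argv[1])), "KB")
```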

I wrote the tool because, to my knowledge, there are no tools out there that make it easy to look at memory leaks holistically. I always want to know whether I leak memory, regardless of whether it is managed, unmanaged or something else.

By writing the diff output to a CSV file, you can easily create pivot diff charts like the one above:

MemAnalyzer.exe -f DataTable.dmp -pid2 20792 -live -o ExcelDiff.csv
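Before building the chart, it can help to rank the types by their byte delta. The Python sketch below assumes the CSV written by -o uses the same column headers as the console output (Delta(Bytes), Type). That layout is an assumption, so adjust `delta_col`, `type_col` and `delimiter` to match the actual file. `top_deltas` is a hypothetical helper.

```python
import csv
import io

# Hypothetical ranking of a MemAnalyzer diff CSV by absolute byte delta.
# Column names and delimiter are assumptions based on the console output.


def top_deltas(csv_text, n=5, delta_col="Delta(Bytes)", type_col="Type",
               delimiter=","):
    """Return up to n (type, delta_bytes) pairs, largest absolute delta first.

    Thousands separators inside (quoted) numbers are stripped before parsing.
    """
    rows = []
    for row in csv.DictReader(io.StringIO(csv_text), delimiter=delimiter):
        raw = row[delta_col].replace(",", "").strip()
        rows.append((row[type_col], int(raw)))
    rows.sort(key=lambda item: abs(item[1]), reverse=True)
    return rows[:n]


if __name__ == "__main__":
    with open("ExcelDiff.csv", newline="") as f:
        for name, delta in top_deltas(f.read()):
            print(f"{delta:>12,} {name}")
```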

That should give you some ideas on how to track allocation metrics much more accurately.