N.B.: there's a major edit at the bottom of the question; check it out.

Question

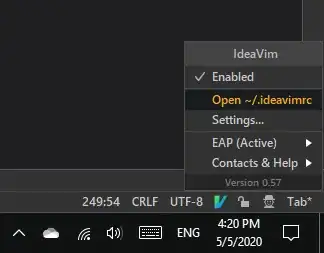

Say I have a set of points:

I want to find the point with the most points surrounding it, within radius $R$ (i.e. a circle) or within $\pm R$ (i.e. a square) of the point for 2 dimensions. I'll refer to it as the densest point function.

For the diagrams in this question, I'll represent the surrounding region as circles. In the image above, the middle point's surrounding region is shown in green. This middle point has the most surrounding points of all the points within radius $R$ and would be returned by the densest point function.

What I've tried

A viable way to solve this problem would be to use a range searching solution; this answer explains further and states that it has "$O(\log n + k)$ worst-case time". Using this, I could get the number of points surrounding each point and choose the point with the largest surrounding point count.
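As a reference point for the "count around every point, keep the maximum" idea, here is a minimal brute-force sketch. It is not a range tree: it simply compares every pair of points, so it runs in $O(n^2)$, and the function name `densest_point` and the `(x, y)` tuple representation are assumptions made for illustration.

```python
from math import hypot


def densest_point(points, radius):
    """Return (point, neighbour_count) for the point with the most other
    points within `radius` (Euclidean distance). Brute force, O(n^2)."""
    best_point, best_count = None, -1
    for i, (px, py) in enumerate(points):
        # Count every other point that lies inside the circle around (px, py).
        count = sum(
            1
            for j, (qx, qy) in enumerate(points)
            if j != i and hypot(qx - px, qy - py) <= radius
        )
        if count > best_count:
            best_point, best_count = (px, py), count
    return best_point, best_count
```

Any faster structure should return the same answer as this on small inputs, which makes it handy for testing.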

However, if the points were extremely densely packed (in the order of a million), as such:

then each of these million points ( ) would need to have a range search performed. The worst-case time

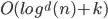

) would need to have a range search performed. The worst-case time  , where

, where  is the number of points returned in the range, is true for the following point tree types:

is the number of points returned in the range, is true for the following point tree types:

- kd-trees of two dimensions (which are actually slightly worse, at $O(\sqrt{n} + k)$),
- 2d-range trees,
- Quadtrees, which have a worst-case time of $O(n)$.

So, for a group of $n$ points within radius $R$ of all points within the group, it gives a complexity of $O(\log n + n)$ for each point. This yields over a trillion operations!
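Spelled out, and assuming $n$ is the number of points and $k$ the number of points reported per query (with $k \approx n$ when every point is within range of every other), the arithmetic looks roughly like this:

```latex
\[
\underbrace{n}_{\text{queries}} \cdot O(\log n + k)
\;=\; n \cdot O(\log n + n)
\;=\; O(n^2),
\qquad
n = 10^6 \;\Rightarrow\; n^2 = 10^{12}.
\]
```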

Any ideas on a more efficient, precise way of achieving this, so that I could find the point with the most surrounding points for a group of points in a reasonable time (preferably $O(n \log n)$ or less)?

EDIT

Turns out that the method above is correct! I just need help implementing it.

(Semi-)Solution

If I use a 2d-range tree:

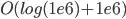

- A range reporting query costs $O(\log^d n + k)$, for $k$ returned points,
- For a range tree with fractional cascading (also known as layered range trees) the complexity is $O(\log^{d-1} n + k)$,
- For 2 dimensions, that is $O(\log n + k)$,
- Furthermore, if I perform a range counting query (i.e., I do not report each point), then it costs $O(\log n)$.

I'd perform this on every point, yielding the $O(n \log n)$ complexity I desired!
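To make the counting idea concrete, here is a rough sketch of a static 2D range tree stored as a merge-sort tree. It deliberately does not use fractional cascading, so a counting query costs $O(\log^2 n)$ rather than $O(\log n)$, and it counts inside an axis-aligned square rather than a circle. The names `RangeCountTree` and `densest_point_square` are hypothetical, chosen only for this example.

```python
from bisect import bisect_left, bisect_right
from heapq import merge


class RangeCountTree:
    """Static 2D range tree (merge-sort tree), WITHOUT fractional cascading.

    Points are sorted by x. Each segment-tree node stores the sorted y values
    of the points in its x-interval. A count visits O(log n) nodes and binary
    searches each one, giving O(log^2 n) per query.
    """

    def __init__(self, points):
        pts = sorted(points)
        self.xs = [x for x, _ in pts]
        self.size = 1
        while self.size < max(len(pts), 1):
            self.size *= 2
        self.tree = [[] for _ in range(2 * self.size)]
        for i, (_, y) in enumerate(pts):
            self.tree[self.size + i] = [y]
        # Build internal nodes bottom-up by merging the children's y lists.
        for node in range(self.size - 1, 0, -1):
            self.tree[node] = list(merge(self.tree[2 * node], self.tree[2 * node + 1]))

    def _count_ys(self, node, y_lo, y_hi):
        ys = self.tree[node]
        return bisect_right(ys, y_hi) - bisect_left(ys, y_lo)

    def count(self, x_lo, x_hi, y_lo, y_hi):
        """Number of points with x_lo <= x <= x_hi and y_lo <= y <= y_hi."""
        lo = bisect_left(self.xs, x_lo) + self.size
        hi = bisect_right(self.xs, x_hi) + self.size  # half-open [lo, hi)
        total = 0
        while lo < hi:
            if lo & 1:
                total += self._count_ys(lo, y_lo, y_hi)
                lo += 1
            if hi & 1:
                hi -= 1
                total += self._count_ys(hi, y_lo, y_hi)
            lo //= 2
            hi //= 2
        return total


def densest_point_square(points, r):
    """Point with the most other points within +/- r on both axes."""
    tree = RangeCountTree(points)
    best_point, best_count = None, -1
    for x, y in points:
        c = tree.count(x - r, x + r, y - r, y + r) - 1  # exclude the point itself
        if c > best_count:
            best_point, best_count = (x, y), c
    return best_point, best_count
```

Running `densest_point_square` over all points costs $O(n \log^2 n)$ in this form; replacing the per-node binary searches with fractional cascading is what would bring a query down to $O(\log n)$.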

Problem

However, I cannot figure out how to write the code for a counting query for a 2d layered range tree.

I've found a great resource (from page 113 onwards) about range trees, including 2d-range tree pseudocode. But I can't figure out how to introduce fractional cascading, nor how to correctly implement the counting query so that it is of $O(\log n)$ complexity.

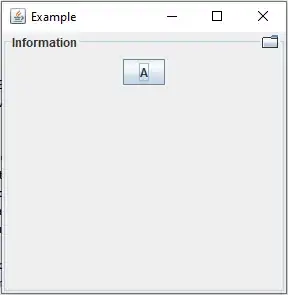

I've also found two range tree implementations here and here in Java, and one in C++ here, although I'm not sure this uses fractional cascading as it states above the countInRange method that

> It returns the number of such points in worst case O(log(n)^d) time. It can also return the points that are in the rectangle in worst case O(log(n)^d + k) time where k is the number of points that lie in the rectangle.

which suggests to me it does not apply fractional cascading.

Refined question

To answer the question above, therefore, all I need to know is whether there are any libraries with 2d-range trees with fractional cascading that have a range counting query of complexity $O(\log n)$, so I don't go reinventing any wheels. Or can you help me to write/modify the resources above to perform a query of that complexity?

Also not complaining if you can provide me with any other methods to achieve a range counting query of 2d points in $O(\log n)$ in any other way!