I have a java application that needs to load 300K record from a MySQL/Maria database in order to import them to a neo4j embedded db. To get all the required fields I need to join 4 tables. Each of them has nearly 300k records which match with 1:1 relationship with the others.

This is the code:

String query = ""

+ "SELECT "

+ " a.field1, "

+ " a.field2, "

+ " a.field3, "

+ " f.field4, "

+ " a.field5, "

+ " a.field6, "

+ " a.field7, "

+ " a.field8, "

+ " a.field9, "

+ " a.field10, "

+ " b.field11, "

+ " b.field12, "

+ " b.field13, "

+ " l.field14, "

+ " l.field15, "

+ " a.field16 "

+ "FROM table1 a "

+ "LEFT OUTER JOIN table2 f ON f.pkTable2 = a.fkTable2 "

+ "LEFT OUTER JOIN table3 b ON b.pkTable3 = a.fkTable3 "

+ "LEFT OUTER JOIN table4 l ON l.pk1Table4 = a.fk1Table4 AND l.pk2Table4 = a.fk2Table4 ";

try (

Connection connection = ds.getConnection();

PreparedStatement statement = connection.prepareStatement(query);

ResultSet rs = statement.executeQuery();

) {

Transaction tx = graphDB.beginTx(); // open neo4j transaction

int count = 0;

int count = 0;

rs.setFetchSize(10000);

while(rs.next()) {

String field1 = rs.getString("field1");

String field2 = rs.getString("field2");

String field3 = rs.getString("field3");

String field4 = rs.getString("field4");

String field5 = rs.getString("field5");

String field6 = rs.getString("field6");

String field7 = rs.getString("field7");

String field8 = rs.getString("field8");

String field9 = rs.getString("field9");

String field10 = rs.getString("field10"); // <-- error comes here

String field11 = rs.getString("field11");

String field12 = rs.getString("field12");

String field13 = rs.getString("field13");

String field14 = rs.getString("field14");

String field15 = rs.getBigDecimal("field15");

String field16 = rs.getBigDecimal("field16");

// process data - insert/update/delete in neo4j embedded DB

if("D".equals(field16)) { // record deleted in mysql db - delete from neo4j too

Map<String, Object> params = new HashMap<String, Object>();

params.put("field1", field1);

graphDB.execute(" MATCH (p:NODELABEL {field1:{field1}}) OPTIONAL MATCH (p)-[r]-() DELETE r,p", params);

} else {

Node node;

if("M".equals(field16)) { // record modified, load the existing node and edit it

node = graphDB.findNode(Labels.NODELABEL, "field1", field1);

} else { // new record, create node from scratch

node = graphDB.createNode(Labels.NODELABEL);

}

node.setProperty("field1", field1);

node.setProperty("field2", field2);

node.setProperty("field3", field3);

node.setProperty("field4", field4);

node.setProperty("field5", field5);

node.setProperty("field6", field6);

node.setProperty("field7", field7);

node.setProperty("field8", field8);

node.setProperty("field9", field9);

node.setProperty("field10", field10);

node.setProperty("field11", field11);

node.setProperty("field12", field12);

node.setProperty("field13", field13);

node.setProperty("field14", field14);

node.setProperty("field15", field15);

}

count++;

if(count % 10000 == 0) {

LOG.debug("Processed " + count + " records.");

tx.success(); // commit

tx.close(); // close neo4j transaction (should free the memory)

tx = graphDB.beginTx(); // reopen the transaction

}

}

// commit remaining records and close the last transaction

tx.success();

tx.close();

} catch (SQLException ex) {

// LOG exception

}

Everything goes fine but the import stops at 300k, waits approximately 5 seconds and throws an OutOfMemoryException:

java.lang.OutOfMemoryError: GC overhead limit exceeded

at com.mysql.cj.core.util.StringUtils.toString(StringUtils.java:1665)

at com.mysql.cj.core.io.StringValueFactory.createFromBytes(StringValueFactory.java:93)

at com.mysql.cj.core.io.StringValueFactory.createFromBytes(StringValueFactory.java:36)

at com.mysql.cj.core.io.MysqlTextValueDecoder.decodeByteArray(MysqlTextValueDecoder.java:232)

at com.mysql.cj.mysqla.result.AbstractResultsetRow.decodeAndCreateReturnValue(AbstractResultsetRow.java:124)

at com.mysql.cj.mysqla.result.AbstractResultsetRow.getValueFromBytes(AbstractResultsetRow.java:225)

at com.mysql.cj.mysqla.result.ByteArrayRow.getValue(ByteArrayRow.java:84)

at com.mysql.cj.jdbc.result.ResultSetImpl.getString(ResultSetImpl.java:880)

at com.mysql.cj.jdbc.result.ResultSetImpl.getString(ResultSetImpl.java:892)

at org.apache.tomcat.dbcp.dbcp2.DelegatingResultSet.getString(DelegatingResultSet.java:266)

at org.apache.tomcat.dbcp.dbcp2.DelegatingResultSet.getString(DelegatingResultSet.java:266)

at com.js.Importer.importData(Importer.java:99)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:497)

This exception came up when I added outer join for table3 and table4. Before these new joins there were no errors.

I tried re-executing the code monitoring resources usage on my pc, turns out that the app takes up to 2GB RAM and 100% CPU while processing data. When it reaches 2GB RAM it goes out of memory.

I've read this answer. In the comments section you can find:

Tim: Would it be correct to summarise your answer as follows: "It's just like an 'Out of Java Heap space' error. Give it more memory with -Xmx." ?

OP: @Tim: No, that wouldn't be correct. While giving it more memory could reduce the problem, you should also look at your code and see why it produces that amount of garbage and why your code skims just below the "out of memory" mark. It's often a sign of broken code.

So I could also just give the app higher RAM, but this seems to be sort of a workaround, so I'd like to solve the issue instead.

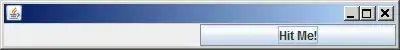

I also tried profiling the app with VisualVM and this was the result:

It seems that neo4j is keeping all nodes in memory even if I process 10K nodes at time to avoid memory overhead.

How to stop it from doing such thing?

How can I solve the memory issue?