This is based on what I've gathered myself.

The HTTP header Keep-Alive: timeout=5, max=1000 is just a header sent with HTTP requests. Think of it as a way for two hosts (client and server) to communicate: one host says 'hey, keep the connection alive please'. Modern browsers send it automatically, and servers may or may not honor it. The keepAlive: true option of the agent is something else; as the documentation says:

Not to be confused with the keep-alive value of the Connection header.

In other words, keepAlive: false is not the same thing as Connection: close. The option doesn't really have anything to do with the header; the agent manages sockets at the TCP level on the HTTP client side.

keepAlive boolean Keep sockets around even when there are no outstanding requests, so they can be used for future requests without having to reestablish a TCP connection

As soon as you use an agent for your HTTP client, Connection: Keep-Alive will be sent, unless keepAlive is set to false and maxSockets to Infinity:

    const http = require('http');

    const options = {
      port: 3000,
      agent: new http.Agent({
        keepAlive: false,
        maxSockets: Infinity,
      }),
    }; // ----> Connection: close
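One way to check this claim yourself is to let a throwaway server echo back the Connection header it receives. This is only a minimal sketch, assuming a local server on an ephemeral port; probeConnectionHeader is a hypothetical helper name, not part of Node.js.

```javascript
const http = require('http');

// Hypothetical helper: starts a throwaway local server, sends one request
// through an agent built from `agentOptions`, and resolves with the
// Connection header value the server received.
function probeConnectionHeader(agentOptions) {
  return new Promise((resolve, reject) => {
    // The server simply echoes the Connection header back as the body.
    const server = http.createServer((req, res) => {
      res.end(String(req.headers.connection));
    });

    server.listen(0, '127.0.0.1', () => {
      const agent = new http.Agent(agentOptions);
      const { port } = server.address();

      const req = http.request({ host: '127.0.0.1', port, agent }, (res) => {
        let body = '';
        res.setEncoding('utf8');
        res.on('data', (chunk) => { body += chunk; });
        res.on('end', () => {
          // Clean up on the next loop iteration so the process can exit.
          setImmediate(() => {
            agent.destroy();
            server.close();
            resolve(body.toLowerCase());
          });
        });
      });
      req.on('error', reject);
      req.end();
    });
  });
}

probeConnectionHeader({ keepAlive: false, maxSockets: Infinity })
  .then((value) => console.log('keepAlive: false, maxSockets: Infinity ->', value));
probeConnectionHeader({ keepAlive: false, maxSockets: 20 })
  .then((value) => console.log('keepAlive: false, maxSockets: 20 ->', value));
```

If the behaviour described above holds on your Node.js version, the first call should report close and the second keep-alive.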

What exactly is an agent?

An Agent is responsible for managing connection persistence and reuse for HTTP clients. It maintains a queue of pending requests for a given host and port, reusing a single socket connection for each until the queue is empty, at which time the socket is either destroyed or put into a pool where it is kept to be used again for requests to the same host and port. Whether it is destroyed or pooled depends on the keepAlive option.

Pooled connections have TCP Keep-Alive enabled for them, but servers may still close idle connections, in which case they will be removed from the pool and a new connection will be made when a new HTTP request is made for that host and port. Servers may also refuse to allow multiple requests over the same connection, in which case the connection will have to be remade for every request and cannot be pooled. The Agent will still make the requests to that server, but each one will occur over a new connection.
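The pooling described in the documentation can be observed by counting the TCP connections a server sees for a few sequential requests. The following is a rough sketch under my own assumptions (a local server on an ephemeral port, a 50 ms pause between requests so the agent has time to release the socket); countConnections is a hypothetical helper name.

```javascript
const http = require('http');

// Hypothetical helper: sends `total` requests one after another through an
// agent built from `agentOptions` and resolves with the number of TCP
// connections the local test server accepted.
function countConnections(agentOptions, total) {
  return new Promise((resolve, reject) => {
    let connections = 0;
    let remaining = total;

    const server = http.createServer((req, res) => res.end('ok'));
    server.on('connection', () => { connections += 1; });

    server.listen(0, '127.0.0.1', () => {
      const agent = new http.Agent(agentOptions);
      const { port } = server.address();

      const sendNext = () => {
        const req = http.request({ host: '127.0.0.1', port, agent }, (res) => {
          res.resume(); // discard the body
          res.on('end', () => {
            remaining -= 1;
            if (remaining > 0) {
              // Pause so the agent has pooled or destroyed the socket.
              setTimeout(sendNext, 50);
            } else {
              setTimeout(() => {
                agent.destroy();
                server.close();
                resolve(connections);
              }, 50);
            }
          });
        });
        req.on('error', reject);
        req.end();
      };

      sendNext();
    });
  });
}

countConnections({ keepAlive: true }, 3)
  .then((n) => console.log('keepAlive: true  ->', n, 'connection(s)'));
countConnections({ keepAlive: false, maxSockets: 20 }, 3)
  .then((n) => console.log('keepAlive: false ->', n, 'connection(s)'));
```

With keepAlive: true the socket goes back to the pool and is reused; with keepAlive: false it is destroyed once no request is waiting for it, so every request opens a new connection.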

Regarding timeout and max, as far as I know, these are set (automatically?) by the server, for example when Apache is configured like this:

    #
    # KeepAlive: Whether or not to allow persistent connections (more than
    # one request per connection). Set to "Off" to deactivate.
    #
    KeepAlive On

    #
    # MaxKeepAliveRequests: The maximum number of requests to allow
    # during a persistent connection. Set to 0 to allow an unlimited amount.
    # We recommend you leave this number high, for maximum performance.
    #
    MaxKeepAliveRequests 100

    #
    # KeepAliveTimeout: Number of seconds to wait for the next request from the
    # same client on the same connection.
    #
    KeepAliveTimeout 5

which gives

    Connection: Keep-Alive
    Keep-Alive: timeout=5, max=100
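If you want to read those two values on the client side, the header value is just a comma-separated list of name=value pairs. A small sketch, where parseKeepAlive is a hypothetical helper of mine and not something Node.js or the agent provides:

```javascript
// Hypothetical helper: turns a Keep-Alive header value such as
// "timeout=5, max=100" into an object with numeric fields.
function parseKeepAlive(value) {
  const params = {};
  for (const part of value.split(',')) {
    const [key, raw] = part.split('=').map((s) => s.trim());
    // Keep only well-formed "name=<digits>" pairs.
    if (key && raw !== undefined && /^\d+$/.test(raw)) {
      params[key.toLowerCase()] = Number(raw);
    }
  }
  return params;
}

console.log(parseKeepAlive('timeout=5, max=100')); // { timeout: 5, max: 100 }
```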

But are these relevant for Node.js? I'll let more experienced people answer that. Anyway, the agent won't set them and won't change Connection: Keep-Alive unless keepAlive is set to false and maxSockets to Infinity, as said above.

However, for the agent config to have any meaning, Connection must be set to Keep-Alive.

Okay, now for a little experiment to see the agent at work!

I've set up a client for testing (since axios uses http.Agent for the agent anyway, I just use http).

    const http = require('http');

    const options = {
      port: 3000,
      agent: new http.Agent({
        keepAlive: true,
        maxSockets: 2,
      }),
      // headers: {
      //   'Connection': 'close'
      // }
    };

    let i = 0; // request counter

    function request() {
      console.log(`${++i} - making a request`);

      const req = http.request(options, (res) => {
        console.log(`STATUS: ${res.statusCode}`);
        console.log(`HEADERS: ${JSON.stringify(res.headers)}`);
        res.setEncoding('utf8');
        res.on('data', (chunk) => {
          console.log(`BODY: ${chunk}`);
        });
        res.on('end', () => {
          console.log('No more data in response.');
        });
      });

      req.on('error', (e) => {
        console.error(`problem with request: ${e.message}`);
      });

      req.end();
    }

    setInterval(request, 3000); // send a request every 3 seconds

And the server is an Express application (I'll skip the details):

    // Assumes `server` is the underlying http.Server of the Express app
    // and `shortid` is the shortid package.
    server.on('connection', function(socket) {
      socket.id = shortid.generate();
      //socket.setTimeout(500)
      console.log("A new connection was made by a client." + ` SOCKET ${ socket.id }`);

      socket.on('end', function() {
        console.log(`SOCKET ${ socket.id } END: other end of the socket sends a FIN packet`);
      });

      socket.on('timeout', function() {
        console.log(`SOCKET ${ socket.id } TIMEOUT`);
      });

      socket.on('error', function(error) {
        // error.message is more readable than JSON.stringify(error)
        console.log(`SOCKET ${ socket.id } ERROR: ` + error.message);
      });

      socket.on('close', function(had_error) {
        console.log(`SOCKET ${ socket.id } CLOSED. IT WAS ERROR: ` + had_error);
      });
    });
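Since I skipped the Express details, here is a dependency-free sketch of the same idea for anyone who wants to reproduce the experiment. It is my own approximation, not the exact server I ran: attachSocketLogging is a hypothetical helper, and a simple counter stands in for shortid.

```javascript
const http = require('http');

// Hypothetical helper: labels every incoming socket with a counter and
// reports its lifecycle through `log` (console.log by default).
function attachSocketLogging(server, log = console.log) {
  let nextId = 0;
  server.on('connection', (socket) => {
    const id = ++nextId;
    log(`SOCKET ${id} OPENED`);
    socket.on('end', () => log(`SOCKET ${id} END`));
    socket.on('timeout', () => log(`SOCKET ${id} TIMEOUT`));
    socket.on('error', (error) => log(`SOCKET ${id} ERROR: ${error.message}`));
    socket.on('close', (hadError) => log(`SOCKET ${id} CLOSED (error: ${hadError})`));
  });
  return server;
}

// Build the server; call server.listen(3000) to try it with the client above.
const server = attachSocketLogging(
  http.createServer((req, res) => res.end('response from the server'))
);
```

The server is deliberately not started here, so nothing keeps the process alive until you call server.listen(3000) yourself.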

To show that keepAlive: false != Connection: close, let's set keepAlive to false and see what happens server-side.

    agent: new http.Agent({
      keepAlive: false,
      maxSockets: 20
    })

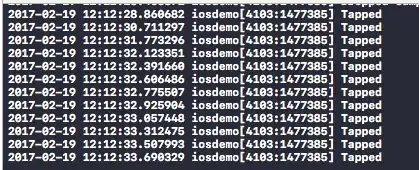

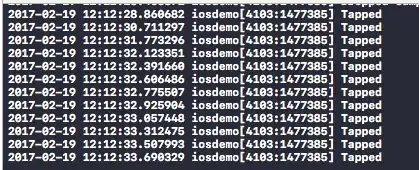

Server

Client

As you can see, I've not set maxSockets to Infinity, so even though keepAlive in the agent was set to false, the Connection header was set to Keep-Alive. However, the socket on the server was closed immediately after each request. Let's see what happens when we set keepAlive to true.

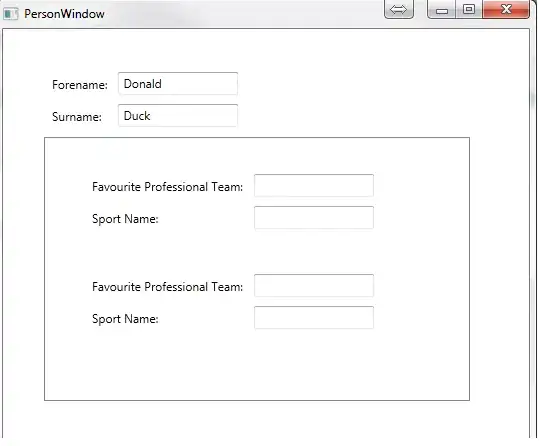

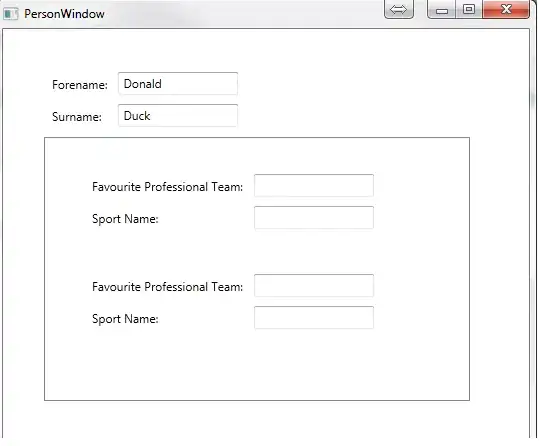

Server

Client

This time around, only one socket has been used. The connection between the client and the server persisted beyond a single request.

One thing I've learned, thanks to this great article, is that Firefox can have as many as 6 concurrent persistent connections at a time. You can reproduce this with the agent by setting maxSockets to 6. For testing purposes, I'll set it to 2. I also won't return anything from the server, so the connection will be left hanging.

    // client
    agent: new http.Agent({
      keepAlive: true,
      maxSockets: 2,
    }),

    // server: the response is commented out, so the request is left hanging
    //res.send('response from the server');

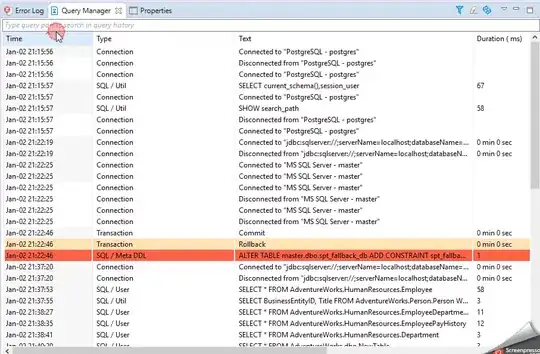

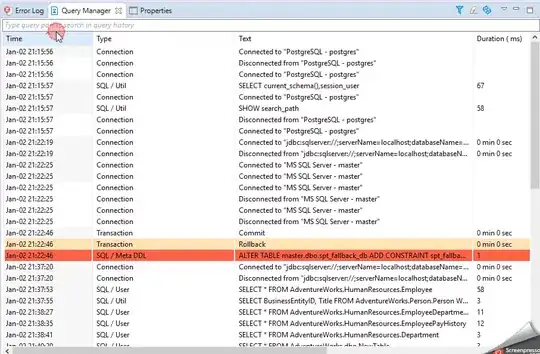

Server

Client

The client keeps sending requests, but only two have been received by the server. Yet after two minutes, two new requests are accepted. That delay is the server's socket timeout (see http_server_timeout):

The number of milliseconds of inactivity before a socket is presumed to have timed out.

What actually happened is that the client queued the subsequent requests, and once the server freed the sockets, the client was able to send two new requests from the queue.
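The queueing can also be seen directly on the agent, through its sockets and requests properties. The sketch below is a simplified variant of the experiment, under my own assumptions: the local server answers after 100 ms instead of hanging, five requests are fired at once, and snapshotAgentQueue is a hypothetical helper name.

```javascript
const http = require('http');

// Hypothetical helper: fires 5 requests at once through an agent limited to
// 2 sockets and resolves with how many were active vs. queued right after
// they were issued.
function snapshotAgentQueue() {
  return new Promise((resolve, reject) => {
    // Slow server: answers after 100 ms so requests pile up on the client.
    const server = http.createServer((req, res) => {
      setTimeout(() => res.end('ok'), 100);
    });

    server.listen(0, '127.0.0.1', () => {
      const agent = new http.Agent({ keepAlive: true, maxSockets: 2 });
      const { port } = server.address();
      const total = 5;
      let done = 0;
      let snapshot;

      for (let n = 0; n < total; n++) {
        const req = http.request({ host: '127.0.0.1', port, agent }, (res) => {
          res.resume(); // discard the body
          res.on('end', () => {
            done += 1;
            if (done === total) {
              setImmediate(() => {
                agent.destroy();
                server.close();
                resolve(snapshot);
              });
            }
          });
        });
        req.on('error', reject);
        req.end();
      }

      // agent.sockets and agent.requests map "host:port:..." names to arrays.
      const count = (table) =>
        Object.values(table).reduce((sum, list) => sum + list.length, 0);
      snapshot = { active: count(agent.sockets), queued: count(agent.requests) };
    });
  });
}

snapshotAgentQueue().then((s) => console.log(s));
```

Only two requests get a socket; the other three wait in the agent's queue until a socket is freed, which matches what the screenshots show over a longer time span.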

So, I hope this helps.