It won't work for local[*] because by then it's too late to configure the JVM: there is a single JVM that hosts both the driver and the single executor (which, again, runs inside the driver).

--master local[*] --conf -Dlogback.configurationFile=C:\Users\A661758\Desktop\logback.xml

Not to mention that such JVM options are set through spark.driver.extraJavaOptions or spark.executor.extraJavaOptions, as described in Runtime Environment:

spark.driver.extraJavaOptions: A string of extra JVM options to pass to the driver. For instance, GC settings or other logging.

spark.executor.extraJavaOptions: A string of extra JVM options to pass to executors. For instance, GC settings or other logging.

When you read the documentation, you should find the following (highlighting mine):

Note: In client mode, this config must not be set through the SparkConf directly in your application, because the driver JVM has already started at that point. Instead, please set this through the --driver-java-options command line option or in your default properties file.

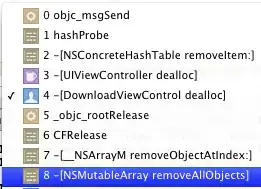

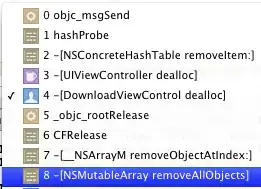
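For the "default properties file" route mentioned in the note, a minimal sketch of an entry in conf/spark-defaults.conf could look like the following. The path /path/to/logback.xml is a placeholder, not the path from the question. Forward slashes are used on the assumption that the file is parsed as a Java properties file, where a backslash acts as an escape character.

```properties
# conf/spark-defaults.conf (sketch; the logback.xml path is a placeholder)
# The key and the value are separated by whitespace.
spark.driver.extraJavaOptions  -Dlogback.configurationFile=/path/to/logback.xml
```

Because spark-submit reads this file before it launches the driver JVM, the option should be in place in client mode as well.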

When you execute spark-submit --help you should see the following:

--driver-java-options Extra Java options to pass to the driver.

Use --driver-java-options as follows:

./bin/spark-shell --driver-java-options -Daaa=bbb

and check the web UI's Environment tab to see whether the option is set.