Feature Extraction

To generalise @bakkal's suggestion of using edges, one can extract many types of image features, including edges, corners, blobs and ridges. There is a page on the MathWorks site with a few examples, including digit recognition using HOG (histogram of oriented gradients) features.

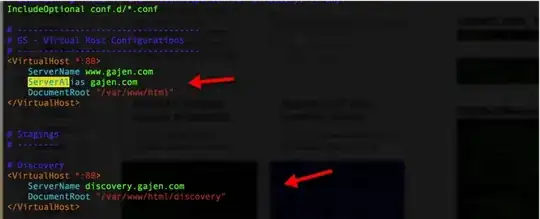

Such techniques should work for more complex images too, where edges alone are not always the best features. I extracted HOG features from your two images using MATLAB's extractHOGFeatures.

If you have Octave instead, I believe you can use VLFeat for HOG features.

Another important thing to keep in mind is that all images should have the same size, so that their feature vectors have the same length and can be compared. I resized both of your images to 500x500, but this choice is arbitrary.
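A rough sketch of why the size matters: with the default extractHOGFeatures settings as documented by MathWorks (8x8 cells, 2x2 blocks, one cell of block overlap, 9 orientation bins), the length of the feature vector follows directly from the image size. The variable names below are my own, and the second image size is just a made-up example. The snippet uses only base functions, so it should also run in Octave.

```matlab
% Default extractHOGFeatures parameters (taken from the documentation).
cell_size     = [8 8];
block_size    = [2 2];
block_overlap = ceil(block_size / 2);   % [1 1]
num_bins      = 9;

% Documented formula for the HOG feature vector length of an image of size sz.
blocks_per_image = @(sz) floor((sz ./ cell_size - block_size) ./ ...
                               (block_size - block_overlap) + 1);
hog_length = @(sz) prod([blocks_per_image(sz), block_size, num_bins]);

len_500   = hog_length([500 500]);   % 61 x 61 blocks -> 133956 values
len_other = hog_length([300 400]);   % 36 x 49 blocks -> 63504 values

fprintf('500x500: %d values, 300x400: %d values\n', len_500, len_other);
```

Two images of different sizes therefore give vectors of different lengths, which cannot be compared element by element without resizing first.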

The code to generate the HOG visualisation:

```matlab
close all; clear; clc;

% reading in
img1 = rgb2gray(imread('img1.png'));
img2 = rgb2gray(imread('img2.png'));

% all images should have the same size
img_size = [500 500];
img1_resized = imresize(img1, img_size);
img2_resized = imresize(img2, img_size);

% extracting features
[hog1, vis1] = extractHOGFeatures(img1_resized);
[hog2, vis2] = extractHOGFeatures(img2_resized);

% plotting
figure(1);
subplot(1, 2, 1);
plot(vis1);
subplot(1, 2, 2);
plot(vis2);
```

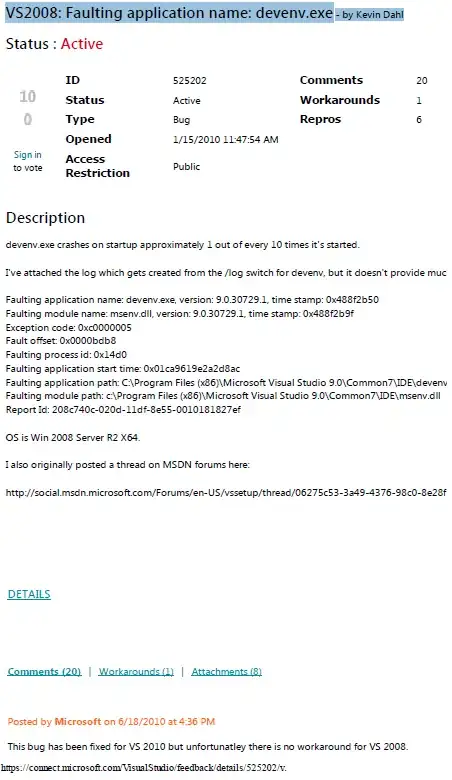
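If you want a number rather than a plot, one simple option is to compare the two descriptors directly. The sketch below uses cosine similarity, which is my own choice of measure and not something prescribed by the toolbox. The helper name and the short stand-in vectors are hypothetical, chosen so that the snippet runs without the Computer Vision Toolbox. In practice you would pass in hog1 and hog2 from the code above.

```matlab
% Hypothetical helper: cosine similarity between two feature vectors
% of equal length (1 means identical direction).
cosine_similarity = @(a, b) dot(a, b) / (norm(a) * norm(b));

% Stand-in descriptors; replace with hog1 and hog2 from extractHOGFeatures.
feat_a = [0.2 0.5 0.1 0.7];
feat_b = [0.2 0.5 0.1 0.7];   % same content as feat_a
feat_c = [0.7 0.1 0.5 0.2];   % different content

sim_same = cosine_similarity(feat_a, feat_b);
sim_diff = cosine_similarity(feat_a, feat_c);

fprintf('same: %.3f, different: %.3f\n', sim_same, sim_diff);
```

A value close to 1 for cosine_similarity(hog1, hog2) would suggest that the two images are described by nearly the same features. What counts as "close enough" depends on your data.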

You do not have to be limited to HOG features. One can also quickly try SURF features.

Again, the color inversion does not matter because the features match. However, HOG features are probably a better choice here, because the 20 plotted points/blobs do not represent the number 6 very well. The MATLAB code for the SURF plots:

```matlab
% extracting SURF features
points1 = detectSURFFeatures(img1_resized);
points2 = detectSURFFeatures(img2_resized);

% plotting SURF features
figure(2);
subplot(1, 2, 1);
imshow(img1_resized);
hold on;
plot(points1.selectStrongest(20));
hold off;

subplot(1, 2, 2);
imshow(img2_resized);
hold on;
plot(points2.selectStrongest(20));
hold off;
```

To summarise, you can choose different types of features depending on the problem. Most of the time, raw pixel values are not good enough, as you saw from your own experience, unless you have a very large dataset covering all possible cases.
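As a toy illustration of this point, the sketch below builds a small synthetic image (a bright square on a dark background, not one of your images) and its color-inverted copy. The raw pixels disagree everywhere, while the gradient magnitudes are identical, because inversion only flips the sign of the gradient. As far as I understand the documentation, extractHOGFeatures uses unsigned orientations by default, which is why a sign flip does not change the descriptor. Only base functions are used, and all names are my own.

```matlab
% Synthetic 8-bit image and its color-inverted copy.
img = zeros(32, 32, 'uint8');
img(9:24, 9:24) = 255;
img_inv = 255 - img;

% Raw pixel comparison: mean absolute difference per pixel.
pixel_diff = mean(abs(double(img(:)) - double(img_inv(:))));

% Gradient comparison: inversion negates the gradient components...
[gx, gy]         = gradient(double(img));
[gx_inv, gy_inv] = gradient(double(img_inv));
sign_flipped = isequal(gx_inv, -gx) && isequal(gy_inv, -gy);

% ...so the gradient magnitude is unchanged.
mag      = hypot(gx, gy);
mag_inv  = hypot(gx_inv, gy_inv);
mag_diff = max(abs(mag(:) - mag_inv(:)));

fprintf('pixel difference: %g, magnitude difference: %g\n', pixel_diff, mag_diff);
```

Here the pixel difference is the maximum possible (255), whereas the magnitude difference is 0, so a classifier working on raw pixels sees two very different inputs where a gradient-based one sees the same shape.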