So I guess you have already read this in the other question: sigmoid and tanh functions have a fixed output range. For sigmoid this is (0,1), while for tanh it's (-1,1). Both are bounded above and below.

It's easier to look at a schematic drawing of an LSTM cell:

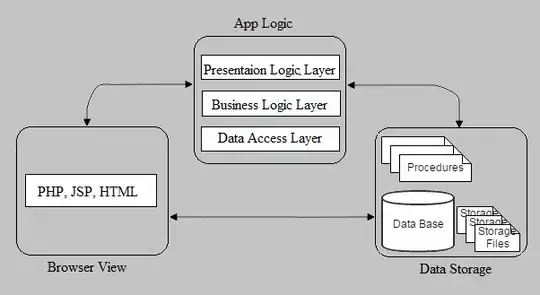

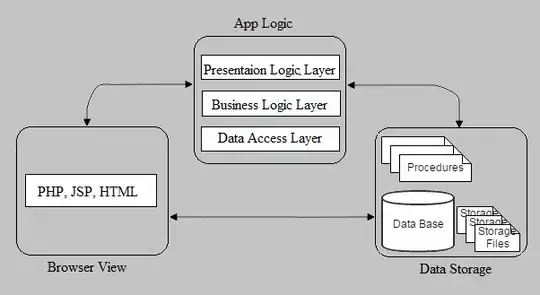

As you can see in the picture above, there are 3 gates. But contrary to what you might believe, these gates aren't actually connected in a feedforward manner to any other neuron in the cell.

The gates are connected to connections instead of neurons. Weird, huh? Let me explain. x_t projects a connection to c_t, and that connection has a certain multiplier (aka weight). So the input from x_t to c_t becomes x_t * weight.

But that's not all. The gate adds another multiplier to that calculation, so instead of x_t * weight it becomes x_t * weight * gate. For the input gate, that is x_t * weight * i_t.

Basically, the activation value of i_t gets multiplied with the value coming from x_t. So if i_t is high (close to 1), more of the value from x_t reaches c_t. If i_t is low, it can potentially disable the input from x_t altogether (as i_t approaches 0).
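To make the multiplication concrete, here is a minimal scalar sketch in Python. It follows the simplified picture used in this answer (x_t * weight * i_t) rather than a full LSTM, which would also feed h_{t-1} into the gate and squash the candidate value with tanh. The names `gated_input` and `gate_preactivation` and all the numbers are made up for illustration.

```python
import math


def sigmoid(z: float) -> float:
    """Squash any real number into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def gated_input(x_t: float, weight: float, gate_preactivation: float) -> float:
    """Contribution of x_t to c_t after the input gate is applied.

    gate_preactivation is a hypothetical stand-in for whatever the gate
    computes before its sigmoid; in a real LSTM it depends on x_t and h_{t-1}.
    """
    i_t = sigmoid(gate_preactivation)  # gate activation, always in (0, 1)
    return x_t * weight * i_t          # the gate acts as an extra multiplier


# Illustrative values only (assumptions, not taken from the pictures above).
x_t, weight = 2.0, 0.5

open_gate = gated_input(x_t, weight, 10.0)     # i_t close to 1: input passes through
closed_gate = gated_input(x_t, weight, -10.0)  # i_t close to 0: input is almost blocked

print(f"ungated input : {x_t * weight}")
print(f"gate open     : {open_gate:.5f}")
print(f"gate closed   : {closed_gate:.5f}")
```

With the gate open, the result stays close to the ungated x_t * weight. With the gate closed, it shrinks towards zero. Since a sigmoid never outputs exactly 0 or 1, the gate dims or passes the connection rather than switching it fully off or on.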