We need to map each coordinate in the rotated image to a corresponding coordinate in the original.

Assuming a rotation about (a, b) and counter-clockwise rotation by θ degrees:

Where (x, y) are in the original image and (x', y') the rotated one.

Simple technique: nearest neighbor

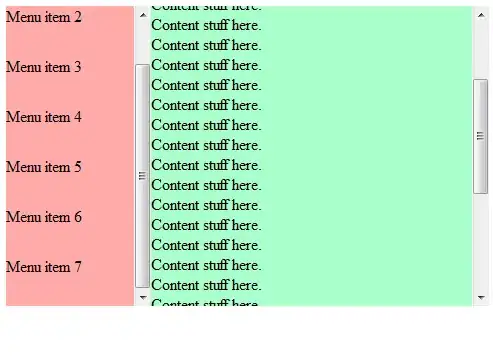

When sampling the pixel data using the calculated coordinates, we could simply round them to the nearest integers (i.e. nearest pixel). This gives the following result:

At first glance this seems good enough, but webpage re-scaling + image compression blurs the edges. A zoomed-in view reveals that the resulting image has nasty jagged edges (aliasing):

Filtering: bilinear approximation

To improve on this, we need to realize that the rotated "pixel" area actually covers multiple pixels in the original image:

We can then calculate the average pixel color as the sum of contributions from each covered original pixel weighted by their relative areas. Let's call this "anisotropic" filtering for convenience (not the exact meaning of this term, but the closest I can think of).

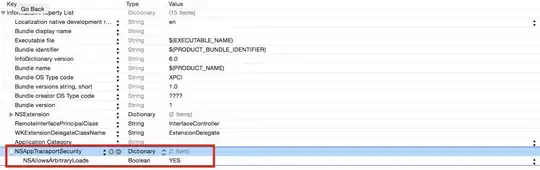

However the areas will be quite difficult to calculate exactly. So we can "cheat" a little by applying an approximation, where the rotated sample area (in red) is aligned with the gridlines:

This makes the areas a lot easier to calculate. We shall use the first-order linear average method - "bilinear" filtering.

C# code sample:

Transform trn = new Transform(a, cx, cy); // inverse rotation transform to original image space

for (int y = 0; y < h; y++)

{

for (int x = 0; x < w; x++)

{

Vector v = trn.Get((float)x, (float)y);

int i = (int)Math.Floor(v.x),

j = (int)Math.Floor(v.y);

float s = v.x - (float)i,

t = v.y - (float)j;

RGB c = RGB.Black, u; float z, r = 0.0f;

if ((u = src.getPixel(i, j)).Valid)

{

z = (1 - s) * (1 - t); // area of overlap in top-left covered pixel

c += u * z; r += z; // add to total color and total area

}

if ((u = src.getPixel(i + 1, j)).Valid)

{

z = s * (1 - t);

c += u * z; r += z;

}

if ((u = src.getPixel(i, j + 1)).Valid)

{

z = (1 - s) * t;

c += u * z; r += z;

}

if ((u = src.getPixel(i + 1, j + 1)).Valid)

{

z = s * t;

c += u * z; r += z;

}

if (r > 0.0f)

dst.setPixel(x, y, c * (1.0f / r)); // normalize the sum by total area

}

}

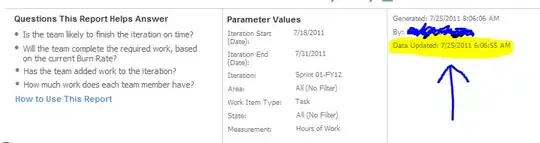

Zoomed-in result:

Much better than the naive nearest-neighbor method!

OCD Alert!

Just out of curiosity, I implemented the full "anisotropic" method mentioned before. Took way longer than it should, and not exactly efficient (using Sutherland-Hodgman clipping to calculate the intersection region between the rotated pixel area and each grid pixel). Computational time went through the roof - about 7 seconds compared to less than 0.5 for the bilinear method. The end result? Not worth the effort at all!

(L: bilinear, R: anisotropic)

Code (my implementation is trash, don't bother to read it, really):

private static Vector[][] clipboxes = new Vector[][] {

new Vector[] { new Vector(-1f,-1f), new Vector(0f,-1f), new Vector(0f,0f), new Vector(-1f,0f)},

new Vector[] { new Vector(0f,-1f), new Vector(1f,-1f), new Vector(1f,0f), new Vector(0f,0f)},

new Vector[] { new Vector(1f,-1f), new Vector(2f,-1f), new Vector(2f,0f), new Vector(1f,0f)},

new Vector[] { new Vector(-1f,0f), new Vector(0f,0f), new Vector(0f,1f), new Vector(-1f,1f)},

new Vector[] { new Vector(0f,0f), new Vector(1f,0f), new Vector(1f,1f), new Vector(0f,1f)},

new Vector[] { new Vector(1f,0f), new Vector(2f,0f), new Vector(2f,1f), new Vector(1f,1f)},

new Vector[] { new Vector(-1f,1f), new Vector(0f,1f), new Vector(0f,2f), new Vector(-1f,2f)},

new Vector[] { new Vector(0f,1f), new Vector(1f,1f), new Vector(1f,2f), new Vector(0f,2f)},

new Vector[] { new Vector(1f,1f), new Vector(2f,1f), new Vector(2f,2f), new Vector(1f,2f)}

};

private static bool inside(Vector a, Vector b, Vector c)

{

return ((c - b) ^ (a - b)) > 0f;

}

private static Vector intersect(Vector a, Vector b, Vector c, Vector d)

{

return (((c - d) * (a ^ b)) - ((a - b) * (c ^ d))) * (1.0f / ((a - b) ^ (c - d)));

}

private static float getArea(List<Vector> l)

{

if (l.Count == 0)

return 0f;

float sum = 0.0f;

Vector b = l.Last();

foreach (Vector c in l)

{

sum += b ^ c;

b = c;

}

return 0.5f * Math.Abs(sum);

}

private static float getOverlap(Vector[] clip, Vector[] box)

{

List<Vector> lO = box.ToList();

Vector lC = clip[clip.Length - 1];

foreach (Vector C in clip)

{

if (lO.Count == 0)

return 0.0f;

List<Vector> lI = lO;

Vector lB = lI.Last();

lO = new List<Vector>();

foreach (Vector B in lI)

{

if (inside(B, lC, C))

{

if (!inside(lB, lC, C))

lO.Add(intersect(lB, B, lC, C));

lO.Add(B);

}

else

if (inside(lB, lC, C))

lO.Add(intersect(lB, B, lC, C));

lB = B;

}

lC = C;

}

return getArea(lO);

}

// image processing code, as before

Transform trn = new Transform(a, cx, cy);

for (int y = 0; y < h; y++)

{

for (int x = 0; x < w; x++)

{

Vector p = trn.Get((float)x, (float)y);

int i = p.X, j = p.Y;

Vector d = new Vector(i, j);

List<Vector> r = new List<Vector>();

r.Add(p - d);

r.Add(trn.Get((float)(x+1), (float)y) - d);

r.Add(trn.Get((float)(x+1), (float)(y+1)) - d);

r.Add(trn.Get((float)x, (float)(y+1)) - d);

RGB c = RGB.Black;

float t = 0.0f;

for (int l = 0; l < 3; l++)

{

for (int m = 0; m < 3; m++)

{

float area = getOverlap(clipboxes[m * 3 + l], r.ToArray());

if (area > 0.0f)

{

RGB s = src.getPixel(i + l - 1, j + m - 1);

if (s.Valid)

{

c += s * area;

t += area;

}

}

}

}

if (t > 0.0f)

dst.setPixel(x, y, c * (1.0f / t));

}

}

There are more advanced techniques available, e.g. using Fourier transforms - see Dartmouth University's paper named High Quality Alias Free Image Rotation (directly available from their website). Also, instead of bilinear interpolation, we could have used a higher order e.g. bicubic, which would give even smoother results.