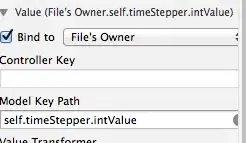

In Brazil, a standard form is filled in (by hand) for every newborn in a hospital. This form is called "DNV" (after the Portuguese for "Born Alive Declaration"). There is also a form called "DO" (after the Portuguese for "Decease Declaration"). In my state, the hospitals send close to a million of these forms to the agency where I work, where we compile them into a database called "Vital Statistics". I'm investigating whether it is possible to automate the work. Commercial ICR solutions cost a lot of money and nobody in charge believes it can be done internally, so this is a grassroots project.

The top of the form is like this:

I got my hands on 100K PDF files sent from several hospitals and was able to classify them into one of the two types (DNV or DO) using a naive algorithm: first I locate the black rectangle that contains the type of the document (using cv2.findContours and a bit of heuristics), then I apply OCR to it (pytesseract.image_to_string). I found 20k "decease declarations" (DO) and 80k "born alive declarations" (DNV).

Using a similar algorithm I was able to OCR the number to the right of the black rectangle and link 55k form images with the corresponding records in a database filled in by professional typists based on these documents.

Now I want to locate the date field (in red) in order to try a bit of machine learning for recognizing the digits - the field is highlighted below:

First I tried a "template matching" algorithm using this as the template:

This works well, but only if the template and the form image are at the same scale and angle. The cv2.matchTemplate method is really sensitive to scale. I tried feature matching algorithms using SURF, but I'm having a hard time getting them to work (it feels like overkill).

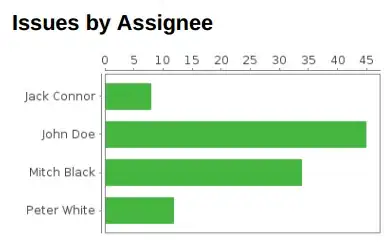

Since it is easy to locate the black rectangle on the left, I'm considering a few options for locating the digits:

- normalizing the scale and angle based on the black rectangle and then trying cv2.matchTemplate;
- trying to locate the contour, simplifying it with cv2.approxPolyDP, and guessing the digits' location.

My question is: any advice about how to attack the problem? What other algorithm can I use in order to locate this form field if the input is not normalized in terms of resolution/angle?

[update #1]

Given (x, y, w, h) as the position and size of the black rectangle on the left, I can narrow down the search with reasonable confidence.

Trying random samples, this formula gives me:

```python
# PIL crop box is (left, upper, right, lower)
img.crop((x+w, y+h/3, x+h*3.05, y+2*h/3))
```
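Since the rest of the pipeline works on OpenCV/NumPy arrays rather than PIL images, here is a sketch of the same crop as array slicing. The function name is made up, and rounding and clamping to the image bounds are my additions. Note that the formula only yields a non-empty box when w < 3.05 * h.

```python
import numpy as np

def crop_date_field(img, rect):
    """Crop the date field from a NumPy image using the formula above.
    `rect` is (x, y, w, h) of the black rectangle."""
    x, y, w, h = rect
    left, right = x + w, x + h * 3.05
    top, bottom = y + h / 3, y + 2 * h / 3
    height, width = img.shape[:2]
    # Round to whole pixels and keep the box inside the image.
    x0, x1 = np.clip([round(left), round(right)], 0, width)
    y0, y1 = np.clip([round(top), round(bottom)], 0, height)
    return img[y0:y1, x0:x1]

page = np.zeros((400, 600), np.uint8)
field = crop_date_field(page, (40, 50, 120, 60))
```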

[update #2]

I just learned about erode and dilate; they are now my new best friends.

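```python
import cv2
import numpy as np
import matplotlib.pyplot as plt

# `edges` is assumed to be the binary edge image from an earlier step
horizontal = edges.copy()
vertical = edges.copy()

kv = np.ones((25, 1), np.uint8)  # tall, thin kernel: keeps vertical lines
kh = np.ones((1, 30), np.uint8)  # wide, flat kernel: keeps horizontal lines

# Erode, then dilate: only long horizontal/vertical runs survive
horizontal = cv2.dilate(cv2.erode(horizontal, kh, iterations=2), kh, iterations=2)
vertical = cv2.dilate(cv2.erode(vertical, kv, iterations=2), kv, iterations=2)

grid = horizontal | vertical

plt.imshow(edges, 'gray')
plt.figure()  # separate figure so the second image does not replace the first
plt.imshow(grid, 'gray')
```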

BTW I don't have a clue about computer vision. Let's get back to Google...