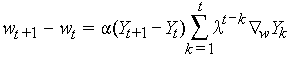

I have an image represented by a matrix of size n × n.

I've created a transform matrix:

    M = cv2.getPerspectiveTransform(...)

I can warp the image with the transform defined in M using:

    cv2.warpPerspective(image, M, image_shape)

According to this, I should be able to multiply the matrix by a point and get the new location of that point after the transformation. I tried that:

    point = [100, 100, 0]
    x, y, z = M.dot(point)

But I got the wrong result (in this case `[112.5 12.5 0]`).

What am I doing wrong?

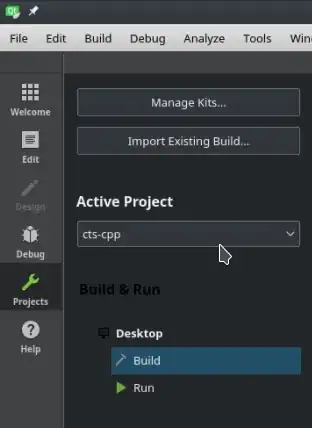

For more clarity, here's what I'm doing:

I have this image, with the lines and the square on different layers:

I warp the lines and get this:

And now I want to get the coordinates for putting the square like this:

What I have is the warp matrix I used for the lines and the coordinates of the square in the first picture.

Note: one option is to create an image containing a single dot, warp it with `cv2.warpPerspective` as above, and find the non-zero cells in the resulting image. I'm hoping for something more idiomatic than that.