We set up two identical HP Z840 workstations with the following specs:

- 2 x Xeon E5-2690 v4 @ 2.60GHz (Turbo Boost ON, HT OFF, total 28 logical CPUs)

- 32GB DDR4 2400 Memory, Quad-channel

and installed Windows 7 SP1 (x64) and Windows 10 Creators Update (x64) on each.

Then we ran a small memory benchmark (code below, built with VS2015 Update 3, 64-bit architecture) that allocates, fills, and frees memory simultaneously from multiple threads.

    #include <Windows.h>
    #include <stdio.h>   // printf
    #include <stdlib.h>  // malloc, free, atoi
    #include <string.h>  // memset
    #include <vector>
    #include <ppl.h>

    unsigned __int64 ZQueryPerformanceCounter()
    {
        unsigned __int64 c;
        ::QueryPerformanceCounter((LARGE_INTEGER *)&c);
        return c;
    }

    unsigned __int64 ZQueryPerformanceFrequency()
    {
        unsigned __int64 c;
        ::QueryPerformanceFrequency((LARGE_INTEGER *)&c);
        return c;
    }

    // Simple stopwatch built on QueryPerformanceCounter.
    class CZPerfCounter {
    public:
        CZPerfCounter() : m_st(ZQueryPerformanceCounter()) {}
        void reset() { m_st = ZQueryPerformanceCounter(); }
        unsigned __int64 elapsedCount() { return ZQueryPerformanceCounter() - m_st; }
        unsigned long elapsedMS() { return (unsigned long)(elapsedCount() * 1000 / m_freq); }
        unsigned long elapsedMicroSec() { return (unsigned long)(elapsedCount() * 1000 * 1000 / m_freq); }
        static unsigned __int64 frequency() { return m_freq; }
    private:
        unsigned __int64 m_st;
        static unsigned __int64 m_freq;
    };

    unsigned __int64 CZPerfCounter::m_freq = ZQueryPerformanceFrequency();

    int main(int argc, char ** argv)
    {
        SYSTEM_INFO sysinfo;
        GetSystemInfo(&sysinfo);
        int ncpu = sysinfo.dwNumberOfProcessors;

        // Optional first argument overrides the number of threads.
        if (argc == 2) {
            ncpu = atoi(argv[1]);
        }

        {
            printf("No of threads %d\n", ncpu);

            // Limit the Concurrency Runtime scheduler to at most ncpu threads.
            try {
                concurrency::Scheduler::ResetDefaultSchedulerPolicy();
                int min_threads = 1;
                int max_threads = ncpu;
                concurrency::SchedulerPolicy policy
                (2 // two entries of policy settings
                    , concurrency::MinConcurrency, min_threads
                    , concurrency::MaxConcurrency, max_threads
                );
                concurrency::Scheduler::SetDefaultSchedulerPolicy(policy);
            }
            catch (concurrency::default_scheduler_exists &) {
                printf("Cannot set concurrency runtime scheduler policy (Default scheduler already exists).\n");
            }

            static int cnt = 100;       // allocations per task
            static int num_fills = 1;   // fill passes per allocation

            CZPerfCounter pcTotal;

            // malloc/free
            printf("malloc/free\n");
            {
                CZPerfCounter pc;
                // Chunk sizes: 1, 2, 4, 8 MB.
                for (int i = 1 * 1024 * 1024; i <= 8 * 1024 * 1024; i *= 2) {
                    // 50 tasks, each allocating, filling, and freeing cnt chunks of i bytes.
                    concurrency::parallel_for(0, 50, [i](int /*task*/) {
                        std::vector<void *> ptrs;
                        ptrs.reserve(cnt);
                        for (int n = 0; n < cnt; n++) {
                            auto p = malloc(i);
                            ptrs.emplace_back(p);
                        }
                        for (int f = 0; f < num_fills; f++) {
                            for (auto p : ptrs) {
                                memset(p, num_fills, i);
                            }
                        }
                        for (auto p : ptrs) {
                            free(p);
                        }
                    });
                    printf("size %4d MB, elapsed %8.2f s, \n", i / (1024 * 1024), pc.elapsedMS() / 1000.0);
                    pc.reset();
                }
            }

            printf("\n");
            printf("Total %6.2f s\n", pcTotal.elapsedMS() / 1000.0);
        }

        return 0;
    }

Surprisingly, the result is much worse on Windows 10 CU than on Windows 7. I plotted the results below for 1 MB and 8 MB chunk sizes, varying the number of threads from 2, 4, ... up to 28. While Windows 7 performance degraded only slightly as we increased the number of threads, Windows 10 scaled much worse.

We made sure all Windows updates were applied, updated drivers, and tweaked BIOS settings, without success. We also ran the same benchmark on several other hardware platforms, and all gave a similar curve for Windows 10. So it seems to be a problem with Windows 10.

Has anyone had a similar experience, or does anyone know more about this (maybe we missed something)? This behavior has caused a significant performance hit in our multithreaded application.

*** EDITED

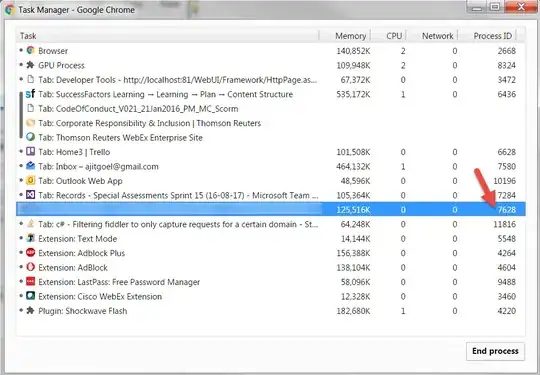

Using https://github.com/google/UIforETW (thanks to Bruce Dawson) to analyze the benchmark, we found that most of the time is spent inside the kernel's KiPageFault. Digging further down the call tree, everything leads to ExpWaitForSpinLockExclusiveAndAcquire. It seems that lock contention is causing this issue.
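To see the page-fault cost in isolation, a minimal single-threaded sketch can time the first fill of a freshly allocated block against a second fill of the same block. This is not part of the benchmark above; `TouchTimes`, `timed_fill`, and `measure_first_touch` are hypothetical names, and the sketch assumes that a large `malloc` block is backed by pages that are only faulted in on first write, which is typical but not guaranteed.

```cpp
#include <chrono>
#include <cstddef>
#include <cstdlib>
#include <cstring>

// Hypothetical result type: seconds spent on the first and second fill.
struct TouchTimes {
    double first_fill_s;
    double second_fill_s;
    bool ok;  // false if the allocation failed or bytes == 0
};

// Fill `bytes` bytes at p with `value` and return the elapsed seconds.
static double timed_fill(unsigned char* p, std::size_t bytes, int value) {
    auto t0 = std::chrono::steady_clock::now();
    std::memset(p, value, bytes);
    // Read one byte back through a volatile pointer to discourage the
    // compiler from eliding the fill.
    volatile unsigned char sink = static_cast<volatile unsigned char*>(p)[bytes - 1];
    (void)sink;
    auto t1 = std::chrono::steady_clock::now();
    return std::chrono::duration<double>(t1 - t0).count();
}

// Allocate a block, fill it twice, and report both timings.
// Assumption: the first fill includes the page faults for fresh pages,
// while the second fill touches pages that are already resident.
TouchTimes measure_first_touch(std::size_t bytes) {
    TouchTimes t = {0.0, 0.0, false};
    if (bytes == 0) return t;
    unsigned char* p = static_cast<unsigned char*>(std::malloc(bytes));
    if (p == nullptr) return t;
    t.first_fill_s = timed_fill(p, bytes, 1);
    t.second_fill_s = timed_fill(p, bytes, 2);
    t.ok = true;
    std::free(p);
    return t;
}
```

Calling `measure_first_touch(8 * 1024 * 1024)` from several threads at once and comparing `first_fill_s` across thread counts would be one way to check whether the first-touch path is where the scaling is lost.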

*** EDITED

Collected Server 2012 R2 data on the same hardware. Server 2012 R2 is also worse than Win7, but still a lot better than Win10 CU.

*** EDITED

It happens in Server 2016 as well. I added the tag windows-server-2016.

*** EDITED

Using info from @Ext3h, I modified the benchmark to use VirtualAlloc and VirtualLock. I can confirm a significant improvement compared to when VirtualLock is not used. Overall, Win10 is still 30% to 40% slower than Win7 when both use VirtualAlloc and VirtualLock.
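For readers who want to try the same idea, here is a rough sketch of what an allocate-and-lock helper could look like. It is not the modified benchmark itself (that code is not shown here); `alloc_pinned` and `free_pinned` are hypothetical names. On non-Windows builds the sketch simply falls back to `malloc` so that it still compiles, which is an assumption made for illustration only.

```cpp
#include <cstddef>
#include <cstdlib>
#include <cstring>
#ifdef _WIN32
#include <Windows.h>
#endif

// Hypothetical helper: allocate `bytes` and try to pin them in physical memory.
// Returns nullptr if the allocation fails; *locked reports whether pinning worked.
void* alloc_pinned(std::size_t bytes, bool* locked) {
    *locked = false;
#ifdef _WIN32
    // Reserve and commit the region in one call.
    void* p = ::VirtualAlloc(nullptr, bytes, MEM_RESERVE | MEM_COMMIT, PAGE_READWRITE);
    if (p == nullptr) return nullptr;
    // VirtualLock can fail when the request exceeds what the process's
    // minimum working set allows; the memory is still usable in that case.
    *locked = ::VirtualLock(p, bytes) != 0;
    return p;
#else
    // Fallback for illustration only: plain malloc, nothing is pinned.
    return std::malloc(bytes);
#endif
}

// Release memory obtained from alloc_pinned.
void free_pinned(void* p, std::size_t bytes, bool locked) {
    if (p == nullptr) return;
#ifdef _WIN32
    if (locked) ::VirtualUnlock(p, bytes);
    // MEM_RELEASE requires a size of 0 and frees the whole reservation.
    ::VirtualFree(p, 0, MEM_RELEASE);
#else
    (void)bytes;
    (void)locked;
    std::free(p);
#endif
}
```

In the benchmark loop, `malloc(i)` and `free(p)` would be swapped for these helpers. Locking 100 chunks per task will likely exceed the default working set limits, so the process would probably need to raise them first with `SetProcessWorkingSetSize`; otherwise `VirtualLock` is expected to fail for most chunks.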