I would like to create a function to position a free-floating 2D raster image in space with the Irrlicht engine. The inspiration for this is the function rgl::show2d in the R package rgl. An example implementation in R can be found here.

The input data should be limited to the path to the image and a table with the four corner coordinates of the respective plot rectangle.

My first, fairly primitive and ultimately unsuccessful attempt at this with Irrlicht:

Create a cube:

ISceneNode * picturenode = scenemgr->addCubeSceneNode();

Flatten it along one axis:

picturenode->setScale(vector3df(1, 0.001, 1));

Add image as texture:

picturenode->setMaterialTexture(0, driver->getTexture("path/to/image.png"));

Place flattened cube at the center position of the four corner coordinates. I just calculate the mean coordinates on all three axes with a small function position_calc().

vector3df position = position_calc(rcdf);
picturenode->setPosition(position);
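For reference, a minimal sketch of what such a mean calculation could look like. To keep it compilable without Irrlicht or Rcpp, it uses a stand-in `Vec3` struct instead of `vector3df` and a plain `std::array` instead of the `rcdf` data frame; both, and the name `centroid`, are assumptions for illustration only and not my actual `position_calc()`.

```cpp
#include <array>

// Stand-in for irr::core::vector3df so the sketch compiles without Irrlicht.
struct Vec3 {
    double x, y, z;
};

// Mean of the four corner coordinates, i.e. the centre of the rectangle.
Vec3 centroid(const std::array<Vec3, 4>& corners) {
    Vec3 sum{0.0, 0.0, 0.0};
    for (const Vec3& c : corners) {
        sum.x += c.x;
        sum.y += c.y;
        sum.z += c.z;
    }
    return Vec3{sum.x / 4.0, sum.y / 4.0, sum.z / 4.0};
}
```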

Determine the object rotation by calculating the normal of the plane defined by the four corner coordinates, normalizing the result and trying to somehow translate the resulting vector to rotation angles.

vector3df normal = normal_calc(rcdf);
vector3df angles = (normal.normalize()).getSphericalCoordinateAngles();
picturenode->setRotation(angles);
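To make clear what I mean by the plane normal, here is a minimal sketch of one common way to compute it: the normalized cross product of two adjacent edges. The `Vec3` struct is a stand-in for `vector3df`, the function names are hypothetical, and the sketch assumes the corners are listed consecutively around the rectangle, so it is not necessarily identical to my `normal_calc()`.

```cpp
#include <array>
#include <cmath>

// Stand-in for irr::core::vector3df so the sketch compiles without Irrlicht.
struct Vec3 {
    double x, y, z;
};

Vec3 sub(const Vec3& a, const Vec3& b) {
    return Vec3{a.x - b.x, a.y - b.y, a.z - b.z};
}

Vec3 cross(const Vec3& a, const Vec3& b) {
    return Vec3{a.y * b.z - a.z * b.y,
                a.z * b.x - a.x * b.z,
                a.x * b.y - a.y * b.x};
}

// Unit normal of the plane through corners 0, 1 and 3.
// Assumption: corners are ordered around the rectangle, so 0->1 and 0->3
// are adjacent edges. Degenerate (zero-area) input is not handled here.
Vec3 plane_normal(const std::array<Vec3, 4>& c) {
    Vec3 n = cross(sub(c[1], c[0]), sub(c[3], c[0]));
    double len = std::sqrt(n.x * n.x + n.y * n.y + n.z * n.z);
    return Vec3{n.x / len, n.y / len, n.z / len};
}
```

The sign of the resulting normal depends on the order in which the corners are listed, so reversing the corner order flips it.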

This solution doesn't produce the expected result. The rotation calculation is wrong. With this approach I'm also not able to scale the image correctly to its corner coordinates.
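For the scaling part, the target size can at least be read off the corner table as two edge lengths. The following is only a sketch under assumptions: `Vec3` stands in for `vector3df`, `Size2` and `rectangle_size` are hypothetical names, and the corners are assumed to be listed consecutively around the rectangle.

```cpp
#include <array>
#include <cmath>

// Stand-in for irr::core::vector3df so the sketch compiles without Irrlicht.
struct Vec3 {
    double x, y, z;
};

// Hypothetical helper type: side lengths of the target rectangle.
struct Size2 {
    double width, height;
};

// Euclidean distance between two corners.
double edge_length(const Vec3& a, const Vec3& b) {
    double dx = b.x - a.x, dy = b.y - a.y, dz = b.z - a.z;
    return std::sqrt(dx * dx + dy * dy + dz * dz);
}

// Assumption: corners are ordered around the rectangle, so 0->1 and 1->2
// are the two different sides.
Size2 rectangle_size(const std::array<Vec3, 4>& c) {
    return Size2{edge_length(c[0], c[1]), edge_length(c[1], c[2])};
}
```

Dividing these lengths by the edge length of the unscaled node would presumably give the factors for setScale(). I believe addCubeSceneNode() defaults to a size of 10, but I have not verified that.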

How can I fix my workflow? Or is there a much better way to achieve this with Irrlicht that I'm not aware of?

Edit: Thanks to @spug I believe I'm almost there. I tried to implement his method 2, because quaternions are already available in Irrlicht. Here's what I came up with to calculate the rotation:

#include <Rcpp.h>
#include <irrlicht.h>
#include <math.h>

using namespace Rcpp;

core::vector3df rotation_calc(DataFrame rcdf) {

    NumericVector x = rcdf["x"];
    NumericVector y = rcdf["y"];
    NumericVector z = rcdf["z"];

    // Z-axis
    core::vector3df zaxis(0, 0, 1);

    // resulting image's normal
    core::vector3df normal = normal_calc(rcdf);

    // calculate the rotation from the original image's normal (i.e. the Z-axis)
    // to the resulting image's normal => quaternion P.
    core::quaternion p;
    p.rotationFromTo(zaxis, normal);

    // take the midpoint of AB from the diagram in method 1, and rotate it with
    // the quaternion P => vector U.
    core::vector3df MAB(0, 0.5, 0);
    core::quaternion m(MAB.X, MAB.Y, MAB.Z, 0);
    core::quaternion rot = p * m * p.makeInverse();
    core::vector3df u(rot.X, rot.Y, rot.Z);

    // calculate the rotation from U to the midpoint of DE => quaternion Q
    core::vector3df MDE(
        (x(0) + x(1)) / 2,
        (y(0) + y(1)) / 2,
        (z(0) + z(1)) / 2
    );
    core::quaternion q;
    q.rotationFromTo(u, MDE);

    // multiply in the order Q * P, and convert to Euler angles
    core::quaternion f = q * p;
    core::vector3df euler;
    f.toEuler(euler);

    // to degrees
    core::vector3df degrees(
        euler.X * (180.0 / M_PI),
        euler.Y * (180.0 / M_PI),
        euler.Z * (180.0 / M_PI)
    );

    Rcout << "degrees: " << degrees.X << ", " << degrees.Y << ", " << degrees.Z << std::endl;

    return degrees;
}

The result is almost correct, but the rotation on one axis is wrong. Is there a way to fix this or is my implementation inherently flawed?

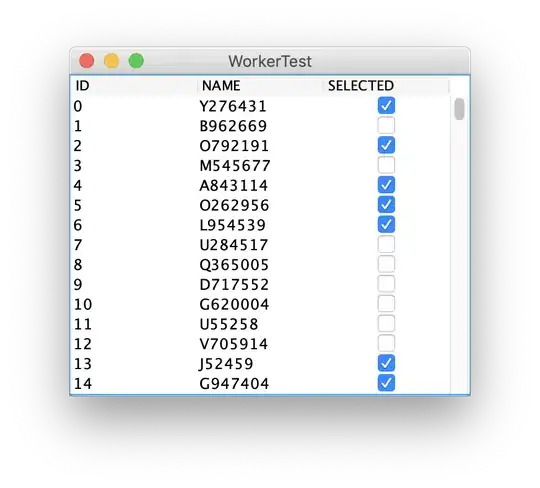

That's what the result looks like now. The points mark the expected corner points.