I've been trying to create a generalized Gradient Noise generator (which doesn't use the hash method to get gradients). The code is below:

    #include <array>
    #include <cmath>    // std::floor, cos, M_PI (M_PI is POSIX, not standard C++)
    #include <cstdint>
    #include <random>
    #include <glm/glm.hpp>

    class GradientNoise {
        std::uint64_t m_seed;
        std::uniform_int_distribution<std::uint8_t> distribution;
        const std::array<glm::vec2, 4> vector_choice = {glm::vec2(1.0, 1.0), glm::vec2(-1.0, 1.0), glm::vec2(1.0, -1.0),
                                                        glm::vec2(-1.0, -1.0)};

    public:
        GradientNoise(uint64_t seed) {
            m_seed = seed;
            distribution = std::uniform_int_distribution<std::uint8_t>(0, 3);
        }

        // 0 -> 1
        // just passes the value through, originally was perlin noise activation
        double nonLinearActivationFunction(double value) {
            //return value * value * value * (value * (value * 6.0 - 15.0) + 10.0);
            return value;
        }

        // 0 -> 1
        //cosine interpolation
        double interpolate(double a, double b, double t) {
            double mu2 = (1 - cos(t * M_PI)) / 2;
            return (a * (1 - mu2) + b * mu2);
        }

        double noise(double x, double y) {
            std::mt19937_64 rng;
            //first get the bottom left corner associated
            // with these coordinates
            int corner_x = std::floor(x);
            int corner_y = std::floor(y);

            // then get the respective distance from that corner
            double dist_x = x - corner_x;
            double dist_y = y - corner_y;

            double corner_0_contrib; // bottom left
            double corner_1_contrib; // top left
            double corner_2_contrib; // top right
            double corner_3_contrib; // bottom right

            std::uint64_t s1 = ((std::uint64_t(corner_x) << 32) + std::uint64_t(corner_y) + m_seed);
            std::uint64_t s2 = ((std::uint64_t(corner_x) << 32) + std::uint64_t(corner_y + 1) + m_seed);
            std::uint64_t s3 = ((std::uint64_t(corner_x + 1) << 32) + std::uint64_t(corner_y + 1) + m_seed);
            std::uint64_t s4 = ((std::uint64_t(corner_x + 1) << 32) + std::uint64_t(corner_y) + m_seed);

            // each xy pair turns into distance vector from respective corner, corner zero is our starting corner (bottom
            // left)
            rng.seed(s1);
            corner_0_contrib = glm::dot(vector_choice[distribution(rng)], {dist_x, dist_y});

            rng.seed(s2);
            corner_1_contrib = glm::dot(vector_choice[distribution(rng)], {dist_x, dist_y - 1});

            rng.seed(s3);
            corner_2_contrib = glm::dot(vector_choice[distribution(rng)], {dist_x - 1, dist_y - 1});

            rng.seed(s4);
            corner_3_contrib = glm::dot(vector_choice[distribution(rng)], {dist_x - 1, dist_y});

            double u = nonLinearActivationFunction(dist_x);
            double v = nonLinearActivationFunction(dist_y);

            double x_bottom = interpolate(corner_0_contrib, corner_3_contrib, u);
            double x_top = interpolate(corner_1_contrib, corner_2_contrib, u);
            double total_xy = interpolate(x_bottom, x_top, v);
            return total_xy;
        }
    };

I then generate an OpenGL texture to display it, like this:

    // temp_1 is a GradientNoise instance constructed elsewhere
    int width = 1024;
    int height = 1024;
    unsigned char *temp_texture = new unsigned char[width*height * 4];
    double octaves[5] = {2,4,8,16,32};

    for( int i = 0; i < height; i++){
        for(int j = 0; j < width; j++){
            double d_noise = 0;
            d_noise += temp_1.noise(j/octaves[0], i/octaves[0]);
            d_noise += temp_1.noise(j/octaves[1], i/octaves[1]);
            d_noise += temp_1.noise(j/octaves[2], i/octaves[2]);
            d_noise += temp_1.noise(j/octaves[3], i/octaves[3]);
            d_noise += temp_1.noise(j/octaves[4], i/octaves[4]);
            d_noise/=5;

            uint8_t noise = static_cast<uint8_t>(((d_noise * 128.0) + 128.0));
            temp_texture[j*4 + (i * width * 4) + 0] = (noise);
            temp_texture[j*4 + (i * width * 4) + 1] = (noise);
            temp_texture[j*4 + (i * width * 4) + 2] = (noise);
            temp_texture[j*4 + (i * width * 4) + 3] = (255);
        }
    }

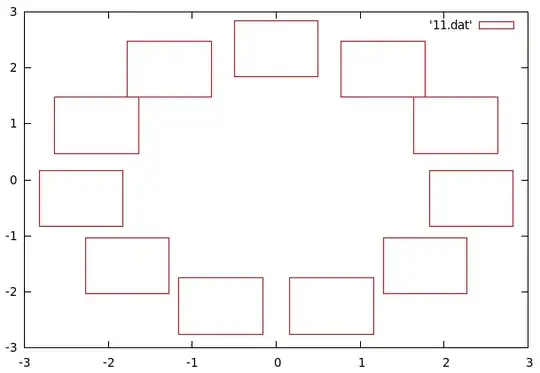

Which give good results:

But gprof is telling me that the Mersenne Twister is taking up 62.4% of my time, and that share grows with larger textures. No other individual function takes anywhere near as much time. While the Mersenne Twister is fast after initialization, the fact that I initialize it every time I use it seems to make it pretty slow.
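To make the reseeding cost concrete: `std::mt19937_64` holds 312 64-bit words of state, and every `seed()` call has to fill all of them before a single value can be drawn. The sketch below isolates the seed-then-draw-once pattern that `noise()` repeats four times per call. The helper name `first_output_after_seed` is hypothetical and only there for illustration.

```cpp
#include <cstdint>
#include <random>

// The engine's state is 312 words of 64 bits each; seeding rewrites all of it.
static_assert(std::mt19937_64::state_size == 312,
              "mt19937_64 is specified with a 312-word state");

// Hypothetical helper: seed a fresh engine and draw exactly one value,
// which is what noise() does for each of the four lattice corners.
inline std::uint64_t first_output_after_seed(std::uint64_t seed) {
    std::mt19937_64 rng(seed);  // initializes the whole state from one 64-bit seed
    return rng();               // only one output is ever used
}
```

With five octaves per pixel this pattern runs twenty times per pixel, which fits the profile dominated by the generator.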

This initialization is 100% required to make sure that the same x and y generate the same gradient at each integer point (so you need either a hash function or to seed the RNG each time).
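For comparison, here is a minimal sketch of the hash alternative mentioned above: a stateless mixing function applied to the integer lattice coordinates, so nothing needs to be seeded. It uses the SplitMix64 finalizer as the mixer, which is my choice for illustration and not something from the code above; `mix64` and `gradient_index` are hypothetical names.

```cpp
#include <cstdint>

// SplitMix64 finalizer: a stateless 64-bit bit mixer.
inline std::uint64_t mix64(std::uint64_t z) {
    z ^= z >> 30;
    z *= 0xBF58476D1CE4E5B9ULL;
    z ^= z >> 27;
    z *= 0x94D049BB133111EBULL;
    z ^= z >> 31;
    return z;
}

// Hypothetical helper: maps a lattice corner and a seed to an index 0..3.
// The same (cx, cy, seed) always yields the same index.
inline unsigned gradient_index(int cx, int cy, std::uint64_t seed) {
    std::uint64_t h = mix64(seed + 0x9E3779B97F4A7C15ULL);
    h = mix64(h ^ static_cast<std::uint32_t>(cx));  // fold in x
    h = mix64(h ^ static_cast<std::uint32_t>(cy));  // fold in y
    return static_cast<unsigned>(h >> 62);          // top two bits select the gradient
}
```

The returned index could be used in place of `distribution(rng)` when indexing `vector_choice`. Whether the visual quality matches the Mersenne Twister version would need to be checked by rendering.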

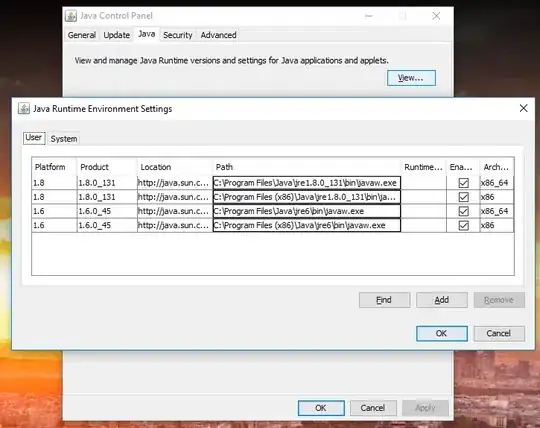

I attempted to change the PRNG to both the linear congruential generator and Xorshiftplus, and while both ran orders of magnitude faster, they gave odd results:

LCG (one time, then running 5 times before using)

Xorshiftplus
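One possible source of such artifacts, sketched under assumptions: if the LCG has a power-of-two modulus and the gradient index is taken from the low two bits of the first output, then the index depends only on the low two bits of the seed. With the seed packing used in `noise()`, those bits come from `corner_y + m_seed` alone, so `corner_x` has no effect at all. The multiplier and increment below are Knuth's MMIX constants, chosen for illustration; the names `lcg_step`, `packed_seed` and `low_bits_index` are hypothetical, and a generator with a different modulus would behave differently.

```cpp
#include <cstdint>

// One step of a 64-bit LCG with modulus 2^64 (Knuth's MMIX constants).
inline std::uint64_t lcg_step(std::uint64_t state) {
    return state * 6364136223846793005ULL + 1442695040888963407ULL;
}

// Same seed packing as in noise(): x in the high 32 bits, y and the seed added on top.
inline std::uint64_t packed_seed(int cx, int cy, std::uint64_t seed) {
    return (std::uint64_t(cx) << 32) + std::uint64_t(cy) + seed;
}

// Gradient index from the low two bits of the first output after seeding.
// Modulo 4, (a * s + c) only sees s modulo 4, so the high seed bits are ignored.
inline unsigned low_bits_index(std::uint64_t seed) {
    return static_cast<unsigned>(lcg_step(seed) & 3u);
}
```

Under these assumptions every lattice point in a row gets the same gradient and the pattern repeats every four rows, which would show up as regular stripes rather than noise.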

I've tried:

- Running the generator several times before using its output. This either slows execution down or simply produces different artifacts.

- Using the output of two consecutive runs after the initial seed to seed the PRNG again, then using the value afterwards. This made no difference to the result.

What is happening? What can I do to get faster results that are of the same quality as the Mersenne Twister?

OK BIG UPDATE:

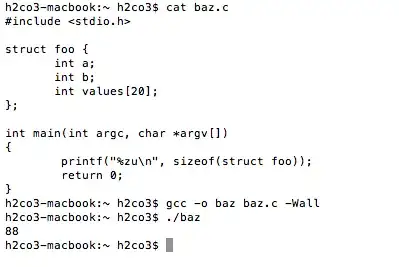

I don't know why this works. I know it has something to do with the prime number used, but after messing around a bit, it appears that the following works:

Step 1: Incorporate the x and y values as seeds separately (and combine some other offset value or additional seed value with them; this number should be a prime or otherwise non-trivial factor).

Step 2: Use those two seed results to seed the generator again (so, like geza said, the seeds I was making were bad).

Step 3: When getting the result, instead of taking it modulo the number of items (4), or applying & 3, first take the result modulo a prime number and then apply & 3. I'm not sure whether the prime being a Mersenne prime matters or not.

Here is the result with prime = 257 and xorshiftplus! (Note that I used 2048 by 2048 for this one; the others were 256 by 256.)
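The three steps above can be sketched roughly as follows. This is one reading of them, not the exact code that produced the image: the xorshift128+ variant (shift constants 23, 17, 26), the way a single value is expanded into the two-word state, and the offset prime `kOffsetPrime` are all assumptions made for illustration. Only the final `% 257` followed by `& 3` is taken directly from the description.

```cpp
#include <cstdint>

// A xorshift128+ variant with shift constants 23, 17, 26 (assumed here).
struct XorShift128Plus {
    std::uint64_t s0, s1;  // state; must not be all zero
    std::uint64_t next() {
        std::uint64_t x = s0;
        const std::uint64_t y = s1;
        s0 = y;
        x ^= x << 23;
        s1 = x ^ y ^ (x >> 17) ^ (y >> 26);
        return s1 + y;
    }
};

constexpr std::uint64_t kOffsetPrime = 1000003ULL;  // hypothetical offset prime
constexpr std::uint64_t kModPrime = 257ULL;         // prime used in the post

// Hypothetical helper: picks a gradient index 0..3 for a lattice corner.
inline unsigned gradient_index(int cx, int cy, std::uint64_t seed) {
    // Step 1: seed with x and with y separately, each combined with an offset.
    XorShift128Plus gx{static_cast<std::uint32_t>(cx) + seed, kOffsetPrime};
    XorShift128Plus gy{static_cast<std::uint32_t>(cy) + seed, kOffsetPrime};
    // Step 2: use the two results as the seed (state) of a new generator.
    XorShift128Plus g{gx.next(), gy.next()};
    // Step 3: reduce modulo a prime first, then keep the low two bits.
    return static_cast<unsigned>((g.next() % kModPrime) & 3u);
}
```

Reducing modulo 257 before masking makes the two selected bits depend on all 64 bits of the output, not just the lowest two. Note that the four indices are then very slightly non-uniform, since 257 values are folded onto 4.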