This part of the keras.io documentation is quite helpful:

LSTM Input Shape: 3D tensor with shape (batch_size, timesteps, input_dim)

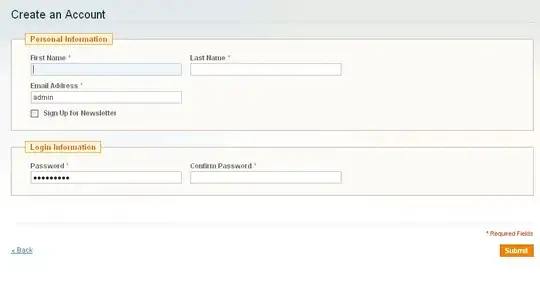

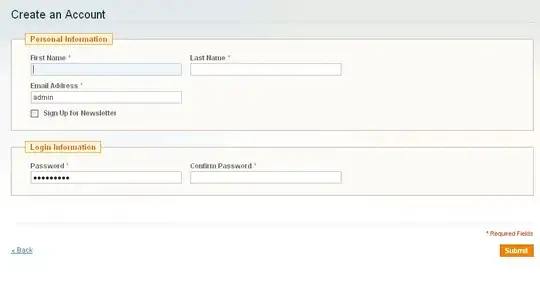

Here is also a picture that illustrates this:

I will also explain the parameters in your example:

model.add(LSTM(hidden_nodes, input_shape=(timesteps, input_dim)))

model.add(Dropout(dropout_value))

hidden_nodes = This is the number of neurons of the LSTM. If you have a higher number, the network gets more powerful. Howevery, the number of parameters to learn also rises. This means it needs more time to train the network.

timesteps = the number of timesteps you want to consider. E.g. if you want to classify a sentence, this would be the number of words in a sentence.

input_dim = the dimensions of your features/embeddings. E.g. a vector representation of the words in the sentence

dropout_value = To reduce overfitting, the dropout layer just randomly takes a portion of the possible network connections. This value is the percentage of the considered network connections per epoch/batch.

As you can see, there is no need to specify the batch_size. Keras will automatically take care of it.

optimizer = keras.optimizers.SGD(lr=learning_rate, decay=1e-6, momentum=0.9, nesterov=True)

learning_rate = Indicates, how much the weights are updated per batch.

decay = How much the learning_reate decrease over time.

momentum = The rate of momentum. A higher value helps to overcome local minima and thus speed up the learning process. Further explanation.

nesterov = If nesterov momentum should be used. Here is a good explanation.