I am reading data line by line from an Excel file (which is actually a comma-separated CSV file) that is sent to us by an external entity. One of the columns is the time, in 00.00 format. A Split call is used to read the different columns, but the file sometimes arrives with extra columns (extra commas between the elements), so the split elements are not always correct. Below is the code used to read and split the columns; these elements are then saved to the database.

```csharp
public void SaveFineDetails()
{
    List<string> erroredFines = new List<string>();

    try
    {
        log.Debug("Start : SaveFineDetails() - Saving Downloaded files fines..");

        if (!this.FileLines.Any())
        {
            log.Info(string.Format("End : SaveFineDetails() - DataFile was Empty"));
            return;
        }

        using (RAC_TrafficFinesContext db = new RAC_TrafficFinesContext())
        {
            // Remove the first (header) line and the last line of the file.
            this.FileLines.RemoveAt(0);
            this.FileLines.RemoveAt(FileLines.Count - 1);

            int itemCnt = 0;
            int errorCnt = 0;
            int duplicateCnt = 0;
            int count = 0;

            foreach (var line in this.FileLines)
            {
                count++;
                log.DebugFormat("Inserting {0} of {1} Fines..", count.ToString(), FileLines.Count.ToString());

                string[] bits = line.Split(',');
                int bitsLength = bits.Length;

                if (bitsLength == 9)
                {
                    string fineNumber = bits[0].Trim();
                    string vehicleRegistration = bits[1];
                    string offenceDateString = bits[2];
                    string offenceTimeString = bits[3];
                    int trafficDepartmentId = this.TrafficDepartments
                        .Where(tf => tf.DepartmentName.Trim().Equals(bits[4], StringComparison.InvariantCultureIgnoreCase))
                        .Select(tf => tf.DepartmentID)
                        .FirstOrDefault();
                    string proxy = bits[5];
                    decimal fineAmount = GetFineAmount(bits[6]);
                    DateTime fineCreatedDate = DateTime.Now;
                    DateTime offenceDate = GetOffenceDate(offenceDateString, offenceTimeString);
                    string username = Constants.CancomFTPServiceUser;
                    bool isAartoFine = bits[7] == "1";
                    string fineStatus = "Sent";

                    try
                    {
                        var dupCheck = db.GetTrafficFineByNumber(fineNumber);

                        if (dupCheck != null)
                        {
                            // The fine already exists: record it as a duplicate.
                            duplicateCnt++;
                            string ExportFileName = (base.FileName == null) ? string.Empty : base.FileName;
                            DateTime FileDate = DateTime.Now;
                            db.CreateDuplicateFine(ExportFileName, FileDate, fineNumber);
                        }
                        else
                        {
                            var adminFee = db.GetAdminFee();
                            db.UploadFTPFineData(fineNumber, fineAmount, vehicleRegistration, offenceDate, offenceDateString, offenceTimeString, trafficDepartmentId, proxy, false, "Imported", username, adminFee, isAartoFine, dupCheck != null, fineStatus);
                        }

                        itemCnt++;
                    }
                    catch
                    {
                        errorCnt++;
                    }
                }
                else
                {
                    // Unexpected number of elements: remember the line and skip it.
                    erroredFines.Add(line);
                    continue;
                }
            }

            // ... (the rest of the method is not shown)
```

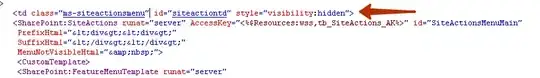

Now the problem is that this file doesn't always come with 9 elements as we expect. For example, in this image the lines are not the same (ignore the first line, it's the header).

On the first line, FM is supposed to be part of 36DXGP instead of being a separate element, which means the line now has an extra column. This brings us to the issue at hand, the time element: because of the extra comma, the value read as the time is now 20161216, so parsing the time element does not work at all. What I did was read the incorrect line and check its length; if the length is not 9, I add the line to the error list and continue.

But my continue keyword doesn't seem to work: execution gets into the else part and then goes back to read the very same error line.

I have checked the answers on Break vs Continue and they provide good examples of how continue works. I introduced the else because the format in those examples did not work for me (although the else did not make any difference either). Here is the sample data:
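The length check described above can be reproduced in isolation, away from the database calls. The following is only a minimal sketch: `LineFilter` and `SplitValidLines` are hypothetical names that do not exist in the original code, and the two input lines are taken from the sample data in this post. It prints how many elements `Split(',')` returns for each line, so the counts can be compared with the expected 9.

```csharp
using System;
using System.Collections.Generic;

public static class LineFilter
{
    // Hypothetical helper (not part of the original code): splits each line on
    // commas and keeps only the lines with exactly expectedCount elements.
    // Every other line is added to 'errored' and skipped.
    public static List<string[]> SplitValidLines(
        IEnumerable<string> lines, int expectedCount, List<string> errored)
    {
        var valid = new List<string[]>();
        foreach (var line in lines)
        {
            // Note: Split(',') keeps a trailing empty element when the line
            // ends with a comma, so "a,b," yields 3 elements, not 2.
            string[] bits = line.Split(',');
            if (bits.Length != expectedCount)
            {
                errored.Add(line);
                continue; // skip the rest of the body and move to the next line
            }
            valid.Add(bits);
        }
        return valid;
    }
}

public static class Program
{
    public static void Main()
    {
        var lines = new List<string>
        {
            "96/17259/801/035415,FM,36DXGP,20161216,17.39,city hall-cape town,Makofane,200,0,0",
            "MA/80/034808/730,CA230721,20170117,17.43,malmesbury,PATEL,200,0,0,"
        };

        // Show the element count Split returns for each sample line.
        foreach (var line in lines)
        {
            Console.WriteLine("{0} elements: {1}", line.Split(',').Length, line);
        }

        var errored = new List<string>();
        var valid = LineFilter.SplitValidLines(lines, 9, errored);
        Console.WriteLine("valid: {0}, errored: {1}", valid.Count, errored.Count);
    }
}
```

Running this on a couple of lines makes it easier to see whether a line is rejected because of an extra column or because of how the elements are counted.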

NOTE: the first line to be read starts with 96.

```
H,1789,,,,,,,,
96/17259/801/035415,FM,36DXGP,20161216,17.39,city hall-cape town,Makofane,200,0,0
MA/80/034808/730,CA230721,20170117,17.43,malmesbury,PATEL,200,0,0,
```

What am I doing wrong here?