I followed a Python OpenCV tutorial and tried to use HoughLinesP() to detect lines. Here is the code:

    import cv2
    import numpy as np

    imgLoc = r'...\lines.jpg'
    img = cv2.imread(imgLoc)
    gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    edges = cv2.Canny(gray, 50, 100)

    minLineLength = 20; maxLineGap = 5
    lines = cv2.HoughLinesP(edges, 1, np.pi/180, 100, \
                            minLineLength, maxLineGap)

    # each entry of lines has shape (1, 4): [[x1, y1, x2, y2]]
    for [[x1, y1, x2, y2]] in lines:
        cv2.line(img, (x1, y1), (x2, y2), (0, 0, 255), 2)

    cv2.imshow('line', img)
    cv2.waitKey(); cv2.destroyAllWindows()

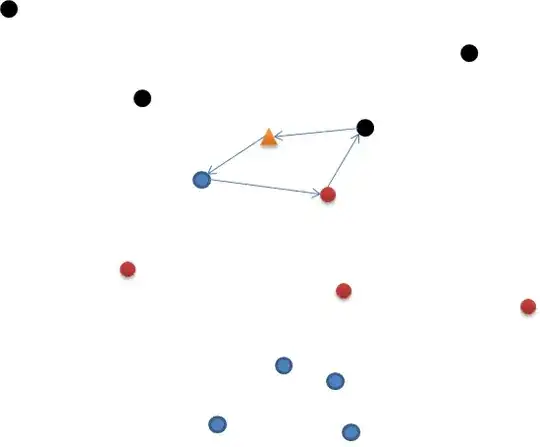

And here's what I get:

This looks very strange. There are so many lines in the sky, which I never expected, and I thought there would be more vertical lines on the building, but there aren't.

The tutorial doesn't include pictures showing what the result should look like, so I have no idea whether this is normal.

So, is there a problem in my code that leads to this weird image? If so, what can I change to make it detect more vertical lines?
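One thing worth checking in the first snippet: the OpenCV documentation gives the Python signature as `cv2.HoughLinesP(image, rho, theta, threshold[, lines[, minLineLength[, maxLineGap]]]) -> lines`, so the fifth positional slot is the optional output array `lines`, not `minLineLength`. The stub below is hypothetical (it is not OpenCV and does no detection); it only copies that documented parameter order, with `None` defaults of its own, to show where the two positional values end up.

```python
import math


def hough_lines_p_stub(image, rho, theta, threshold,
                       lines=None, minLineLength=None, maxLineGap=None):
    """Hypothetical stand-in with the same parameter order as the documented
    cv2.HoughLinesP signature; it only reports how the arguments bind."""
    return {"lines": lines, "minLineLength": minLineLength, "maxLineGap": maxLineGap}


minLineLength = 20; maxLineGap = 5

# Call style from the original code: everything positional
positional = hough_lines_p_stub("edges", 1, math.pi / 180, 100,
                                minLineLength, maxLineGap)
print(positional)  # {'lines': 20, 'minLineLength': 5, 'maxLineGap': None}

# Keyword style: each value lands on the intended parameter
keyword = hough_lines_p_stub("edges", 1, math.pi / 180, 100,
                             minLineLength=minLineLength, maxLineGap=maxLineGap)
print(keyword)     # {'lines': None, 'minLineLength': 20, 'maxLineGap': 5}
```

With positional arguments, 20 binds to `lines` and 5 to `minLineLength`, and `maxLineGap` is never set; passing the last two by keyword avoids that shift.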

==========Update_1============

I followed the suggestion in the comments, and now more lines are detected:

    #minLineLength = 20; maxLineGap = 5
    lines = cv2.HoughLinesP(edges, 1, np.pi/180, 100, \
                            minLineLength=10, maxLineGap=5)

But vertical lines are still missing.

And the Canny() result:

In the Canny() result there ARE vertical edges, so why do they disappear after HoughLinesP()? (Or am I just misreading the image?)
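Part of the answer may be the fourth argument, `100`, which is the accumulator `threshold`: a line is only reported once enough edge pixels vote for the same (rho, theta). The sketch below is a minimal standard (non-probabilistic) Hough accumulator in plain NumPy, so it only approximates the voting that `HoughLinesP` does internally; `hough_votes` and the synthetic 100x100 edge map are hypothetical, chosen for illustration.

```python
import numpy as np


def hough_votes(edges):
    """Standard Hough accumulator with 1-pixel rho steps and 1-degree theta steps.

    Hypothetical helper: a simplified model of the votes that cv2.HoughLinesP
    compares against its threshold, not OpenCV's implementation.
    """
    h, w = edges.shape
    thetas = np.deg2rad(np.arange(180))          # 0..179 degrees
    diag = int(np.ceil(np.hypot(h, w)))          # bound on |rho|
    acc = np.zeros((2 * diag + 1, len(thetas)), dtype=np.int64)
    ys, xs = np.nonzero(edges)                   # coordinates of edge pixels
    for j, t in enumerate(thetas):
        # each edge pixel votes for the line rho = x*cos(t) + y*sin(t)
        rhos = np.round(xs * np.cos(t) + ys * np.sin(t)).astype(np.int64) + diag
        acc[:, j] = np.bincount(rhos, minlength=2 * diag + 1)
    return acc, diag


# A 40-pixel vertical edge at x = 10 in a 100x100 edge map
edges = np.zeros((100, 100), dtype=np.uint8)
edges[20:60, 10] = 255

acc, diag = hough_votes(edges)
rho_idx, theta_idx = np.unravel_index(acc.argmax(), acc.shape)
print(acc.max(), rho_idx - diag, theta_idx)   # 40 10 0
print((acc >= 100).any())                     # False
```

The vertical edge collects all 40 of its votes at theta = 0, rho = 10, but 40 is below a threshold of 100, so in this model it never becomes a line even though it is clearly visible in the edge map. Lowering `threshold`, or joining broken edges so each line gets more pixels, are the usual knobs to try.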

==========Update_2============

I added a blur and increased minLineLength:

    gray = cv2.GaussianBlur(gray, (5, 5), 0)
    ...
    lines = cv2.HoughLinesP(edges, 1, np.pi/180, 100, \
                            minLineLength=50, maxLineGap=5)

The result is clearer, but there are still few vertical lines...
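The OpenCV documentation describes `minLineLength` as the minimum line length (shorter segments are rejected) and `maxLineGap` as the maximum allowed gap between points on the same line to link them. A simplified one-dimensional model of that interplay along a single pixel column, using hypothetical helpers `runs_with_gaps` and `long_runs` (not OpenCV's algorithm), suggests why raising `minLineLength` to 50 while keeping `maxLineGap=5` can drop vertical edges that Canny has broken into shorter pieces:

```python
def runs_with_gaps(ys, max_gap):
    """Group pixel rows into runs, joining pieces separated by at most
    max_gap missing pixels. Returns (start, end) pairs, inclusive."""
    runs = []
    for y in sorted(ys):
        if runs and y - runs[-1][1] - 1 <= max_gap:
            runs[-1][1] = y          # close enough: extend the current run
        else:
            runs.append([y, y])      # gap too large: start a new run
    return [(a, b) for a, b in runs]


def long_runs(ys, min_len, max_gap):
    """Keep only runs whose length (in pixels) is at least min_len."""
    return [(a, b) for a, b in runs_with_gaps(ys, max_gap) if b - a + 1 >= min_len]


# Hypothetical vertical edge: rows 0..29 and 38..69, with 8 rows missing between
ys = list(range(0, 30)) + list(range(38, 70))

print(long_runs(ys, min_len=50, max_gap=5))   # []
print(long_runs(ys, min_len=50, max_gap=10))  # [(0, 69)]
print(long_runs(ys, min_len=20, max_gap=5))   # [(0, 29), (38, 69)]
```

In this toy model, neither 30- or 32-pixel piece reaches 50 on its own, so the edge disappears unless the gap tolerance is large enough to join them or the minimum length is lowered.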

And I started to wonder where these slashes come from.
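If only the near-vertical segments are of interest, one option is to post-filter the array that `HoughLinesP` returns (shape N x 1 x 4, or `None` when nothing is found) by angle, which would also discard diagonal slashes. `keep_near_vertical` and its 10-degree tolerance are hypothetical choices for illustration:

```python
import numpy as np


def keep_near_vertical(lines, max_deg=10.0):
    """Return only segments within max_deg degrees of vertical.

    Hypothetical helper; expects the (N, 1, 4) array of [x1, y1, x2, y2]
    produced by cv2.HoughLinesP, or None.
    """
    if lines is None or len(lines) == 0:
        return np.empty((0, 1, 4), dtype=np.int32)
    segs = lines.reshape(-1, 4).astype(float)
    dx = np.abs(segs[:, 2] - segs[:, 0])
    dy = np.abs(segs[:, 3] - segs[:, 1])
    # angle between each segment and the vertical axis (0 = perfectly vertical)
    angle_from_vertical = np.degrees(np.arctan2(dx, dy))
    return lines[angle_from_vertical <= max_deg]


demo = np.array([[[10, 0, 10, 50]],   # vertical
                 [[0, 0, 50, 50]],    # 45-degree slash
                 [[5, 5, 7, 60]]],    # about 2 degrees off vertical
                dtype=np.int32)
print(keep_near_vertical(demo).reshape(-1, 4).tolist())
# [[10, 0, 10, 50], [5, 5, 7, 60]]
```

In the original script this would go between the `HoughLinesP` call and the drawing loop, e.g. `lines = keep_near_vertical(lines)`. It only removes segments; it cannot recover vertical lines that were never detected.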
