I run several Python subprocesses to migrate data to S3. I noticed that the CPU usage of these subprocesses often drops to 0% and stays there for more than a minute. This significantly slows down the migration process.

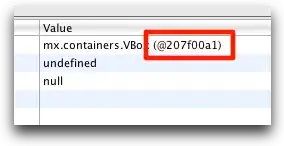

Here is the pic of the sub process:

The script does the following:

1. Query all tables from a database.

2. Spawn a subprocess for each table.

        for table in tables:
            print "Spawn process to process {0} table".format(table)
            process = multiprocessing.Process(name="Process " + table, target=target_def, args=(args, table))
            process.daemon = True
            process.start()
            processes.append(process)

        # Wait for every table's process to finish.
        for process in processes:
            process.join()

3. Query data from a database using Limit and Offset. I used the PyMySQL library to query the data.

4. Transform the returned data to another structure. `construct_structure_def()` is a function that transforms a row into another format.

        buffer_string = []
        for i, row_file in enumerate(row_files):
            if i == num_of_rows:
                buffer_string.append(json.dumps(construct_structure_def(row_file)))
            else:
                buffer_string.append(json.dumps(construct_structure_def(row_file)) + "\n")
        content = ''.join(buffer_string)

5. Write the transformed data into a file and compress it using gzip.

        with gzip.open(file_path, 'wb') as outfile:
            outfile.write(content)
        return file_name

6. Upload the file to S3.

7. Repeat steps 3 - 6 until there are no more rows to be fetched.
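To make the fetch-and-repeat part of the list above concrete (querying with Limit and Offset until no rows are left), here is a minimal sketch of that paging loop. It is not my actual code: `fetch_pages`, the `files` table, and its `id` column are hypothetical names, and an in-memory `sqlite3` database stands in for MySQL so the snippet runs on its own. With PyMySQL the placeholders would be `%s` instead of `?`.

```python
import sqlite3


def fetch_pages(connection, table, page_size):
    """Yield lists of rows from `table`, at most `page_size` rows at a time."""
    offset = 0
    while True:
        cursor = connection.cursor()
        # Table names cannot be bound as query parameters, so `table` must
        # come from a trusted source (here: the table list from the database).
        # Ordering by a hypothetical `id` column keeps the pages stable.
        cursor.execute(
            "SELECT * FROM {0} ORDER BY id LIMIT ? OFFSET ?".format(table),
            (page_size, offset),
        )
        rows = cursor.fetchall()
        cursor.close()
        if not rows:
            break  # no more rows to be fetched
        yield rows
        offset += page_size


# Stand-in database with five rows (assumption for illustration only).
connection = sqlite3.connect(":memory:")
connection.execute("CREATE TABLE files (id INTEGER PRIMARY KEY, name TEXT)")
connection.executemany(
    "INSERT INTO files (name) VALUES (?)",
    [("file-{0}".format(i),) for i in range(5)],
)

page_sizes = [len(page) for page in fetch_pages(connection, "files", 2)]
print(page_sizes)  # [2, 2, 1]
```

In the real script, each yielded page would go through the transform, gzip, and upload steps before the next page is requested.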

To speed things up, I create a subprocess for each table using `multiprocessing.Process` from the standard library.

I ran my script in a virtual machine. The specs are as follows:

- Processor: Intel(R) Xeon(R) CPU E5-2690 @ 2.90 GHz 2.90 GHz (2 processors)

- Virtual processors: 4

- Installed RAM: 32 GB

- OS: Windows Enterprise Edition.

I read in another post here that one of the main possibilities is a memory I/O limitation. So I tried running a single subprocess to test that theory, but to no avail.
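Another check I could run is to compare wall-clock time with CPU time for each step: if a step takes long on the clock but uses almost no CPU, the process is waiting on something (the database, the disk, or the network) rather than computing. Below is a minimal sketch of that measurement. It assumes Python 3.3+ for `time.perf_counter` and `time.process_time`, and `measure` and `waiting_step` are hypothetical helpers, with `time.sleep` standing in for a blocking query or upload.

```python
import time


def measure(step, *args, **kwargs):
    """Run step(*args, **kwargs); return (result, wall_seconds, cpu_seconds)."""
    wall_start = time.perf_counter()
    cpu_start = time.process_time()  # CPU time used by this process only
    result = step(*args, **kwargs)
    cpu_seconds = time.process_time() - cpu_start
    wall_seconds = time.perf_counter() - wall_start
    return result, wall_seconds, cpu_seconds


def waiting_step():
    # Stand-in for a blocking call such as a database query or an S3 upload.
    time.sleep(0.2)
    return "done"


result, wall_seconds, cpu_seconds = measure(waiting_step)
print("wall={0:.2f}s cpu={1:.2f}s".format(wall_seconds, cpu_seconds))
```

Wrapping the query, transform, gzip, and upload steps this way inside each subprocess should show which of them accounts for the idle periods.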

Any idea why this is happening? Let me know if you guys need more information.

Thank you in advance!