I want to convert a hexadecimal value to decimal, so I tried the code below. It works fine when the value is positive, but it returns the wrong result when the value should be negative.

let h2 = "0373"

let d4 = Int(h2, radix: 16)!

print(d4) // 883

let h3 = "FF88"

let d5 = Int(h3, radix: 16)!

print(d5) // 65416

When I pass FF88, it returns 65416, but the value I expect is -120 (FF88 read as a signed 16-bit number).
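As far as I can tell, -120 is what you get when FF88 is read as a 16-bit two's-complement value, whereas `Int(_:radix:)` parses the digits as a plain non-negative number. Here is a minimal sketch of the interpretation I am after, assuming the input is always at most 16 bits wide. The helper name `signed16(fromHex:)` is hypothetical and only for illustration:

```swift
// Hypothetical helper: parse the hex digits as an unsigned 16-bit pattern,
// then reinterpret the same bits as a signed 16-bit integer.
// Assumption: the value is meant to be 16 bits wide.
func signed16(fromHex hex: String) -> Int16? {
    // Returns nil for invalid digits or values that do not fit in 16 bits.
    guard let bits = UInt16(hex, radix: 16) else { return nil }
    return Int16(bitPattern: bits)
}

if let a = signed16(fromHex: "0373"), let b = signed16(fromHex: "FF88") {
    print(a) // 883
    print(b) // -120
}
```

A different width (8, 32, or 64 bits) would need the matching `UInt8`/`Int8`, `UInt32`/`Int32`, or `UInt64`/`Int64` pair, since the sign depends on which bit is treated as the top bit.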

Right now I am following the answer to "Convert between Decimal, Binary and Hexadecimal in Swift", but it does not always work.

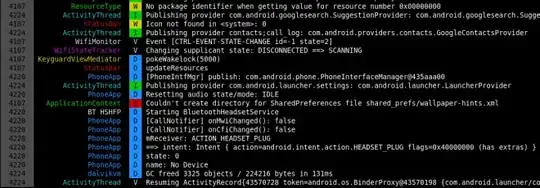

Please check this conversation online. Please see following image for more details of this conversation.

Is there another way to get a negative decimal value from a hexadecimal string?

Any help would be appreciated!