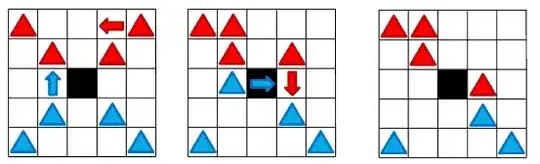

I made a small example for myself to play around with OpenCV's `warpPerspective`, but the output is not quite what I expected.

My input is a bar at a 45° angle. I want to transform it so that it is vertically aligned, i.e. at a 90° angle. That part works. However, I don't understand why everything around the actual destination points is black. Only the transformation matrix is passed to `warpPerspective`, not the destination points themselves. So I expected the output to be a bar at a 90° angle with most of the area around it still yellow instead of black. Where is my error in reasoning?
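For reference, OpenCV documents `warpPerspective` as an inverse mapping: each *output* pixel (x, y) is mapped back through the inverse of `M` and sampled from the source image. With the default `borderMode` (`cv2.BORDER_CONSTANT`) and `borderValue` (0), any output pixel whose source location falls outside the input image is set to black. The sketch below is a simplified NumPy model of that behaviour (nearest-neighbour sampling instead of `INTER_LINEAR`); `warp_perspective_nearest` is a hypothetical helper for illustration, not an OpenCV function.

```python
import numpy as np


def warp_perspective_nearest(src, M, dsize, border_value=0):
    """Simplified model of cv2.warpPerspective (hypothetical helper).

    Every output pixel (x, y) is mapped back through inv(M) and sampled
    from src with nearest-neighbour rounding. Output pixels whose source
    location lies outside src get border_value (black by default).
    """
    width, height = dsize
    M_inv = np.linalg.inv(M)
    ys, xs = np.mgrid[0:height, 0:width]
    # Homogeneous output coordinates, shape (3, height * width)
    dst_h = np.stack([xs.ravel(), ys.ravel(), np.ones(xs.size)]).astype(np.float64)
    src_h = M_inv @ dst_h
    src_x = np.rint(src_h[0] / src_h[2]).astype(int)
    src_y = np.rint(src_h[1] / src_h[2]).astype(int)
    inside = (src_x >= 0) & (src_x < src.shape[1]) & (src_y >= 0) & (src_y < src.shape[0])
    out = np.empty((height * width,) + src.shape[2:], dtype=src.dtype)
    out[...] = border_value  # pixels with no source pixel keep this value
    out[inside] = src[src_y[inside], src_x[inside]]
    return out.reshape((height, width) + src.shape[2:])


# A plain yellow RGB image, shrunk to half size by the transform
yellow = np.zeros((100, 100, 3), dtype=np.uint8)
yellow[...] = (255, 255, 0)
shrink = np.diag([0.5, 0.5, 1.0])
out = warp_perspective_nearest(yellow, shrink, (100, 100))
print(out[10, 10])  # yellow: maps back to (20, 20), inside the source
print(out[90, 90])  # black: maps back to (180, 180), outside the source
```

In the real call, the fill colour can be changed with the `borderValue` argument, e.g. `cv2.warpPerspective(img, M, image_size, borderValue=(255, 255, 0))` for yellow in the RGB image loaded by matplotlib. That only changes how uncovered output pixels are painted, not where the source pixels end up.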

```python
import cv2
import matplotlib.image as mpimg
import matplotlib.pyplot as plt
import numpy as np

# helper function
def showImage(img, title):
    plt.figure()
    plt.suptitle(title)
    plt.imshow(img)

# read and show test image
img = mpimg.imread('test_transform.jpg')
showImage(img, "input image")

# source points
top_left = [194, 430]
top_right = [521, 103]
bottom_right = [549, 131]
bottom_left = [222, 458]
pts = np.array([bottom_left, bottom_right, top_right, top_left])

# target points
y_off = 400  # y offset
top_left_dst = [top_left[0], top_left[1] - y_off]
top_right_dst = [top_left_dst[0] + 39.6, top_left_dst[1]]
bottom_right_dst = [top_right_dst[0], top_right_dst[1] + 462.4]
bottom_left_dst = [top_left_dst[0], bottom_right_dst[1]]
dst_pts = np.array([bottom_left_dst, bottom_right_dst, top_right_dst, top_left_dst])

# generate a preview to show where the warped bar would end up
preview = np.copy(img)
cv2.polylines(preview, np.int32([dst_pts]), True, (0, 0, 255), 5)
cv2.polylines(preview, np.int32([pts]), True, (255, 0, 255), 1)
showImage(preview, "preview")

# calculate transformation matrix
pts = np.float32(pts.tolist())
dst_pts = np.float32(dst_pts.tolist())
M = cv2.getPerspectiveTransform(pts, dst_pts)

# warp image and draw the resulting image
image_size = (img.shape[1], img.shape[0])
warped = cv2.warpPerspective(img, M, dsize=image_size, flags=cv2.INTER_LINEAR)
showImage(warped, "warped")

plt.show()
```
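`cv2.getPerspectiveTransform` maps `pts[i]` onto `dst_pts[i]` for each of the four points, so one quick check, using only the coordinates from the code above, is to compare the edge lengths of the source and destination quadrilaterals in that order. `edge_lengths` is a hypothetical helper written for this check.

```python
import numpy as np

# Corner coordinates copied from the code above
top_left, top_right = np.array([194, 430]), np.array([521, 103])
bottom_right, bottom_left = np.array([549, 131]), np.array([222, 458])

y_off = 400
top_left_dst = top_left - [0, y_off]
top_right_dst = top_left_dst + [39.6, 0]
bottom_right_dst = top_right_dst + [0, 462.4]
bottom_left_dst = np.array([top_left_dst[0], bottom_right_dst[1]])


def edge_lengths(quad):
    """Lengths of the four edges of a closed quadrilateral, in point order."""
    quad = np.asarray(quad, dtype=float)
    return np.linalg.norm(np.roll(quad, -1, axis=0) - quad, axis=1)


# Same point order as pts / dst_pts in the question
src = [bottom_left, bottom_right, top_right, top_left]
dst = [bottom_left_dst, bottom_right_dst, top_right_dst, top_left_dst]
names = ["BL->BR", "BR->TR", "TR->TL", "TL->BL"]
for name, s, d in zip(names, edge_lengths(src), edge_lengths(dst)):
    print(f"{name}: source {s:6.1f} px -> destination {d:6.1f} px")
```

A pure rotation keeps every edge length unchanged; where a source edge and its paired destination edge differ a lot, the transform is stretching or squeezing the image along that edge instead.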

The result using this code is:

Here's my input image test_transform.jpg:

And here is the same image with coordinates added:


By request, here is the transformation matrix:

```
[[ 6.05504680e-02 -6.05504680e-02  2.08289910e+02]
 [ 8.25714275e+00  8.25714275e+00 -5.12245707e+03]
 [ 2.16840434e-18  3.03576608e-18  1.00000000e+00]]
```
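To see where the printed matrix sends things, the points can be pushed through it by hand. OpenCV's `cv2.perspectiveTransform` performs the same mapping; plain NumPy is used here so the sketch runs without OpenCV, and `apply_homography` is a hypothetical helper. The size of `test_transform.jpg` is not given in the post, so the 640x480 frame below is an **assumption** for illustration only.

```python
import numpy as np

# Transformation matrix as printed above
M = np.array([
    [6.05504680e-02, -6.05504680e-02, 2.08289910e+02],
    [8.25714275e+00, 8.25714275e+00, -5.12245707e+03],
    [2.16840434e-18, 3.03576608e-18, 1.00000000e+00],
])


def apply_homography(M, points):
    """Map (x, y) points through a 3x3 homography, dividing by w."""
    pts = np.asarray(points, dtype=float)
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ M.T
    return homog[:, :2] / homog[:, 2:3]


# Bar corners (top_left, top_right, bottom_right, bottom_left) from the code
bar = [[194, 430], [521, 103], [549, 131], [222, 458]]
print(apply_homography(M, bar).round(1))

# Corners of the whole input image, ASSUMING a hypothetical 640x480 size
w, h = 640, 480
frame = [[0, 0], [w - 1, 0], [w - 1, h - 1], [0, h - 1]]
print(apply_homography(M, frame).round(1))
```

The bar corners land on the intended destination points. Because the third row is essentially `[0, 0, 1]`, the output x coordinate is about `0.0606 * (x - y) + 208.3`, so under the assumed frame size every input pixel ends up between roughly x = 179 and x = 247 in the output. Output pixels outside that narrow strip have no source pixel and receive the default constant (black) border.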