I don't know the exact meaning of 'iter_size' in the Caffe solver, even though I googled a lot. Every source says that 'iter_size' is a way to effectively increase the batch size without requiring extra GPU memory.

Can I understand it like this:

If I set batch_size = 10 and iter_size = 10, the behavior is the same as batch_size = 100.
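To make that reading concrete, here is a minimal sketch of the equivalence it implies. It assumes that 'iter_size' sums the gradients of 'iter_size' forward/backward passes and then divides by 'iter_size' before the update, and it uses a hypothetical toy model (1-D linear regression with a per-sample mean loss). The names `batch_grad` and `accumulated_grad` are illustrative, not Caffe APIs.

```python
# Hypothetical toy model: 1-D linear regression y ~ w * x with loss
# 0.5 * (w*x - y)^2 averaged over the batch.
# Assumption: iter_size accumulates gradients over iter_size passes and
# divides the sum by iter_size before the weight update.

def batch_grad(w, xs, ys):
    """Mean gradient of 0.5 * (w*x - y)^2 with respect to w over one batch."""
    return sum((w * x - y) * x for x, y in zip(xs, ys)) / len(xs)

def accumulated_grad(w, xs, ys, batch_size, iter_size):
    """Accumulate iter_size mini-batch gradients, then average them."""
    total = 0.0
    for i in range(iter_size):
        start = i * batch_size
        stop = start + batch_size
        total += batch_grad(w, xs[start:stop], ys[start:stop])
    return total / iter_size

xs = [float(i) for i in range(100)]
ys = [2.0 * x + 1.0 for x in xs]
w = 0.5

big = batch_grad(w, xs, ys)                # batch_size = 100, iter_size = 1
acc = accumulated_grad(w, xs, ys, 10, 10)  # batch_size = 10, iter_size = 10
print(abs(big - acc) < 1e-9)
```

In this sketch the two gradients agree up to floating-point rounding because every mini-batch has the same size, so the mean of the mini-batch means equals the mean over all 100 samples.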

But I ran two tests on this:

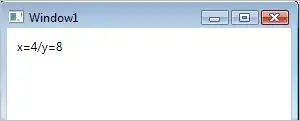

- Total samples = 6, batch_size = 6, iter_size = 1; the training samples and test samples are the same. The loss and accuracy graph is shown in image 1 above.

- Total samples = 6, batch_size = 1, iter_size = 6; the training samples and test samples are the same.
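For comparison, a minimal simulation of the two test configurations, again assuming that 'iter_size' only accumulates gradients and divides them by 'iter_size' before each update, and that no layer depends on batch statistics. The toy data, the learning rate `lr`, the step count, and the helpers `grad` and `train` are all hypothetical stand-ins for the real network.

```python
# Hypothetical toy setup mirroring the two tests: 6 samples, plain SGD with
# a fixed learning rate on 1-D linear regression (y ~ w * x).
# Assumption: iter_size accumulates gradients and averages them over
# iter_size before each weight update.

def grad(w, xs, ys):
    """Mean gradient of 0.5 * (w*x - y)^2 with respect to w."""
    return sum((w * x - y) * x for x, y in zip(xs, ys)) / len(xs)

def train(xs, ys, batch_size, iter_size, lr=0.01, steps=20):
    """Return the weight after each solver iteration."""
    w, pos, history = 0.0, 0, []
    n = len(xs)
    for _step in range(steps):
        acc = 0.0
        for _pass in range(iter_size):
            # Cycle through the dataset like a data layer reading its source.
            idx = [(pos + k) % n for k in range(batch_size)]
            pos = (pos + batch_size) % n
            acc += grad(w, [xs[i] for i in idx], [ys[i] for i in idx])
        w -= lr * acc / iter_size  # one update per solver iteration
        history.append(w)
    return history

xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
ys = [3.0 * x for x in xs]

full = train(xs, ys, batch_size=6, iter_size=1)   # test 1
accum = train(xs, ys, batch_size=1, iter_size=6)  # test 2
print(max(abs(a - b) for a, b in zip(full, accum)))
```

Since batch_size × iter_size = 6 equals the number of samples, each iteration in this sketch visits every sample exactly once, i.e. full-batch gradient descent, and the two weight trajectories agree up to rounding.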

From these two tests, you can see that the two setups behave very differently.

So I must have misunderstood the true meaning of 'iter_size'. But how can I make gradient descent behave as if it used all samples at once, rather than mini-batches?

Could anybody give me some help?