I'm attempting the Titanic Kaggle competition using TensorFlow.

My preprocessed training data looks like this:

data_x:

       PassengerId  Pclass  Sex   Age  SibSp  Parch  Ticket     Fare  Cabin  \
    1            2       1    1  38.0      1      0     500  71.2833    104
    2            3       3    1  26.0      0      0     334   7.9250      0
    3            4       1    1  35.0      1      0     650  53.1000    130
    4            5       3    0  35.0      0      0     638   8.0500      0

       Embarked

data_y:

    Survived
    0
    1
    1
    1
    0

Since the target is binary, a softmax function should be enough to predict whether a passenger survived, right?
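To make the binary case concrete, here is a minimal sketch in plain Python (no TensorFlow; the function names and the example logit are my own and purely illustrative). As far as I understand it, a softmax over two logits `[0, z]` should give the same probability for the second class as a sigmoid applied to the single logit `z`:

```python
import math

def sigmoid(z):
    """Logistic function: maps one logit to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def softmax(logits):
    """Softmax over a list of logits, shifted by the max for numerical stability."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

z = 1.5  # hypothetical logit for "survived"
p_sigmoid = sigmoid(z)
# Two classes: "did not survive" pinned at logit 0, "survived" at logit z.
p_softmax = softmax([0.0, z])[1]
print(p_sigmoid, p_softmax)
```

Note that this sketch uses two logits per example for the softmax, one per class.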

So here is how I build my model:

    import tensorflow as tf

    X = tf.placeholder(tf.float32, [None, data_x.shape[1]])
    Y_ = tf.placeholder(tf.float32, [None, 1])
    W = tf.Variable(tf.truncated_normal([10, 1]))
    b = tf.Variable(tf.zeros([1]))

    # Parameters
    learning_rate = 0.001

    # The model
    Y = tf.matmul(X, W) + b

    # Loss function
    entropy = tf.nn.softmax_cross_entropy_with_logits(labels=Y_, logits=Y)
    loss = tf.reduce_mean(entropy)  # computes the mean over examples in the batch
    optimizer = tf.train.GradientDescentOptimizer(learning_rate).minimize(loss)

    acc = tf.equal(tf.argmax(Y_, 1), tf.argmax(Y, 1))
    acc = tf.reduce_mean(tf.cast(acc, tf.float32))

    tf.summary.scalar('loss', loss)
    tf.summary.scalar('accuracy', acc)
    merged_summary = tf.summary.merge_all()

    init = tf.global_variables_initializer()

And finally, the training part:

    with tf.Session() as sess:
        sess.run(init)
        writer = tf.summary.FileWriter("./graphs", sess.graph)
        for i in range(1000):
            _, l, summary = sess.run([optimizer, loss, merged_summary],
                                     feed_dict={X: data_x, Y_: data_y})
            writer.add_summary(summary, i)
            if i % 100 == 0:
                print(i)
                print("loss = ", l)

But loss is equals to 0 since the first step...

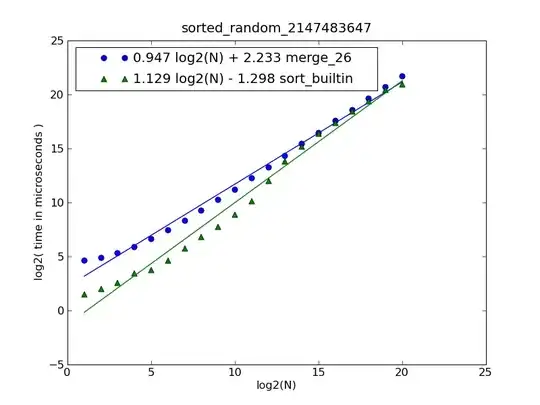

Here is Tensorboard visualization:

Any idea what's going on here?
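In case it helps narrow things down, here is a small plain-Python sketch (not my actual pipeline; the helper name and the logit values are made up) of what I believe softmax cross-entropy computes when there is only one logit per example, which mirrors the `[None, 1]` shape of `Y` in my graph:

```python
import math

def softmax_cross_entropy(labels, logits):
    """Per-example softmax cross-entropy: sum of -label * log(softmax(logit))."""
    m = max(logits)
    # log-sum-exp, shifted by the max for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(v - m) for v in logits))
    log_probs = [v - log_sum_exp for v in logits]
    return sum(-y * lp for y, lp in zip(labels, log_probs))

# One logit per example, as in my model. Labels and logits are illustrative.
examples = [([0.0], [-3.2]), ([1.0], [0.7]), ([1.0], [12.5])]
losses = [softmax_cross_entropy(labels, logits) for labels, logits in examples]
print(losses)

# For comparison: two logits per example with a one-hot label.
two_class_loss = softmax_cross_entropy([0.0, 1.0], [0.3, -0.2])
print(two_class_loss)
```

With a single logit the softmax has only one entry to normalize over, so the per-example loss in this sketch does not depend on the logit value or on the label. The zero loss may therefore come from the shape of `Y` rather than from the data, but I'm not sure that is the whole story.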