I'm building a web app to help students learn Maths.

The app needs to display Maths content that comes from LaTeX files. These LaTeX files render (beautifully) to PDF, which I can convert cleanly to SVG with pdf2svg.

The resulting image (SVG, PNG or whatever format) looks something like this:

     _______________________________________
    |                                       |
    | 1. Word1 word2 word3 word4            |
    | a. Word5 word6 word7                  |
    |                                       |
    |     ///////////Graph1///////////      |
    |                                       |
    | b. Word8 word9 word10                 |
    |                                       |
    | 2. Word11 word12 word13 word14        |
    |                                       |
    |_______________________________________|

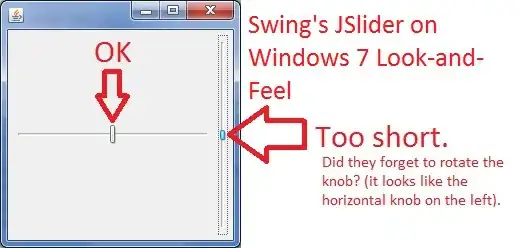

Real example:

The web app is meant to manipulate this content and insert new material into it, producing something like this:

     _______________________________________
    |                                       |
    | 1. Word1 word2                        | <-- New line break
    |_______________________________________|
    |                                       |
    | -> NewContent1                        |
    |_______________________________________|
    |                                       |
    | word3 word4                           |
    |_______________________________________|
    |                                       |
    | -> NewContent2                        |
    |_______________________________________|
    |                                       |
    | a. Word5 word6 word7                  |
    |_______________________________________|
    |                                       |
    |     ///////////Graph1///////////      |
    |_______________________________________|
    |                                       |
    | -> NewContent3                        |
    |_______________________________________|
    |                                       |
    | b. Word8 word9 word10                 |
    |_______________________________________|
    |                                       |
    | 2. Word11 word12 word13 word14        |
    |_______________________________________|

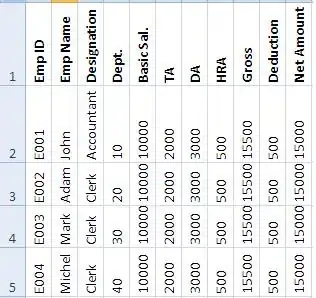

Example:

A single large image doesn't give me the flexibility for this kind of manipulation.

But if the image were broken down into smaller files, each holding a single word or a single graph, I could do it.
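To make the idea concrete, here's roughly how I picture the fragments being handled in the app once they exist. This is only a Python sketch under my own assumptions: the `Fragment` class, its `kind` values and the file names are hypothetical. Each word or graph becomes an entry in an ordered list, new content is spliced in between entries, and the result is rendered as HTML in which word images are inline `<img>` tags that wrap like text.

```python
import html
from dataclasses import dataclass


@dataclass
class Fragment:
    kind: str  # hypothetical kinds: "word", "graph" or "new"
    src: str   # image file for "word"/"graph", plain text for "new"


def insert_after(fragments, index, new_fragment):
    """Return a copy of `fragments` with `new_fragment` placed right after position `index`."""
    return fragments[: index + 1] + [new_fragment] + fragments[index + 1 :]


def to_html(fragments):
    """Render fragments in order as HTML elements, one element per source line."""
    parts = []
    for f in fragments:
        if f.kind == "word":
            # Inline <img> tags wrap like ordinary words when the line is full.
            parts.append(f'<img class="word" src="{html.escape(f.src)}">')
        elif f.kind == "graph":
            # Assumed CSS rule: .graph { display: block } so a graph gets its own line.
            parts.append(f'<img class="graph" src="{html.escape(f.src)}">')
        else:
            # <div> is block-level by default, so new content forces a line break.
            parts.append(f'<div class="new">{html.escape(f.src)}</div>')
    return "\n".join(parts)


# First line of the exercise, "1. Word1 word2 word3 word4", as hypothetical files.
line1 = [Fragment("word", f"word{i}.png") for i in range(1, 5)]
line1 = insert_after(line1, 1, Fragment("new", "NewContent1"))
print([f.src for f in line1])
# ['word1.png', 'word2.png', 'NewContent1', 'word3.png', 'word4.png']
```

Because inline images wrap like words, the browser would take care of the reflow, and the block-level `<div>` gives the "new line break" behaviour from the second diagram.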

What I think I need to do is detect the whitespace in the image and slice the image along it into multiple sub-images, something like this:

     _______________________________________
    |          |       |       |            |
    | 1. Word1 | word2 | word3 | word4      |
    |__________|_______|_______|____________|
    |             |       |                 |
    | a. Word5    | word6 | word7           |
    |_____________|_______|_________________|
    |                                       |
    |     ///////////Graph1///////////      |
    |_______________________________________|
    |             |       |                 |
    | b. Word8    | word9 | word10          |
    |_____________|_______|_________________|
    |           |        |        |         |
    | 2. Word11 | word12 | word13 | word14  |
    |___________|________|________|_________|
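Here's a rough sketch of the slicing idea using projection profiles (my assumption of how it could work, not tested on real pages): rows with no ink separate text lines, and within each line, sufficiently wide runs of blank columns separate words. Since it works on pixels, it assumes the page has already been rasterized (e.g. to PNG) and turned into a 2-D grid of booleans `ink[y][x]`, True for dark pixels; with Pillow that grid could come from `Image.open(...).convert("L")` plus a brightness threshold. The names `ink_runs`, `segment`, `min_word_gap` and `min_line_gap` are hypothetical, and the gap thresholds would need tuning to the font size and resolution.

```python
def ink_runs(flags, min_gap=1):
    """Return (start, end) index pairs (end exclusive) of consecutive True values,
    merging runs separated by fewer than `min_gap` False values."""
    runs = []
    start = None
    for i, has_ink in enumerate(flags):
        if has_ink and start is None:
            start = i  # a run of ink begins
        elif not has_ink and start is not None:
            runs.append([start, i])  # the run ended just before index i
            start = None
    if start is not None:
        runs.append([start, len(flags)])  # the run reaches the edge
    merged = []
    for run in runs:
        if merged and run[0] - merged[-1][1] < min_gap:
            merged[-1][1] = run[1]  # gap too small: treat as the same line/word
        else:
            merged.append(run)
    return [tuple(run) for run in merged]


def segment(ink, min_word_gap, min_line_gap=1):
    """Split a page into lines, then each line into words.

    ink[y][x] is True for a dark pixel. Returns one list per text line,
    each holding (x0, y0, x1, y1) boxes with exclusive right/bottom edges.
    """
    width = len(ink[0])
    # Horizontal projection profile: does each row contain any ink?
    row_has_ink = [any(row) for row in ink]
    lines = []
    for y0, y1 in ink_runs(row_has_ink, min_line_gap):
        # Vertical projection profile, restricted to this line's rows.
        col_has_ink = [any(ink[y][x] for y in range(y0, y1)) for x in range(width)]
        lines.append([(x0, y0, x1, y1) for x0, x1 in ink_runs(col_has_ink, min_word_gap)])
    return lines


# Tiny synthetic "page": '#' is ink, '.' is background.
page = [
    "##.#...##",
    ".........",
    "###...#.#",
]
ink = [[c == "#" for c in row] for row in page]
print(segment(ink, min_word_gap=2))
# [[(0, 0, 4, 1), (7, 0, 9, 1)], [(0, 2, 3, 3), (6, 2, 9, 3)]]
```

The boxes use the same (left, upper, right, lower) convention as Pillow's `Image.crop`, so each one could be cropped and saved as its own file. The weak spot is the thresholds: a gap wider than `min_word_gap` inside a word splits it, a narrower gap between words merges them, and a graph with blank columns inside it would be cut into pieces.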

I'm looking for a way to do this. What approach would you recommend?

Thank you for your help!