I am trying to extract information from a range of different receipts using a combination of OpenCV, Tesseract and Keras. The end result of the project is that I should be able to take a picture of a receipt using a phone and, from that picture, get the store name, payment type (card or cash), amount paid and change tendered.

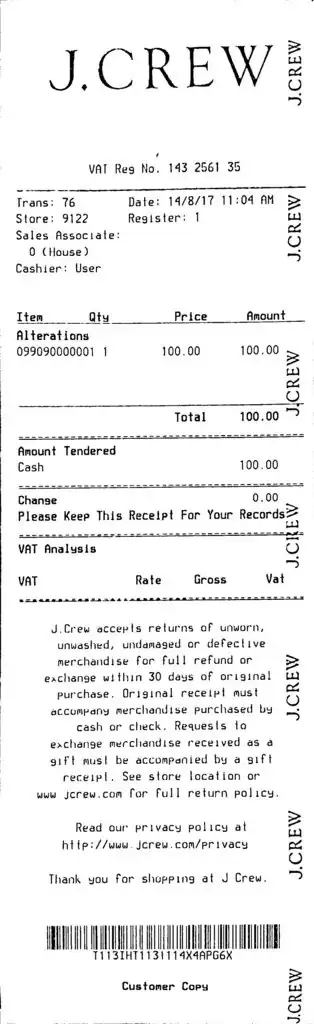

So far I have done a few different preprocessing steps on a series of different sample receipts using Opencv such as removing background, denoising and converting to a binary image and am left with an image such as the following:

I am then using Tesseract to perform OCR on the receipt and write the results out to a text file. I have managed to get the OCR to perform at an acceptable level, so I can currently take a picture of a receipt, run my program on it, and get a text file containing all the text on the receipt.

My problem is that I don't want all of the text on the receipt; I only want specific fields, namely the ones I listed above. I am unsure how to go about training a model that will extract the data I need.
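To make the target output concrete, here is a naive sketch of the kind of result I am after, using plain keyword matching and regular expressions on the OCR text instead of a trained model. Everything in it is made up for illustration: the sample text, the field names, the keyword list, and the assumption that the store name is the first non-empty line. Real receipts vary far too much for rules like these to be reliable, which is why I am asking about a learned approach.

```python
import re

# Made-up OCR output for illustration; real receipts vary a lot in layout.
SAMPLE_OCR_TEXT = """\
CORNER GROCERY
123 Main Street

MILK          1.50
BREAD         2.25
TOTAL         3.75
CASH          5.00
CHANGE        1.25
"""

# A monetary amount such as 3.75 or 3,75 (hypothetical pattern).
AMOUNT = re.compile(r"(\d+[.,]\d{2})")

# Hypothetical keywords that mark a card payment line.
CARD_KEYWORDS = ("CARD", "VISA", "MASTERCARD", "DEBIT", "CREDIT")


def extract_fields(ocr_text: str) -> dict:
    """Pull the four target fields out of raw OCR text with simple rules."""
    lines = [ln.strip() for ln in ocr_text.splitlines() if ln.strip()]
    fields = {
        # Assumption: the store name is the first non-empty line.
        "store_name": lines[0] if lines else None,
        "payment_type": None,
        "amount_paid": None,
        "change": None,
    }
    for line in lines:
        upper = line.upper()
        match = AMOUNT.search(line)
        amount = float(match.group(1).replace(",", ".")) if match else None
        if upper.startswith("CHANGE"):
            fields["change"] = amount
        elif upper.startswith("CASH"):
            fields["payment_type"] = "cash"
            fields["amount_paid"] = amount
        elif upper.startswith(CARD_KEYWORDS):
            fields["payment_type"] = "card"
            fields["amount_paid"] = amount
    return fields
```

Calling `extract_fields(SAMPLE_OCR_TEXT)` on the sample above gives a dictionary with the store name, `"cash"` as the payment type, and the two amounts as floats. That dictionary is the shape of output I would like the final system to produce.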

Am I correct in thinking that I should use Keras to segment and classify different sections of the image, and then write to file the text in sections that my model has classified as containing relevant data? Or is there a better solution for what I need to do?

Sorry if this is a stupid question; this is my first OpenCV/machine learning project and I'm pretty far out of my depth. Any constructive criticism would be much appreciated.