I work on sparse data problems that generally require computing a sparse model matrix. The resulting matrix contains roughly 95% zeros. The sparsity usually comes from factors that are one-hot encoded, and from interactions taken with those sparse columns.
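To illustrate where the zeros come from, here is a minimal sketch on toy data. The data frame `d`, the factors `f1` and `f2`, and the sample size are made up for illustration and are not part of my real problem. With two 26-level factors and their interaction, each row can have at most four non-zero entries out of 676 columns.

```r
library(Matrix)

set.seed(1)

# Hypothetical toy data: two 26-level factors (names are illustrative only)
d <- data.frame(
  f1 = factor(sample(LETTERS, 1000, replace = TRUE), levels = LETTERS),
  f2 = factor(sample(LETTERS, 1000, replace = TRUE), levels = LETTERS)
)

# Dummy-code both factors and their interaction into a sparse matrix
m <- sparse.model.matrix(~ f1 * f2, d)

# Fraction of entries that are exactly zero
zero_fraction <- 1 - nnzero(m) / prod(dim(m))
zero_fraction
```

Adding numeric columns, as in my real data, lowers this fraction somewhat, since those columns are dense.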

    library(Matrix)
    library(data.table)
    library(magrittr)

    n = 500000
    p = 10

    # Dense numeric predictors n1..n10
    x.matrix = matrix(rnorm(n * p), n, p)
    colnames(x.matrix) = sprintf("n%s", 1:p)

    # Three categorical predictors with 26 levels each
    x.categorical = data.table(
      c1 = sample(LETTERS, n, replace = TRUE),
      c2 = sample(LETTERS, n, replace = TRUE),
      c3 = sample(LETTERS, n, replace = TRUE)
    )

    # cBind() is no longer available in Matrix; combine the columns as a data.table instead
    x = cbind(as.data.table(x.matrix), x.categorical)

    myformula = "~ n1 + n2 + n3 + n4 + n5 + n6 + n7 + n8 + n9 + n10 +
      c1 + c1*c2 + c3 + n1:c1"

    # Dense and sparse versions of the same design matrix
    mm = model.matrix(myformula %>% as.formula, x)
    mm2 = sparse.model.matrix(myformula %>% as.formula, x)

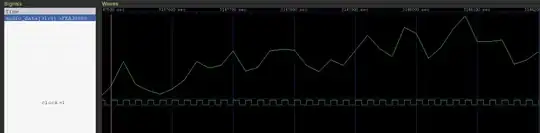

I have found that sparse.model.matrix performs worse on a sparse problem than model.matrix (which is normally used for dense problems). This shows up in RStudio's profiling tools.

Here sparse.model.matrix takes much more time than model.matrix and uses almost the same amount of memory. In some problems I have found sparse.model.matrix to be up to 10x slower than model.matrix when working with data that should be sparse.
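For anyone without the profiler, a rough way to reproduce the comparison is sketched below with system.time and object.size. It assumes a smaller, hypothetical sample size (`n_small`) and a reduced formula so that it runs quickly; the numbers will vary by machine and Matrix version. Note that object.size only reports the size of the returned object, not the peak memory used while building it, which is what the profiler measures.

```r
library(Matrix)

set.seed(1)

# Hypothetical smaller problem so the sketch runs quickly
n_small <- 5000
d <- data.frame(
  n1 = rnorm(n_small),
  c1 = factor(sample(LETTERS, n_small, replace = TRUE), levels = LETTERS),
  c2 = factor(sample(LETTERS, n_small, replace = TRUE), levels = LETTERS)
)
f <- ~ n1 + c1 * c2 + n1:c1

# Time the dense and sparse constructions of the same design matrix
t_dense  <- system.time(mm_dense  <- model.matrix(f, d))
t_sparse <- system.time(mm_sparse <- sparse.model.matrix(f, d))

# Elapsed wall-clock seconds for each approach
c(dense = t_dense[["elapsed"]], sparse = t_sparse[["elapsed"]])

# Size in bytes of the returned objects (not peak memory during construction)
size_dense  <- as.numeric(object.size(mm_dense))
size_sparse <- as.numeric(object.size(mm_sparse))
c(dense = size_dense, sparse = size_sparse)
```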

Are there better ways to create the sparse matrix? I have searched quite a lot and have not found any. Alternatively, I would be interested in pointers to other implementations, or tips on how to implement a smarter version of sparse.model.matrix from scratch, perhaps using Rcpp or data.table functions.

The source of the problem is in sparse2int, an internal function of the Matrix package, although I don't quite understand what it is for, and there are a few "FIXME"s still left in its code.