I am running an Airflow process with 400+ tasks on an early 2015 MacBook Pro with a 3.1 GHz Intel Core i7 processor and 16 GB of RAM.

The script I'm running looks pretty much like this, with the difference that I have my DAG defined as

    default_args = {
        'start_date': datetime.now(),
        'max_active_runs': 2
    }

to try to avoid firing too many tasks in parallel. What follows is a series of screenshots of my experience doing this. My questions here are:

- This operation generates an enormous number of Python processes. Is it necessary to define the entire task queue in RAM this way, or can Airflow take a "generate tasks as we go" approach that avoids firing up so many processes?

- I would have thought that `max_active_runs` controls how many processes are actually doing work at any given time. Reviewing my tasks, though, I'll have dozens of tasks occupying CPU resources while the rest are idle. This is really inefficient. How can I control this behavior?

Here are a few screenshots:

Things get off to a good enough start, although there are a lot more processes running in parallel than I anticipated:

Everything bogs down and there are a lot of idle processes. Things seem to grind to a halt:

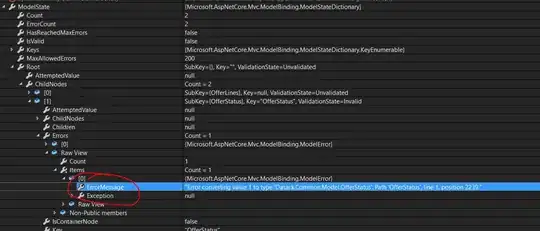

The terminal starts spitting out tons of error messages and many processes fail:

The process basically cycles through these phases until it finishes. The final task breakdown looks like this:

[2017-08-24 16:26:20,171] {jobs.py:2066} INFO - [backfill progress] | finished run 1 of 1 | tasks waiting: 0 | succeeded: 213 | kicked_off: 0 | failed: 200 | skipped: 0 | deadlocked: 0 | not ready: 0
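To put that final line in numbers, here is a minimal sketch that parses the counters out of it and works out the failure rate. The helper `parse_backfill_progress` is a hypothetical name of my own, not part of Airflow, and it assumes the progress line keeps the `name: count` fields separated by `|` exactly as shown above.

```python
import re

# Final progress line copied verbatim from the backfill output above.
LOG_LINE = (
    "[2017-08-24 16:26:20,171] {jobs.py:2066} INFO - [backfill progress] | "
    "finished run 1 of 1 | tasks waiting: 0 | succeeded: 213 | kicked_off: 0 | "
    "failed: 200 | skipped: 0 | deadlocked: 0 | not ready: 0"
)


def parse_backfill_progress(line):
    """Hypothetical helper: return the 'name: count' fields of a progress line."""
    # Keep only the part after the "[backfill progress]" marker.
    _, _, tail = line.partition("[backfill progress]")
    counts = {}
    for field in tail.split("|"):
        # Fields without a "name: number" shape (e.g. "finished run 1 of 1") are skipped.
        match = re.fullmatch(r"\s*([a-z_ ]+):\s*(\d+)\s*", field)
        if match:
            counts[match.group(1).strip()] = int(match.group(2))
    return counts


counts = parse_backfill_progress(LOG_LINE)
finished = counts["succeeded"] + counts["failed"]
failure_rate = counts["failed"] / finished
print(f"{counts['failed']} of {finished} tasks failed ({failure_rate:.0%})")
```

So of the 413 tasks that ran, 200 failed, which is roughly half of the whole run.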

Any thoughts?