I need to measure the duration of several queries, like this:

declare @dtStart1 as datetime;

declare @dtStart2 as datetime;

declare @dtStart3 as datetime;

declare @dtStart4 as datetime;

declare @dtStart5 as datetime;

declare @dtStart6 as datetime;

declare @dtStart7 as datetime;

declare @dtStart8 as datetime;

declare @dtStart9 as datetime;

declare @dtStart10 as datetime;

declare @duration1 as int;

declare @duration2 as int;

declare @duration3 as int;

declare @duration4 as int;

declare @duration5 as int;

declare @duration6 as int;

declare @duration7 as int;

declare @duration8 as int;

declare @duration9 as int;

declare @duration10 as int;

set @dtStart1 = (select getutcdate());

--query1

set @duration1 = (select datediff(millisecond, @dtStart1, GETUTCDATE()));

set @dtStart2 = (select getutcdate());

--query2

set @duration2 = (select datediff(millisecond, @dtStart2, GETUTCDATE()));

set @dtStart3 = (select getutcdate());

--query3

set @duration3 = (select datediff(millisecond, @dtStart3, GETUTCDATE()));

set @dtStart4 = (select getutcdate());

--query4

set @duration4 = (select datediff(millisecond, @dtStart4, GETUTCDATE()));

set @dtStart5 = (select getutcdate());

--query5

set @duration5 = (select datediff(millisecond, @dtStart5, GETUTCDATE()));

set @dtStart6 = (select getutcdate());

--query6

set @duration6 = (select datediff(millisecond, @dtStart6, GETUTCDATE()));

set @dtStart7 = (select getutcdate());

--query7

set @duration7 = (select datediff(millisecond, @dtStart7, GETUTCDATE()));

set @dtStart8 = (select getutcdate());

--query8

set @duration8 = (select datediff(millisecond, @dtStart8, GETUTCDATE()));

set @dtStart9 = (select getutcdate());

--query9

set @duration9 = (select datediff(millisecond, @dtStart9, GETUTCDATE()));

set @dtStart10 = (select getutcdate());

--query10

set @duration10 = (select datediff(millisecond, @dtStart10, GETUTCDATE()));

select @duration1 / 1000.0 as q1,

@duration2 / 1000.0 as q2,

@duration3 / 1000.0 as q3,

@duration4 / 1000.0 as q4,

@duration5 / 1000.0 as q5,

@duration6 / 1000.0 as q6,

@duration7 / 1000.0 as q7,

@duration8 / 1000.0 as q8,

@duration9 / 1000.0 as q9,

@duration10 / 1000.0 as q10;

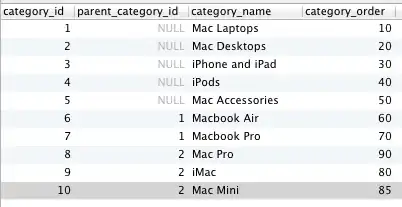

The problem is that besides the results I am actually interested in I get the results of the queries as well. I have tried using a cursor for each query, but it was instant even for long queries. Possibly it was just defined but not executed. I have tried setting FMTONLY to ON and then to OFF, but that was instant as well for long queries and has even shown the column names in the result. I would like to achieve executing the queries, getting the durations just like in the case the queries were normally unning, but not giving it back to my application server, where it will be problematic to handle the millions of records the queries might return, not to mention the huge waste of memory compared to the ideal result of getting just the one row that I am interested in, namely the results.