I am trying to blend out the seams in images I have just stitched together, using cv::detail::MultiBandBlender from OpenCV 3.2 (found in #include "opencv2/stitching/detail/blenders.hpp"). There is not much documentation, and even fewer code examples that I could find, but I did manage to find a good blog post that helped explain the steps.

When I run my code I get the following error: /opencv/modules/core/src/copy.cpp:1176: error: (-215) top >= 0 && bottom >= 0 && left >= 0 && right >= 0 in function copyMakeBorder

Here is the code for the blending (assume the stitching, the warpPerspective calls and the homographies are correct):

//Masks of the images to be combined, so you can get the resulting mask

Mat mask1(image1.size(), CV_8UC1, Scalar::all(255));

Mat mask2(image2.size(), CV_8UC1, Scalar::all(255));

Mat image1Updated, image2Updated;

//Warp the masks and the images to their new positions so they are all the same size to be overlaid and blended

warpPerspective(image1, image1Updated, (translation*homography), result.size(), INTER_LINEAR, BORDER_CONSTANT,(0));

warpPerspective(image2, image2Updated, translation, result.size(), INTER_LINEAR, BORDER_TRANSPARENT, (0));

warpPerspective(mask1, mask1, (translation*homography), result.size(), INTER_LINEAR, BORDER_CONSTANT,(0));

warpPerspective(mask2, mask2, translation, result.size(), INTER_LINEAR, BORDER_TRANSPARENT, (0));

//create blender

detail::MultiBandBlender blender(false, 5);

//feed images and the mask areas to blend

blender.feed(image1Updated, mask1, Point2f (0,0));

blender.feed(image2Updated, mask2, Point2f (0,0));

//prepare resulting size of image

blender.prepare(Rect(0, 0, result.size().width, result.size().height));

Mat result_s, result_mask;

//blend

blender.blend(result_s, result_mask);

The error occurs when I call blender.feed.

On a side note: when making the masks for the blender, should each mask cover the entire image, or just the area where the images overlap one another in the stitch?

Thanks in advance for any help.

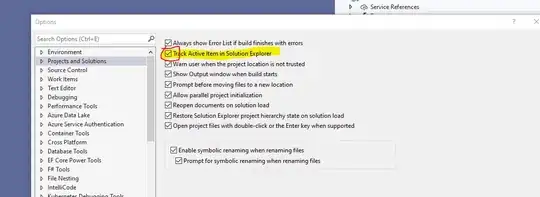

EDIT

I have it working, but I am now getting this blended result:

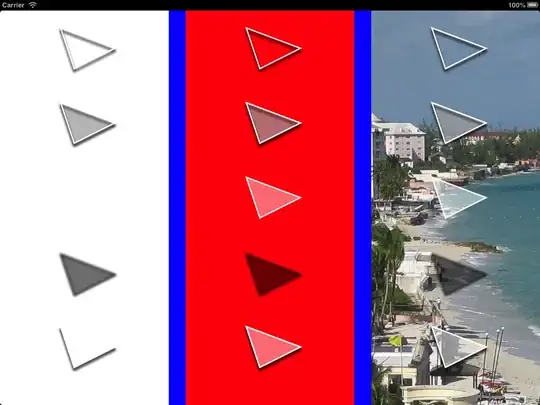

Here is the stitched image without blending for reference.

Any ideas on how to improve the blending?