An error trap trips after running a physics simulation for about 20 minutes. Realising this would be a pain to debug, I duplicated the relevant subroutine in a new project, and called it with hard-coded copies of the original input data at the moment the error occurred. But the error trap did not trip! After two days of tedious work to isolate the exact point where the behaviours of the two instances of the subroutine diverge, I’ve traced the problem to a very simple function for computing a Cross_Product.

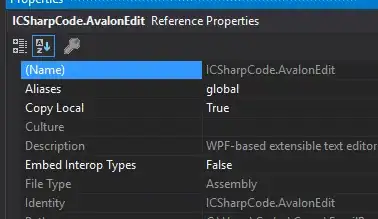

This Cross_Product function is identical in both programs. I have even checked the disassembly and made sure that the compiler is producing identical code. The function is also receiving identical input data in both cases. I have even explicitly checked the rounding mode inside the functions and they are identical. Yet, they are returning slightly different results. Specifically, the LSB is different for two out of three of the returned vector components. Even the debugger itself confirms that these two out of three variables are not equal to the expressions they’ve been explicitly assigned. (See screenshot below.)

In the original program, the debugger shows “true” in all of the last three lines of the watch list instead of only the last one.

I’m using Code::Blocks 13.12 with the GCC Compiler on XP, with an AMD Athlon 64 CPU. However, I recompiled and ran the test program in Code::Blocks 16.01 on a much more modern Windows 10 machine, with an Intel Core i5 CPU, and the results were identical.

Here is my minimal, complete, and verifiable code to reproduce the bizarre result which disagrees with my original program AND with the debugger itself (unfortunately, I can’t include the original physics program because it’s HUGE):

extern "C" {

__declspec(dllimport) int __stdcall IsDebuggerPresent(void);

__declspec(dllimport) void __stdcall DebugBreak(void);

}

struct POLY_Triplet {

double XYZ[3];

};

POLY_Triplet Cross_Product(POLY_Triplet Vector1, POLY_Triplet Vector2) {

POLY_Triplet Result;

Result.XYZ[0] = Vector1.XYZ[1] * Vector2.XYZ[2] - Vector1.XYZ[2] * Vector2.XYZ[1];

Result.XYZ[1] = Vector1.XYZ[2] * Vector2.XYZ[0] - Vector1.XYZ[0] * Vector2.XYZ[2];

Result.XYZ[2] = Vector1.XYZ[0] * Vector2.XYZ[1] - Vector1.XYZ[1] * Vector2.XYZ[0];

return Result;

}

int main() {

POLY_Triplet Triplet1;

POLY_Triplet Collision_Axis_Vector;

POLY_Triplet Boundary_normal;

*(long long int *)(&Collision_Axis_Vector.XYZ[0]) = 4594681439063077250;

*(long long int *)(&Collision_Axis_Vector.XYZ[1]) = 4603161398996347097;

*(long long int *)(&Collision_Axis_Vector.XYZ[2]) = 4605548671330989714;

*(long long int *)(&Triplet1.XYZ[0]) = -4626277815076045984;

*(long long int *)(&Triplet1.XYZ[1]) = -4637257536736295424;

*(long long int *)(&Triplet1.XYZ[2]) = 4589609575355367200;

if (IsDebuggerPresent()) {

DebugBreak();

}

Boundary_normal = Cross_Product(Collision_Axis_Vector, Triplet1);

return 0;

}

For convenience, here are the relevant lines for the watch list, as seen in the screenshot:

(Result.XYZ[0] == Vector1.XYZ[1] * Vector2.XYZ[2] - Vector1.XYZ[2] * Vector2.XYZ[1])

(Result.XYZ[1] == Vector1.XYZ[2] * Vector2.XYZ[0] - Vector1.XYZ[0] * Vector2.XYZ[2])

(Result.XYZ[2] == Vector1.XYZ[0] * Vector2.XYZ[1] - Vector1.XYZ[1] * Vector2.XYZ[0])

Can anyone please explain this behaviour?