I wrote a small function to convert an ARGB bitmap to grayscale. The conversion itself works fine, but the result is upside down, and I cannot find the mistake.

Code:

```cpp
#include <windows.h>
#include <cmath>   // only needed if ceil() is re-enabled

// Weighted sum of the three channels pointed to by r, g and b.
inline BYTE GrayScaleValue(BYTE* r, BYTE* g, BYTE* b)
{
    return /*ceil*/(0.21f * (*r) + 0.72f * (*g) + 0.07f * (*b));
}

extern "C" __declspec(dllexport) HBITMAP ConvertToMonocrom(HBITMAP bmp)
{
    INT x = 0, y = 0;
    BYTE Gray;   // BYTE rather than char, so values above 127 stay unsigned

    BITMAP bm;
    GetObject(bmp, sizeof(BITMAP), &bm);

    // bmBits points to the pixel data only when bmp is a DIB section;
    // the pixels are modified in place.
    BYTE* pImgByte = (BYTE*)bm.bmBits;
    INT iWidthBytes = bm.bmWidth * 4;   // 32 bits per pixel

    for (y = 0; y < bm.bmHeight; y++) {
        for (x = 0; x < bm.bmWidth; x++) {
            BYTE* px = &pImgByte[y * iWidthBytes + x * 4];
            // Bytes +3, +2 and +1 are passed as r, g and b.
            Gray = GrayScaleValue(&px[3], &px[2], &px[1]);

            // Write the gray value into all four bytes of the pixel.
            px[0] = Gray;
            px[1] = Gray;
            px[2] = Gray;
            px[3] = Gray;
        }
    }

    return CreateBitmapIndirect(&bm);
}
```
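A likely explanation for the upside-down result, offered as a hedged guess: a DIB section created with a positive height stores its scan lines bottom-up (the last row of the image comes first in `bmBits`), while `CreateBitmapIndirect` builds a device-dependent bitmap whose bit array is read top-down. If that is what happens here, reversing the row order before the call, or creating the result with `CreateDIBSection` and the same header, should restore the orientation. The sketch below only shows the row reversal on a plain byte buffer; `FlipRows` is a hypothetical helper, and the row stride (what `bm.bmWidthBytes` reports) is passed in explicitly.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical helper: reverses the order of the rows in an image buffer,
// turning a bottom-up layout into a top-down one (or vice versa).
// 'stride' is the number of bytes per row, including any padding.
void FlipRows(std::uint8_t* pixels, int height, std::size_t stride)
{
    assert(height >= 0);
    for (int top = 0, bottom = height - 1; top < bottom; ++top, --bottom) {
        std::uint8_t* a = pixels + static_cast<std::size_t>(top) * stride;
        std::uint8_t* b = pixels + static_cast<std::size_t>(bottom) * stride;
        // Swap the two whole rows byte by byte.
        std::swap_ranges(a, a + stride, b);
    }
}
```

In `ConvertToMonocrom` this would be a call along the lines of `FlipRows(pImgByte, bm.bmHeight, bm.bmWidthBytes)` just before `CreateBitmapIndirect`. Because `pImgByte` points into the source bitmap, that call flips the source as well, so working on a separate copy may be preferable.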

Here is the original picture:

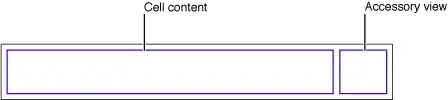

The picture after conversion when only R, G and B are written (alpha left unchanged):

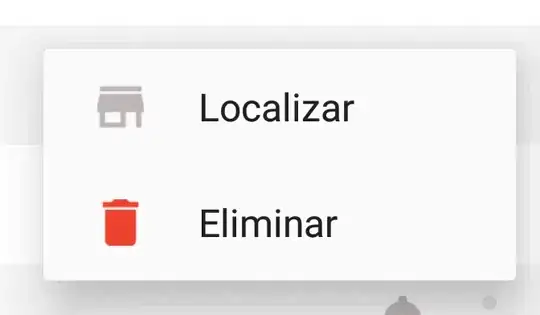
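Independently of the orientation, the byte offsets in the loop look suspicious. A 32-bpp DIB normally stores each pixel in the order of the `RGBQUAD` fields (`rgbBlue`, `rgbGreen`, `rgbRed`, `rgbReserved`), so red is byte +2 and alpha is byte +3. The loop passes bytes +3, +2 and +1 as r, g and b, which weights alpha as if it were red, red as if it were green, and leaves blue out entirely. A minimal sketch of the same conversion, assuming a B, G, R, A layout, a caller-supplied stride, rounding to nearest, and leaving the alpha byte untouched (`ToGrayBGRA` is a hypothetical name):

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical helper: converts a 32-bpp BGRA buffer to grayscale in place.
// Assumed layout per pixel: [0] = blue, [1] = green, [2] = red, [3] = alpha.
// 'stride' is the number of bytes per row (e.g. BITMAP::bmWidthBytes).
void ToGrayBGRA(std::uint8_t* pixels, int width, int height, std::size_t stride)
{
    assert(width >= 0 && height >= 0);
    assert(stride >= static_cast<std::size_t>(width) * 4);
    for (int y = 0; y < height; ++y) {
        std::uint8_t* row = pixels + static_cast<std::size_t>(y) * stride;
        for (int x = 0; x < width; ++x) {
            std::uint8_t* px = row + static_cast<std::size_t>(x) * 4;
            // Same weights as GrayScaleValue, applied to the R, G, B bytes.
            float lum = 0.21f * px[2] + 0.72f * px[1] + 0.07f * px[0];
            std::uint8_t gray = static_cast<std::uint8_t>(lum + 0.5f); // round to nearest
            px[0] = gray; // blue
            px[1] = gray; // green
            px[2] = gray; // red
            // px[3] (alpha) is left unchanged.
        }
    }
}
```

Passing the stride separately keeps the sketch correct for formats whose rows are padded, even though 32-bpp rows never need padding.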

The picture after conversion with the code exactly as shown (alpha value set as well):

I don't understand why the transparent areas come out black.
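On the black transparent areas, two things may contribute. The loop writes the gray value into byte +3, which in a B, G, R, A layout is the alpha channel, so the original transparency is replaced. And if the result is drawn with a function that ignores alpha (plain `BitBlt` copies the bytes as they are, whereas `AlphaBlend` with `AC_SRC_ALPHA` uses them), transparent pixels show whatever RGB values they store, which is often black. If the goal is simply an opaque grayscale image, one option is to blend the gray value over a background level using the pixel's alpha before discarding it. A minimal sketch, assuming straight (non-premultiplied) alpha; `CompositeOver` is a hypothetical helper:

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical helper: blends a gray value with straight (non-premultiplied)
// alpha over an opaque background gray level and returns an opaque gray value.
// result = (gray * alpha + background * (255 - alpha)) / 255, rounded.
std::uint8_t CompositeOver(std::uint8_t gray, std::uint8_t alpha, std::uint8_t background)
{
    unsigned sum = static_cast<unsigned>(gray) * alpha
                 + static_cast<unsigned>(background) * (255u - alpha);
    unsigned result = (sum + 127u) / 255u; // integer division, rounded to nearest
    assert(result <= 255u);
    return static_cast<std::uint8_t>(result);
}
```

Inside the pixel loop, the blended value would be written into bytes +0, +1 and +2 and byte +3 set to 255 (fully opaque), instead of writing the gray value into all four bytes. A background of 255 turns fully transparent areas white.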