I have a bare-metal application running on a tiny 16-bit microcontroller (ST10) with 10BASE-T Ethernet (CS8900) and a TCP/IP implementation based on the EasyWeb project.

The application's main job is to control an LED matrix display for public transport passenger information. It generates display frames at about 41 fps for a configurable display size of e.g. 160 × 32 pixels with 1-bit color depth (each LED is either on or off).

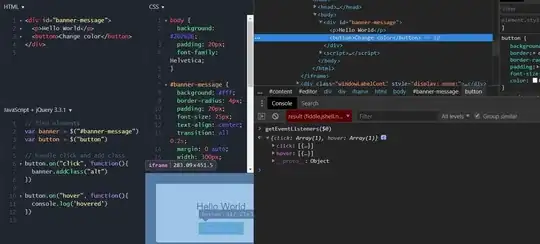

Example:

A tiny web server is implemented that provides the current frame buffer content (identical to the LED matrix display content) as PNG or BMP for download (both uncompressed, because of CPU load and the 1-bit color depth). So I can fetch snapshots with e.g.:

wget http://$IP/content.png

or

wget http://$IP/content.bmp

or put appropriate HTML code into the controller's index.html to view the image in a web browser.

I could also write HTML / JavaScript code to reload that picture periodically, e.g. every second, so that the user can see changes of the display content.

As the next step, I want to provide the display content as some kind of video stream and then either put appropriate HTML code into my index.html or just open that "streaming URI" with e.g. VLC.

As my framebuffer bitmaps are built uncompressed, I expect a constant bitrate.

I'm not sure what's the best way to start with this.

(1) Which video format is the easiest to generate if I already have a PNG for each frame (but I have that PNG only for a couple of milliseconds and cannot buffer it any longer)?

Note that my target system is very resource restricted in both memory and computing power.

(2) Which way for distribution over IP?

I already have some TCP sockets listening on port 80. I could stream the video over HTTP (after receiving a request) by using chunked transfer encoding, with each frame as its own chunk. (Maybe HTTP Live Streaming works like this?)

I've also read about things like SCTP, RTP and RTSP, but they look like more work to implement on my target. And as there is also the potential firewall drawback, I think I prefer HTTP for transport.

Please note that the application is coded in plain C, without an operating system or powerful libraries. Everything is coded from scratch, even the web server and the PNG generation.

Edit 2017-09-14, tryout with APNG

As suggested by Nominal Animal, I gave APNG a try.

I extended my code to produce appropriate fcTL and fdAT chunks for each frame and serve the result as bla.apng with HTTP Content-Type image/apng.

After downloading, bla.apng plays fine when opened in Firefox or Chrome, but not in Konqueror, VLC, Dragon Player or Gwenview.

Streaming the APNG works nicely, but only in Firefox. Chrome wants to download the file completely first.

So APNG might be a solution, but with the disadvantage that it currently only works in Firefox. After further testing I found that 32-bit versions of Firefox (55.0.2) crash after about 1 h of APNG playback, by which time about 100 MiB of data has been transferred. It looks like they don't discard old / obsolete frames.

A further restriction: as APNG requires a 32-bit "sequence number" in each animation chunk (I need 2 per frame), there is a limit on the maximum playback duration. But with my frame period of 24 ms this limit is at about 600 days, so I can live with it.

Note that the APNG MIME type was specified by mozilla.org as image/apng. In my tests, however, APNG is somewhat better supported when my HTTP server delivers it with Content-Type image/png instead. E.g. Chromium and Safari on iOS will then play my APNG files after download (but still not as a stream). Even the Wikipedia server delivers e.g. this beach ball APNG with Content-Type image/png.

Edit 2017-09-17, tryout with animated GIF

As also suggested by Nominal Animal, I now tried animated GIF.

Looks ok in some browsers and viewers after complete download (of e.g. 100 or 1000 frames).

Live streaming looks OK in Firefox, Chrome, Opera, Rekonq and Safari (on macOS Sierra). It does not work in Safari (on OSX El Capitan and iOS 10.3.1), Konqueror, VLC, Dragon Player or Gwenview. Safari, for example (tested on iOS 10.3.3 and OSX El Capitan), first wants to download the GIF completely before displaying / playing it.

Drawback of using GIF: for several reasons (e.g. CPU usage) I don't want to implement data compression for the generated frame pictures. For PNG, I use uncompressed data in the IDAT chunk, and for a 160x32 PNG with 1-bit color depth I get about 740 bytes per frame. But when using GIF without compression, especially for 1-bit black/white bitmaps, the pixel data blows up by a factor of 3-4.