I am using the JUCE framework to build a VST/AU audio plugin. The plugin accepts MIDI and renders it as audio samples by sending the MIDI messages to FluidSynth (a soundfont synthesizer) for processing.

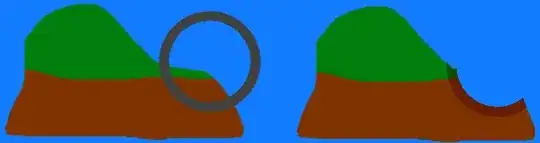

This is almost working. MIDI messages are sent to FluidSynth correctly. Indeed, if the audio plugin tells FluidSynth to render MIDI messages directly to its audio driver — using a sine wave soundfont — we achieve a perfect result:

But I shouldn't ask FluidSynth to render directly to the audio driver, because then the VST host won't receive any audio.

To do this properly, I need to implement a renderer. The VST host will ask me to render 512 samples of audio (44100÷512) times per second, i.e. roughly 86 times per second.

I tried rendering blocks of audio samples on-demand, and outputting those to the VST host's audio buffer, but this is the kind of waveform I got:

Here's the same file, with markers every 512 samples (i.e. every block of audio):

So, clearly I'm doing something wrong. I am not getting a continuous waveform. Discontinuities are very visible at the boundary between every pair of consecutive audio blocks that I process.
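One way to quantify the symptom, rather than judging it by eye, is to compare the sample-to-sample step across block boundaries with the steps inside blocks. The following is only a sketch: both function names are hypothetical, and it assumes the rendered output has been captured into a mono `std::vector<float>` with a known, fixed block size.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <vector>

// Largest absolute step between the last sample of one block and the
// first sample of the next block.
float largestBoundaryStep(const std::vector<float>& samples, std::size_t blockSize) {
    if (blockSize == 0) return 0.0f;
    float largest = 0.0f;
    for (std::size_t i = blockSize; i < samples.size(); i += blockSize)
        largest = std::max(largest, std::fabs(samples[i] - samples[i - 1]));
    return largest;
}

// Largest absolute step between neighbouring samples that lie in the same block.
float largestInteriorStep(const std::vector<float>& samples, std::size_t blockSize) {
    if (blockSize == 0) return 0.0f;
    float largest = 0.0f;
    for (std::size_t i = 1; i < samples.size(); ++i)
        if (i % blockSize != 0)  // skip the pairs that straddle a boundary
            largest = std::max(largest, std::fabs(samples[i] - samples[i - 1]));
    return largest;
}
```

For a continuous sine wave the two values should be of similar size. A boundary step that is much larger than the interior step matches the kind of discontinuity described above.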

Here's the most important part of my code: my implementation of JUCE's SynthesiserVoice.

    #include "SoundfontSynthVoice.h"
    #include "SoundfontSynthSound.h"

    SoundfontSynthVoice::SoundfontSynthVoice(const shared_ptr<fluid_synth_t> synth)
    : midiNoteNumber(0),
      synth(synth)
    {}

    bool SoundfontSynthVoice::canPlaySound(SynthesiserSound* sound) {
        return dynamic_cast<SoundfontSynthSound*> (sound) != nullptr;
    }

    void SoundfontSynthVoice::startNote(int midiNoteNumber, float velocity, SynthesiserSound* /*sound*/, int /*currentPitchWheelPosition*/) {
        this->midiNoteNumber = midiNoteNumber;
        fluid_synth_noteon(synth.get(), 0, midiNoteNumber, static_cast<int>(velocity * 127));
    }

    void SoundfontSynthVoice::stopNote (float /*velocity*/, bool /*allowTailOff*/) {
        clearCurrentNote();
        fluid_synth_noteoff(synth.get(), 0, this->midiNoteNumber);
    }

    void SoundfontSynthVoice::renderNextBlock (
        AudioBuffer<float>& outputBuffer,
        int startSample,
        int numSamples
        ) {
        fluid_synth_process(
            synth.get(),                            // fluid_synth_t *synth // FluidSynth instance
            numSamples,                             // int len              // Count of audio frames to synthesize
            1,                                      // int nin              // ignored
            nullptr,                                // float **in           // ignored
            outputBuffer.getNumChannels(),          // int nout             // Count of arrays in 'out'
            outputBuffer.getArrayOfWritePointers()  // float **out          // Array of arrays to store audio to
            );
    }

This is where each voice of the synthesizer is asked to produce the block of 512 audio samples.

The important function here is SynthesiserVoice::renderNextBlock(), wherein I ask fluid_synth_process() to produce a block of audio samples.

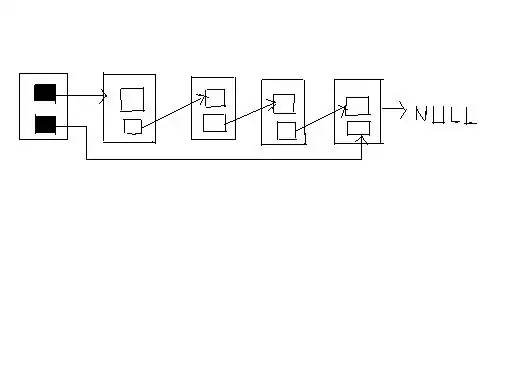

And here's the code that tells every voice to renderNextBlock(): my implementation of AudioProcessor.

AudioProcessor::processBlock() is the main loop of the audio plugin. Within it, Synthesiser::renderNextBlock() invokes every voice's SynthesiserVoice::renderNextBlock():

    void LazarusAudioProcessor::processBlock (
        AudioBuffer<float>& buffer,
        MidiBuffer& midiMessages
        ) {
        jassert (!isUsingDoublePrecision());
        const int numSamples = buffer.getNumSamples();

        // Now pass any incoming midi messages to our keyboard state object, and let it
        // add messages to the buffer if the user is clicking on the on-screen keys
        keyboardState.processNextMidiBuffer (midiMessages, 0, numSamples, true);

        // and now get our synth to process these midi events and generate its output.
        synth.renderNextBlock (
            buffer,        // AudioBuffer<float> &outputAudio
            midiMessages,  // const MidiBuffer &inputMidi
            0,             // int startSample
            numSamples     // int numSamples
            );

        // In case we have more outputs than inputs, we'll clear any output
        // channels that didn't contain input data, (because these aren't
        // guaranteed to be empty - they may contain garbage).
        for (int i = getTotalNumInputChannels(); i < getTotalNumOutputChannels(); ++i)
            buffer.clear (i, 0, numSamples);
    }

Is there something I'm misunderstanding here? Is there some timing subtlety required to make FluidSynth give me samples that are back-to-back with the previous block of samples? Maybe an offset that I need to pass in?

Maybe FluidSynth is stateful, and has its own clock that I need to gain control of?
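To illustrate what "stateful" would mean for block-by-block rendering, here is a toy sketch that does not use FluidSynth at all. `SineSource` and `renderBlocks` are hypothetical names. The sketch only shows that a generator that keeps its phase between calls produces back-to-back blocks, while one whose state is reset on every block restarts the waveform at each boundary.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Toy sine generator whose only state is its current phase.
struct SineSource {
    double phase = 0.0;
    double increment;

    SineSource(double frequencyHz, double sampleRate)
        : increment(2.0 * 3.14159265358979323846 * frequencyHz / sampleRate) {}

    // Writes numSamples samples and advances the phase, so the next call
    // continues where this one stopped.
    void render(float* out, std::size_t numSamples) {
        const double twoPi = 2.0 * 3.14159265358979323846;
        for (std::size_t i = 0; i < numSamples; ++i) {
            out[i] = static_cast<float>(std::sin(phase));
            phase += increment;
            if (phase >= twoPi) phase -= twoPi;
        }
    }
};

// Renders numBlocks blocks of a 440 Hz sine at 44100 Hz. With keepState == false
// the generator is recreated before every block, discarding its phase.
std::vector<float> renderBlocks(std::size_t numBlocks, std::size_t blockSize, bool keepState) {
    std::vector<float> out(numBlocks * blockSize);
    SineSource source(440.0, 44100.0);
    for (std::size_t b = 0; b < numBlocks; ++b) {
        if (!keepState) source = SineSource(440.0, 44100.0);
        source.render(out.data() + b * blockSize, blockSize);
    }
    return out;
}
```

Calling `renderBlocks(2, 512, true)` gives a smooth transition at sample 512, whereas `renderBlocks(2, 512, false)` jumps back to zero there, because 512 samples do not contain a whole number of 440 Hz cycles.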

Is my waveform symptomatic of some well-known problem?

Source code is here, in case I've omitted anything important. Question posted at the time of commit 95605.