![Output of code](../../images/3770878999.webp)

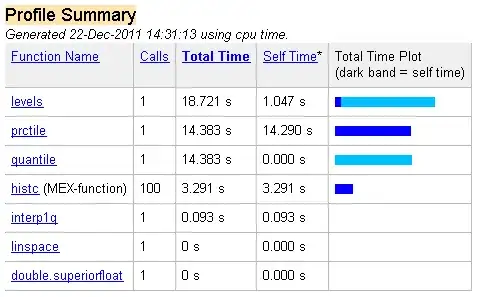

As shown in the image, keypoints are detected and matched on both images, but the output of `cv2.warpPerspective` is missing the first image on the left side, and I cannot figure out why.

    import numpy as np
    import imutils
    import cv2

    class Stitcher:
        def __init__(self):
            # determine if we are using OpenCV v3.X
            self.isv3 = imutils.is_cv3()

        def stitch(self, imageA, imageB, ratio=0.75, reprojThresh=10.0,
                   showMatches=False):
            # detect keypoints and extract local invariant descriptors
            # from both images
            #(imageB, imageA) = images
            (kpsA, featuresA) = self.detectAndDescribe(imageA)
            (kpsB, featuresB) = self.detectAndDescribe(imageB)

            # match features between the two images
            M = self.matchKeypoints(kpsA, kpsB,
                featuresA, featuresB, ratio, reprojThresh)

            # if the match is None, then there aren't enough matched
            # keypoints to create a panorama
            if M is None:
                return None

            # otherwise, apply a perspective warp to stitch the images
            # together
            (matches, H, status) = M
            #print(M)
            #print(matches)
            #print(H)
            #print(status)
            #cv2.imwrite('intermediate.jpg',matches)
            result = cv2.warpPerspective(imageA, H,
                (imageA.shape[1] + imageB.shape[1], imageA.shape[0]))
            result[0:imageB.shape[0], 0:imageB.shape[1]] = imageB
            #cv2.imshow('intermediate',result)

            # check to see if the keypoint matches should be visualized
            if showMatches:
                vis = self.drawMatches(imageA, imageB, kpsA, kpsB, matches,
                    status)

                # return a tuple of the stitched image and the
                # visualization
                return (result, vis)

            # return the stitched image
            return result

        def detectAndDescribe(self, image):
            # convert the image to grayscale
            gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

            # check to see if we are using OpenCV 3.X
            if self.isv3:
                # detect and extract features from the image
                # SIFT algorithm
                descriptor = cv2.xfeatures2d.SIFT_create()
                # SURF algorithm
                #descriptor = cv2.xfeatures2d.SURF_create()# 400 is the Hessian threshold, optimum values should be around 300-500
                # upright SURF: faster and can be used for panorama stitching, i.e. our case
                #descriptor.upright = True
                print(descriptor.descriptorSize())
                (kps, features) = descriptor.detectAndCompute(image, None)
                print(len(kps), features.shape)

            # otherwise, we are using OpenCV 2.4.X
            else:
                # detect keypoints in the image
                detector = cv2.FeatureDetector_create("SIFT")
                kps = detector.detect(gray)

                # extract features from the image
                extractor = cv2.DescriptorExtractor_create("SIFT")
                (kps, features) = extractor.compute(gray, kps)

            # convert the keypoints from KeyPoint objects to NumPy
            # arrays
            kps = np.float32([kp.pt for kp in kps])

            # return a tuple of keypoints and features
            #print("features",features)
            return (kps, features)

        def matchKeypoints(self, kpsA, kpsB, featuresA, featuresB,
                           ratio, reprojThresh):
            # compute the raw matches and initialize the list of actual
            # matches
            matcher = cv2.DescriptorMatcher_create("BruteForce")
            rawMatches = matcher.knnMatch(featuresA, featuresB, 2)
            matches = []

            # loop over the raw matches
            for m in rawMatches:
                # ensure the distance is within a certain ratio of each
                # other (i.e. Lowe's ratio test)
                if len(m) == 2 and m[0].distance < m[1].distance * ratio:
                    matches.append((m[0].trainIdx, m[0].queryIdx))
            print(len(matches))

            # computing a homography requires at least 4 matches
            if len(matches) > 4:
                # construct the two sets of points
                ptsA = np.float32([kpsA[i] for (_, i) in matches])
                ptsB = np.float32([kpsB[i] for (i, _) in matches])

                # compute the homography between the two sets of points
                (H, status) = cv2.findHomography(ptsA, ptsB, cv2.RANSAC,
                    reprojThresh)

                # return the matches along with the homography matrix
                # and status of each matched point
                return (matches, H, status)

            # otherwise, no homography could be computed
            return None

        def drawMatches(self, imageA, imageB, kpsA, kpsB, matches, status):
            # initialize the output visualization image
            (hA, wA) = imageA.shape[:2]
            (hB, wB) = imageB.shape[:2]
            vis = np.zeros((max(hA, hB), wA + wB, 3), dtype="uint8")
            vis[0:hA, 0:wA] = imageA
            vis[0:hB, wA:] = imageB

            # loop over the matches
            for ((trainIdx, queryIdx), s) in zip(matches, status):
                # only process the match if the keypoint was successfully
                # matched
                if s == 1:
                    # draw the match
                    ptA = (int(kpsA[queryIdx][0]), int(kpsA[queryIdx][1]))
                    ptB = (int(kpsB[trainIdx][0]) + wA, int(kpsB[trainIdx][1]))
                    cv2.line(vis, ptA, ptB, (0, 255, 0), 1)

            # return the visualization
            return vis

Above is the code used for keypoint detection and stitching.
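One possible explanation, offered as an assumption rather than a confirmed diagnosis: `H` maps `imageA` into `imageB`'s coordinate frame, and `cv2.warpPerspective` only keeps pixels that land at non-negative coordinates inside the output size. If `imageA` is the left image, most of it is warped to negative x and is discarded. A common workaround is to prepend a translation to `H` and enlarge the canvas. The sketch below computes that translation with NumPy only; the helper name `padded_homography` is hypothetical and not part of OpenCV or imutils.

```python
import numpy as np

def padded_homography(H, src_shape, dst_shape):
    """Return (H_shifted, (width, height), (tx, ty)) so neither image is clipped.

    Hypothetical helper: H maps the source image into the destination
    image's frame, as in the stitch() method above.
    """
    h_src, w_src = src_shape[:2]
    h_dst, w_dst = dst_shape[:2]

    # Corners of the source image in homogeneous coordinates, one per column.
    corners = np.array([[0, 0, 1], [w_src, 0, 1],
                        [w_src, h_src, 1], [0, h_src, 1]], dtype=np.float64).T
    warped = H @ corners
    warped = warped[:2] / warped[2]  # back to Cartesian coordinates (2 x 4)

    # Bounding box containing the warped source and the unwarped destination.
    x_min = min(warped[0].min(), 0.0)
    y_min = min(warped[1].min(), 0.0)
    x_max = max(warped[0].max(), float(w_dst))
    y_max = max(warped[1].max(), float(h_dst))

    # Translation that moves the top-left of the bounding box to (0, 0).
    tx, ty = int(np.ceil(-x_min)), int(np.ceil(-y_min))
    T = np.array([[1, 0, tx], [0, 1, ty], [0, 0, 1]], dtype=np.float64)

    width = int(np.ceil(x_max)) + tx
    height = int(np.ceil(y_max)) + ty
    return T @ H, (width, height), (tx, ty)
```

Under these assumptions, `stitch` would call `cv2.warpPerspective(imageA, H_shifted, (width, height))` and then paste `imageB` at `result[ty:ty + hB, tx:tx + wB]` instead of at the top-left corner.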

One more question: can someone help me with vertical image stitching, other than rotating the images and performing horizontal stitching?
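For the vertical case, one thing that stands out in the code above is that the output size passed to `cv2.warpPerspective` is hard-coded to grow in width only. A minimal sketch of choosing the canvas by direction follows; `canvas_size` is a hypothetical helper, and it assumes `imageB` is the top (or left) image and that `H` maps `imageA` to non-negative coordinates below (or to the right of) it.

```python
def canvas_size(shape_a, shape_b, direction="horizontal"):
    """Return (width, height) suitable for the dsize argument of a warp.

    Hypothetical helper: shape_a and shape_b are NumPy image shapes
    (height, width, ...). The canvas is an upper bound, not a tight fit.
    """
    h_a, w_a = shape_a[:2]
    h_b, w_b = shape_b[:2]
    if direction == "horizontal":
        # Images sit side by side: widths add up, height is the larger one.
        return (w_a + w_b, max(h_a, h_b))
    if direction == "vertical":
        # Images sit one above the other: heights add up instead.
        return (max(w_a, w_b), h_a + h_b)
    raise ValueError("direction must be 'horizontal' or 'vertical'")
```

The homography itself is direction-agnostic, so under this assumption only the canvas and the paste region would need to change, not the matching code.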

Thanks a lot!

I changed my code and used @Alexander's `padtransf.warpPerspectivePadded` function to perform the warping and blending. Can you help me get uniform lighting across the output image?
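One simple technique for evening out brightness between two stitched images is gain compensation: scale one image so that its mean intensity in the overlap region matches the other. The sketch below is a minimal version of that idea, not what `padtransf` does. It assumes both images have already been warped onto the same canvas (same shape, 3 channels, `uint8`) and that `overlap_mask` is a non-empty boolean mask of the pixels covered by both; the name `match_gain` is hypothetical.

```python
import numpy as np

def match_gain(image, reference, overlap_mask):
    """Scale `image` per channel so its overlap mean matches `reference`."""
    img = image.astype(np.float64)
    ref = reference.astype(np.float64)

    # Per-channel mean over the overlapping pixels only (shape: (3,)).
    img_mean = img[overlap_mask].mean(axis=0)
    ref_mean = ref[overlap_mask].mean(axis=0)

    # Guard against division by zero for completely dark channels.
    gain = ref_mean / np.maximum(img_mean, 1e-6)

    # Apply the gain to the whole image and return to 8-bit range.
    return np.clip(img * gain, 0, 255).astype(np.uint8)
```

A single global gain will not remove vignetting or gradual exposure changes; it only aligns the average brightness, which is often enough to make the seam less visible before blending.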