I have written an API using ASP.NET Web API and deployed it to Azure as an App Service. My controller is named TestController, and my action method looks something like this:

```csharp
[Route("Test/Get")]
public string Get()
{
    // Block the request thread for 10 seconds to simulate slow work.
    Thread.Sleep(10000);
    return "value";
}
```

So each request should wait 10 seconds before returning the string "value". I have also written another endpoint that reports how many thread-pool threads are busy executing requests. That action looks something like this:

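```csharp
[Route("Test/ThreadInfo")]
public ThreadPoolInfo GetThreadInfo()
{
    // Only worker-thread counts are reported; the IO completion counts are discarded.
    ThreadPool.GetAvailableThreads(out int availableWorker, out _);
    ThreadPool.GetMaxThreads(out int maxWorker, out _);

    return new ThreadPoolInfo
    {
        AvailableWorkerThreads = availableWorker,
        MaxWorkerThreads = maxWorker,
        // Busy worker threads = maximum minus currently available.
        OccupiedThreads = maxWorker - availableWorker
    };
}

public class ThreadPoolInfo
{
    public int AvailableWorkerThreads { get; set; }
    public int MaxWorkerThreads { get; set; }
    public int OccupiedThreads { get; set; }
}
```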
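To check whether the point where queuing starts lines up with the thread pool's configured minimum, the ThreadInfo endpoint could also report `ThreadPool.GetMinThreads`. The sketch below is a minimal, hypothetical extension: `ThreadPoolSnapshot` and `Capture` are illustrative names, not part of the original code.

```csharp
using System.Threading;

// Hypothetical snapshot type: the worker-thread counters the original
// endpoint reports, plus the pool minimum, which it does not report.
public sealed class ThreadPoolSnapshot
{
    public int MinWorkerThreads { get; set; }
    public int AvailableWorkerThreads { get; set; }
    public int MaxWorkerThreads { get; set; }
    public int OccupiedThreads { get; set; }

    public static ThreadPoolSnapshot Capture()
    {
        // IO completion-port counts are discarded, as in the original endpoint.
        ThreadPool.GetMinThreads(out int minWorker, out _);
        ThreadPool.GetAvailableThreads(out int availableWorker, out _);
        ThreadPool.GetMaxThreads(out int maxWorker, out _);

        return new ThreadPoolSnapshot
        {
            MinWorkerThreads = minWorker,
            AvailableWorkerThreads = availableWorker,
            MaxWorkerThreads = maxWorker,
            // Busy worker threads, computed the same way as the original endpoint.
            OccupiedThreads = maxWorker - availableWorker
        };
    }
}
```

The Test/ThreadInfo action could then simply return `ThreadPoolSnapshot.Capture()`. The three calls are not taken atomically, so under heavy load the numbers are an approximation.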

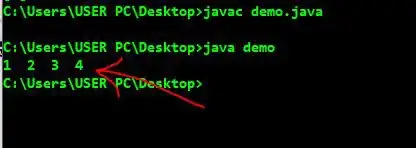

When we make 29 concurrent GET calls to the Test/Get endpoint, all of them succeed in about 11 seconds, so the server appears to execute all the requests concurrently. To check the thread status, a call to Test/ThreadInfo made right after the calls to Test/Get returns immediately (without waiting): `{ "AvailableWorkerThreads": 8161, "MaxWorkerThreads": 8191, "OccupiedThreads": 30 }`

It seems 29 threads are executing Test/Get requests and 1 thread is executing the Test/ThreadInfo request.

When I make 60 concurrent GET calls to Test/Get, they take almost 36 seconds to complete. A call to Test/ThreadInfo (which now takes some time to respond) returns `{ "AvailableWorkerThreads": 8161, "MaxWorkerThreads": 8191, "OccupiedThreads": 30 }`

If we increase the number of requests, the value of OccupiedThreads increases. For example, 1000 requests take 2 min 22 s to complete, and OccupiedThreads reaches 129.

It seems requests are getting queued after 30 concurrent calls, even though plenty of worker threads are available. The server gradually adds threads for concurrent execution, but not enough (129 threads for 1000 requests).
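The gradual growth described above can be reproduced outside ASP.NET. The following is a sketch under assumptions, not a diagnosis of App Service: it queues work items that block a pool thread with `Thread.Sleep` (as Test/Get does) and records the peak number that were running at the same time. `BlockingDemo` and `RunBlockingItems` are hypothetical names.

```csharp
using System;
using System.Diagnostics;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical reproduction of blocking work on the CLR thread pool.
public static class BlockingDemo
{
    // Queues `count` items that each block a pool thread for `sleepMs`
    // and returns the peak number of items running at once, plus the
    // total elapsed time.
    public static (int Peak, TimeSpan Elapsed) RunBlockingItems(int count, int sleepMs)
    {
        int running = 0;
        int peak = 0;
        var stopwatch = Stopwatch.StartNew();
        var tasks = new Task[count];

        for (int i = 0; i < count; i++)
        {
            tasks[i] = Task.Run(() =>
            {
                int now = Interlocked.Increment(ref running);

                // Lock-free maximum: retry until peak is at least `now`.
                int seen = Volatile.Read(ref peak);
                while (now > seen)
                {
                    int original = Interlocked.CompareExchange(ref peak, now, seen);
                    if (original == seen) break;
                    seen = original;
                }

                // Holds the pool thread for the whole wait, like Test/Get.
                Thread.Sleep(sleepMs);
                Interlocked.Decrement(ref running);
            });
        }

        Task.WaitAll(tasks);
        return (peak, stopwatch.Elapsed);
    }
}
```

Calling, for example, `RunBlockingItems(60, 2000)` on a machine and comparing `Peak` and `Elapsed` with `ThreadPool.GetMinThreads` on the same machine shows whether items beyond the minimum wait for the pool to add threads. The exact numbers depend on the runtime version and core count.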

Our services make a lot of IO calls (some are external API calls and some are database queries), so latency is also high. Because we make all IO calls asynchronously, the server can serve many requests concurrently, but we need more concurrency when the processors are doing real work. We are using an S2 service plan with one instance. Adding instances would increase concurrency, but we need more concurrency from a single instance.
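The point about async IO can be illustrated with a similar measurement. In this sketch `Task.Delay` stands in for an async database or HTTP call (an assumption); because the wait is awaited rather than blocked, no pool thread is held while it is pending, so many operations overlap without the pool having to grow. `AsyncDemo`, `RunAwaitingItemsAsync`, and `SimulatedIoAsync` are hypothetical names.

```csharp
using System;
using System.Diagnostics;
using System.Linq;
using System.Threading.Tasks;

// Hypothetical contrast to blocking work: awaited waits do not hold threads.
public static class AsyncDemo
{
    // Starts `count` simulated IO operations at once and returns the total
    // elapsed time once all of them have completed.
    public static async Task<TimeSpan> RunAwaitingItemsAsync(int count, int delayMs)
    {
        var stopwatch = Stopwatch.StartNew();
        var tasks = Enumerable.Range(0, count).Select(_ => SimulatedIoAsync(delayMs));
        await Task.WhenAll(tasks);
        return stopwatch.Elapsed;
    }

    // Stand-in for an async IO call: the thread is released during the delay
    // and a pool thread is used again only when the continuation runs.
    private static async Task<string> SimulatedIoAsync(int delayMs)
    {
        await Task.Delay(delayMs);
        return "value";
    }
}
```

In a Web API controller the equivalent of Test/Get would be an action such as `public async Task<string> Get() { await Task.Delay(10000); return "value"; }`, which does not occupy a worker thread during the wait.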

After reading some blogs and the IIS documentation, we found a setting called minFreeThreads: if the number of available threads in the thread pool falls below its value, IIS starts to queue requests. Is there anything like this in App Service? And is it really possible to get more concurrency from Azure App Service, or are we missing some configuration?
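One .NET-level setting that exists regardless of hosting is `ThreadPool.SetMinThreads`: the pool creates threads on demand until it reaches the configured minimum, and beyond that it may add threads more gradually. Whether raising it is appropriate for this App Service plan is not established here. The `ThreadPoolConfig` helper below is hypothetical, and in an ASP.NET Web API app such a call would typically run once at startup, for example from `Application_Start` in Global.asax.cs.

```csharp
using System.Threading;

// Hypothetical startup helper: raises the minimum number of worker threads
// while keeping the current minimum for IO completion threads.
public static class ThreadPoolConfig
{
    public static bool RaiseMinWorkerThreads(int minWorkerThreads)
    {
        ThreadPool.GetMinThreads(out int currentWorker, out int currentIo);
        if (minWorkerThreads <= currentWorker)
        {
            // Already at or above the requested minimum; nothing to change.
            return true;
        }

        // SetMinThreads returns false if the change is not accepted,
        // for example when the value exceeds the pool maximum.
        return ThreadPool.SetMinThreads(minWorkerThreads, currentIo);
    }
}
```

A higher minimum trades queuing for more threads (and more context switching), so the value would need to be measured against the actual workload rather than copied from an example.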