I would like to perform a border exchange in my MPI program. I have a structure that looks like this:

cell** local_petri_A;

local_petri_A = calloc(p_local_petri_x_dim,sizeof(*local_petri_A));

for(int i = 0; i < p_local_petri_x_dim ; i ++){

local_petri_A[i] = calloc(p_local_petri_y_dim,sizeof(**local_petri_A));

}

where cell is:

typedef struct {

int color;

int strength;

} cell;

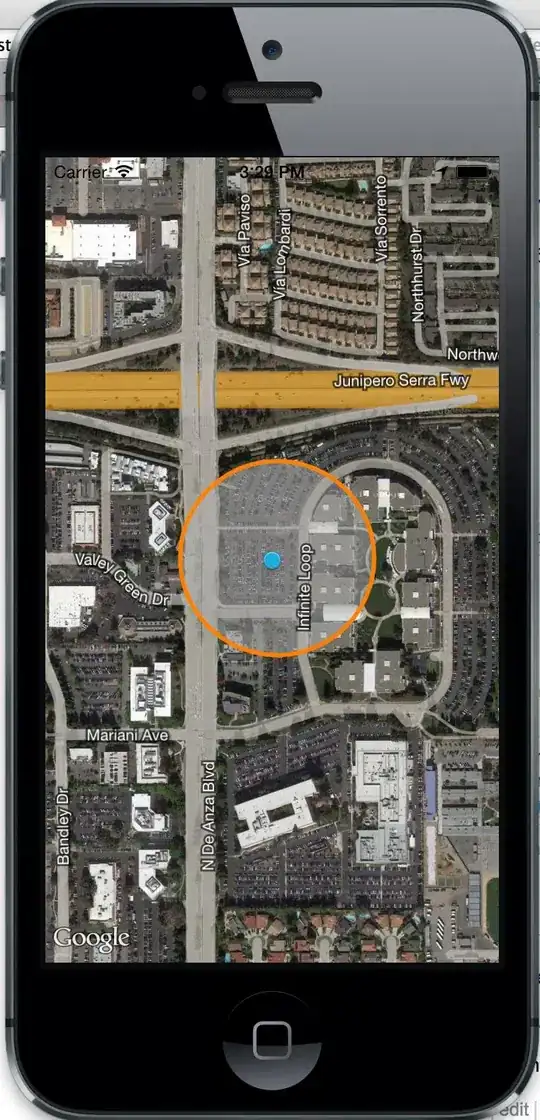

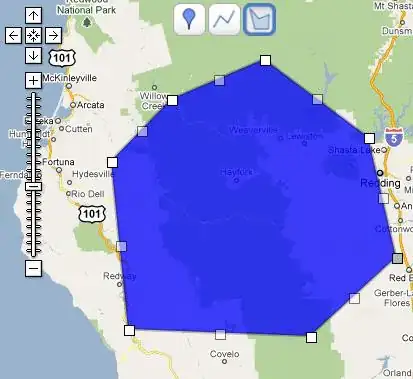

I would like to have an exchange scheme like on this picture :

So I put my program in a Cartesian topology and first defined MPI types to perform the exchange:

void create_types(){

////////////////////////////////

////////////////////////////////

// cell type

const int nitems=2;

int blocklengths[2] = {1,1};

MPI_Datatype types[2] = {MPI_INT, MPI_INT};

MPI_Aint offsets[2];

offsets[0] = offsetof(cell, color);

offsets[1] = offsetof(cell, strength);

MPI_Type_create_struct(nitems, blocklengths, offsets, types, &mpi_cell_t);

MPI_Type_commit(&mpi_cell_t);

////////////////////////////////

///////////////////////////////

MPI_Type_vector ( x_inside , 1 , 1 , mpi_cell_t , & border_row_t );

MPI_Type_commit ( & border_row_t );

/*we put the stride to x_dim to get only one column*/

MPI_Type_vector ( y_inside , 1 , p_local_petri_x_dim , MPI_DOUBLE , & border_col_t );

MPI_Type_commit ( & border_col_t );

}

Then I finally try to perform the exchange with the south and north neighbours:

/*send to the north receive from the south */

MPI_Sendrecv ( & local_petri_A[0][1] , 1 , border_row_t , p_north , TAG_EXCHANGE ,& local_petri_A [0][ p_local_petri_y_dim -1] , 1 , border_row_t , p_south , TAG_EXCHANGE ,cart_comm , MPI_STATUS_IGNORE );

/*send to the south receive from the north */

MPI_Sendrecv ( & local_petri_A[0][ p_local_petri_y_dim -2] , 1 , border_row_t , p_south , TAG_EXCHANGE ,& local_petri_A [0][0] , 1 , border_row_t , p_north , TAG_EXCHANGE ,cart_comm , MPI_STATUS_IGNORE );

NB: in this code, x_inside and y_inside are the "inside" dimensions of the array (without the ghost cells), and p_local_petri_x_dim and p_local_petri_y_dim are the dimensions of the full array.

Is there something that I've done wrong?

Thank you in advance for your help.
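
One thing that may be worth checking, offered as a sketch rather than a confirmed diagnosis: the allocation above calls calloc once per row, so the rows are independent blocks that are not guaranteed to be adjacent in memory, while a type built with MPI_Type_vector describes elements at fixed strides from a single base address. The following minimal sketch, which uses plain C only and no MPI calls, allocates the same cell grid as one contiguous block while keeping the local_petri_A[i][j] indexing. The helpers alloc_petri, petri_offset and free_petri are hypothetical names introduced here for illustration; they are not part of the original program.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

typedef struct {
    int color;
    int strength;
} cell;

/* Hypothetical helper (not in the original code): allocates an
 * x_dim by y_dim grid as ONE contiguous, zero-initialised block plus
 * a table of row pointers, so grid[i][j] still works but every cell
 * is reachable at a fixed offset from grid[0]. */
cell **alloc_petri(int x_dim, int y_dim) {
    assert(x_dim > 0 && y_dim > 0);
    cell **rows = malloc((size_t)x_dim * sizeof *rows);
    cell *block = calloc((size_t)x_dim * (size_t)y_dim, sizeof *block);
    if (rows == NULL || block == NULL) {
        free(rows);
        free(block);
        return NULL;
    }
    for (int i = 0; i < x_dim; i++) {
        rows[i] = block + (size_t)i * (size_t)y_dim;
    }
    return rows;
}

/* Hypothetical helper: linear offset, in cells, of grid[i][j]
 * measured from grid[0] in the contiguous layout above. */
size_t petri_offset(int i, int j, int y_dim) {
    return (size_t)i * (size_t)y_dim + (size_t)j;
}

/* Hypothetical helper: releases the block and the row-pointer table. */
void free_petri(cell **grid) {
    if (grid != NULL) {
        free(grid[0]);
        free(grid);
    }
}
```

In this layout, cells with the same first index i and consecutive j are adjacent (stride 1), and cells with the same j and consecutive i are y_dim cells apart, so the count and stride passed to MPI_Type_vector would need to match whichever of these two directions a given border runs along. Separately, border_col_t in the code above is built from MPI_DOUBLE although the buffers hold cell values, which looks inconsistent with mpi_cell_t.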