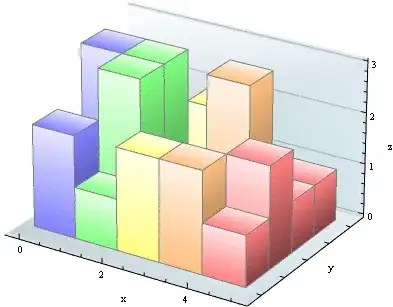

I am hoping to dummy-encode my categorical variables into numerical variables, as shown in the image, using PySpark syntax.

I read in the data like this:

    data = sqlContext.read.csv("data.txt", sep=";", header="true")

In Python, with pandas, I am able to encode my variables using the code below:

    data = pd.get_dummies(data, columns=['Continent'])
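To make the target concrete, here is a minimal sketch of what that pandas call does on a small made-up frame. The `sample` data and the `Country` column are hypothetical and only there for illustration; they are not my real data.

```python
import pandas as pd

# Hypothetical sample data; the values are made up for illustration only.
sample = pd.DataFrame({
    "Country": ["France", "Kenya", "Japan"],
    "Continent": ["Europe", "Africa", "Asia"],
})

# Replace the Continent column with one indicator column per distinct value,
# each named with the "Continent_" prefix.
encoded = pd.get_dummies(sample, columns=["Continent"])

print(encoded)
```

The `Continent` column is replaced by `Continent_Africa`, `Continent_Asia`, and `Continent_Europe`, with exactly one of them set in each row. This is the result I would like to reproduce on the Spark DataFrame.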

However, I am not sure how to do this in PySpark.

Any assistance would be greatly appreciated.