First of all, let me point out that tf.contrib.learn.DNNRegressor uses a linear regression head with mean_squared_loss, i.e. a simple L2 loss.
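For reference, here is a minimal plain-Python sketch of the quantity being plotted: the squared error averaged over the examples in one batch. It assumes unweighted examples, and `mean_squared_loss` is an illustrative name, not TensorFlow's API.

```python
def mean_squared_loss(predictions, labels):
    """Average of squared differences over one batch (unweighted L2 regression loss)."""
    if not predictions or len(predictions) != len(labels):
        raise ValueError("predictions and labels must be non-empty and the same length")
    squared_errors = [(p - y) ** 2 for p, y in zip(predictions, labels)]
    return sum(squared_errors) / len(squared_errors)


# A hypothetical batch of three predictions and their targets
print(mean_squared_loss([2.5, 0.0, 2.0], [3.0, -0.5, 2.0]))
```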

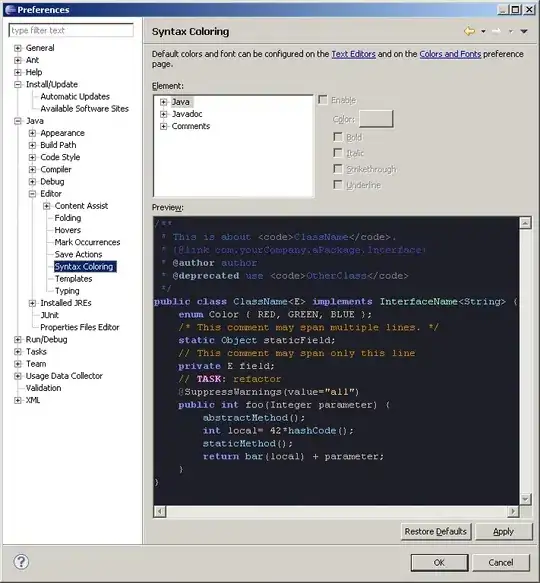

> Is it the overall loss function value (the combined loss for every step processed till now) or just that step's loss value?

Each point on the chart is the loss value at that particular step, i.e. the loss computed on that step's batch with the weights learned up to that point.
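To make the distinction concrete, here is a small plain-Python sketch contrasting the per-batch loss (what each chart point shows) with a cumulative mean over all steps so far (closer to what the question calls an "overall" loss). The loss values are made up for illustration, and `per_step_and_running_mean` is a hypothetical helper.

```python
def per_step_and_running_mean(step_losses):
    """Return (step, per-step loss, mean of all losses up to that step) tuples."""
    rows = []
    total = 0.0
    for step, loss in enumerate(step_losses, start=1):
        total += loss
        rows.append((step, loss, total / step))
    return rows


# Hypothetical per-batch losses: noisy because each batch holds different examples
losses = [4.0, 2.0, 3.0, 1.0]
for step, loss, running in per_step_and_running_mean(losses):
    print(f"step {step}: batch loss={loss:.2f}, running mean={running:.2f}")
```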

> If it is that step's loss value, then how do I get the value of the overall loss function and see its trend, which I feel should decrease with an increasing number of iterations?

There's no separate "overall" loss function; what you probably mean is a chart of how the loss changes from step to step, and that's exactly what TensorBoard is showing you. You are right that its trend should be downwards, and it isn't. This indicates that your neural network is not learning.
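Because each point is a single batch's loss, the curve is noisy even when training works, so a smoothed curve makes the trend easier to judge. TensorBoard's scalar dashboard has a smoothing slider for this purpose. The sketch below applies a plain exponential moving average, similar in spirit to that slider; the exact formula TensorBoard uses may differ between versions, and `smooth` and `weight` are illustrative names.

```python
def smooth(values, weight=0.6):
    """Exponential moving average: each output mixes the previous smoothed value and the new point."""
    if not values:
        return []
    smoothed = []
    last = values[0]  # start from the first raw value
    for v in values:
        last = weight * last + (1 - weight) * v
        smoothed.append(last)
    return smoothed


# A hypothetical loss curve that jumps around without trending down
noisy_flat = [1.0, 0.2, 0.9, 0.1, 1.0, 0.2]
print(smooth(noisy_flat))
```

If the smoothed curve stays flat, as it would for `noisy_flat`, the network is not learning; a working setup should show a smoothed curve that falls over time.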

> If this is the overall loss value, then why is it fluctuating so much? Am I missing something?

A common reason for a neural network not learning is a poor choice of hyperparameters (though there are many other mistakes you could make). For example:

- the learning rate is too large

- it's also possible that the learning rate is too small, which means that the neural network is learning, but so slowly that you can't see it

- the weight initialization may be too large; try decreasing it

- the batch size may be too large as well

- you're passing the wrong labels for the inputs

- the training data contains missing values or is not normalized

- ...
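The last data-related point can be checked before training. Here is a minimal plain-Python sketch that rejects a feature column with missing values and otherwise z-score normalizes it (zero mean, unit variance). In practice a library such as NumPy or pandas would normally do this; `check_and_standardize` is a hypothetical helper, and z-score scaling is just one common choice of normalization.

```python
import math


def check_and_standardize(column):
    """Raise if a feature column has missing (None/NaN) values; otherwise return it z-score normalized."""
    if not column:
        raise ValueError("empty column")
    for i, v in enumerate(column):
        if v is None or (isinstance(v, float) and math.isnan(v)):
            raise ValueError(f"missing value at row {i}")
    mean = sum(column) / len(column)
    std = math.sqrt(sum((v - mean) ** 2 for v in column) / len(column))
    if std == 0:
        raise ValueError("constant column cannot be standardized")
    return [(v - mean) / std for v in column]


# A hypothetical raw feature on a much larger scale than the other inputs
print(check_and_standardize([1000.0, 2000.0, 3000.0]))
```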

What I usually do to check whether the neural network is working at all is to reduce the training set to a few examples and try to overfit the network. This experiment is very fast, so I can try various learning rates, initialization variances and other parameters to find a sweet spot. Once I have a steadily decreasing loss chart, I move on to a bigger set.
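The overfitting check can be sketched without TensorFlow. As a stand-in for the network, this assumes a linear model `y ≈ w*x + b` trained by full-batch gradient descent on mean squared loss, using four hypothetical examples generated from `y = 2x + 1`. It sweeps a few learning rates and reports whether the loss decreases steadily; `train_on_tiny_set` and `decreases_steadily` are illustrative helpers, not part of any library.

```python
def train_on_tiny_set(xs, ys, learning_rate, steps=200):
    """Fit y ~ w*x + b by full-batch gradient descent on mean squared loss; return the loss history."""
    w, b = 0.0, 0.0
    n = len(xs)
    history = []
    for _ in range(steps):
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        history.append(sum(e * e for e in errors) / n)  # loss before this update
        # Gradients of the mean squared loss with respect to w and b
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return history


def decreases_steadily(history):
    """True if the loss never goes up from one step to the next."""
    return all(later <= earlier for earlier, later in zip(history, history[1:]))


xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]
for lr in (0.3, 0.05, 0.0005):
    h = train_on_tiny_set(xs, ys, lr)
    print(f"lr={lr}: final loss={h[-1]:.3g}, steadily decreasing={decreases_steadily(h)}")
```

With these numbers, the largest rate diverges, the middle one drives the loss close to zero, and the smallest one decreases steadily but is still far from zero after 200 steps, mirroring the too-large and too-small learning-rate cases in the list above.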