I use my own synthetic (simulated) images as a dataset, covering 0-9, A-Z, and nac:

- 37 categories in total (10 digits, 26 letters, and nac),

- 15 fonts,

- 1000 images per character per font,

- 509,000 images in total (some fonts are missing some characters),

- 70% used as the training set and 30% as the test set.
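The dataset figures can be cross-checked with a little arithmetic, and a 70/30 split can be sketched as below. This is a minimal sketch that assumes the split is made at random over image indices; the post does not say how the split was actually done, and `split_indices` and its seed are hypothetical.

```python
import random

NUM_CLASSES = 37         # 0-9, A-Z, plus nac
NUM_FONTS = 15
PER_FONT_PER_CHAR = 1000
TOTAL_IMAGES = 509000    # actual count; some fonts lack some characters


def split_indices(n, train_frac=0.7, seed=0):
    """Shuffle indices 0..n-1 (hypothetical helper) and split them into train/test lists."""
    indices = list(range(n))
    random.Random(seed).shuffle(indices)
    n_train = int(round(n * train_frac))
    return indices[:n_train], indices[n_train:]


full_grid = NUM_CLASSES * NUM_FONTS * PER_FONT_PER_CHAR
train_idx, test_idx = split_indices(TOTAL_IMAGES)
print(full_grid)                      # 555000 if no characters were missing
print(len(train_idx), len(test_idx))  # 356300 152700
```

The gap between 555,000 and 509,000 is the 46,000 images for characters that some fonts do not have.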

Each image is 28x28 grayscale, with a white character on a black background.
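A minimal preprocessing sketch, assuming the images are fed to the network the way the MNIST demo feeds its data: each image flattened to 784 values scaled to [0, 1], with one-hot labels. Here the label vector needs 37 entries instead of MNIST's 10. The class ordering in `CLASSES` and the helper names are hypothetical.

```python
# Hypothetical class ordering: digits, then letters, then "nac" (37 classes).
CLASSES = [str(d) for d in range(10)] + [chr(c) for c in range(ord("A"), ord("Z") + 1)] + ["nac"]
NUM_CLASSES = len(CLASSES)  # 37
IMAGE_SIZE = 28


def preprocess(pixels):
    """Flatten a 28x28 grid of 0-255 gray values into 784 floats in [0, 1].

    With a black background and white characters, background pixels become 0.0
    and stroke pixels approach 1.0, the same polarity as MNIST.
    """
    if len(pixels) != IMAGE_SIZE or any(len(row) != IMAGE_SIZE for row in pixels):
        raise ValueError("expected a 28x28 image")
    return [p / 255.0 for row in pixels for p in row]


def one_hot(label, num_classes=NUM_CLASSES):
    """Encode an integer class id as a one-hot vector of length num_classes."""
    vec = [0.0] * num_classes
    vec[label] = 1.0
    return vec


# Usage: an all-black 28x28 image labelled "A".
x = preprocess([[0] * IMAGE_SIZE for _ in range(IMAGE_SIZE)])
y = one_hot(CLASSES.index("A"))
print(len(x), len(y))  # 784 37
```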

I use the network from the TensorFlow MNIST handwritten-digit recognition demo (2 convolutional layers), and compute the loss with `tf.nn.softmax_cross_entropy_with_logits`.
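For reference, the per-example quantity that `tf.nn.softmax_cross_entropy_with_logits` returns can be written in plain Python as below. This is an illustrative re-derivation, not TensorFlow's implementation, and `softmax_cross_entropy` is a hypothetical name. The TensorFlow op applies softmax internally, so it expects unscaled logits (the last layer's output before any softmax), and each row of labels should be a valid probability distribution such as a one-hot vector.

```python
import math


def softmax_cross_entropy(labels, logits):
    """Cross-entropy between a label distribution and softmax(logits), one example.

    Returns -sum_i labels[i] * log_softmax(logits)[i]. The max logit is subtracted
    before exponentiating (log-sum-exp trick) so large logits do not overflow.
    """
    if len(labels) != len(logits):
        raise ValueError("labels and logits must have the same length")
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return -sum(y * (z - log_sum_exp) for y, z in zip(labels, logits))


# Usage with 37 classes and a one-hot label for class 0.
label = [1.0] + [0.0] * 36
print(softmax_cross_entropy(label, [0.0] * 37))             # log(37), about 3.6109
print(softmax_cross_entropy(label, [1000.0] + [0.0] * 36))  # close to 0, and exp() does not overflow
```

With uniform logits the loss is log(37), which is roughly where training should start for a 37-class problem.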

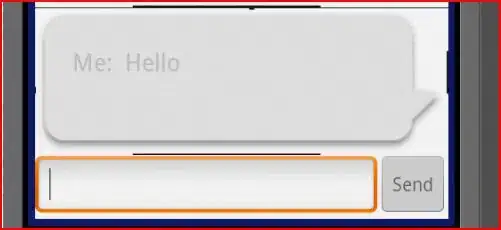

The figure shows the results after 10000 and 20000 iterations, respectively. Why does this strange behavior happen? The accuracy suddenly drops, and it does so at regular intervals.

iteration 10000

iteration 20000
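Because the drops recur at regular intervals, one way to investigate is to compute where epoch boundaries fall and compare them with the iterations at which accuracy drops. The sketch below assumes a fixed batch size of 50 (the value used in TensorFlow's MNIST demo; substitute the real one) and a training set of exactly 70% of the 509,000 images. The helper names are hypothetical.

```python
import math

TRAIN_SIZE = 356300  # 70% of 509,000 images
BATCH_SIZE = 50      # assumption: the batch size used in the MNIST demo; use your own


def iterations_per_epoch(train_size=TRAIN_SIZE, batch_size=BATCH_SIZE):
    """Training steps needed for one full pass over the training set."""
    return math.ceil(train_size / batch_size)


def epoch_boundaries(max_iter, train_size=TRAIN_SIZE, batch_size=BATCH_SIZE):
    """Iteration numbers (up to max_iter) at which a new epoch begins."""
    step = iterations_per_epoch(train_size, batch_size)
    return list(range(step, max_iter + 1, step))


print(iterations_per_epoch())   # 7126
print(epoch_boundaries(20000))  # [7126, 14252]
```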